Last year, I attended the RTC.ON conference organized by Software Mansion for the first time. I shared my take on the conference in this WebRTC.ventures blog post: A WebRTC Developer’s Take on RTC.ON 2024. I also spoke at the 2024 conference; a recording of that talk is available on YouTube: Boosting Inclusivity: Closed Captioning & Translations in WebRTC.

I loved the conference and was excited to come again. This time I was only a participant, and got to be there alongside our CEO and Founder, Arin Sime, and our CTO (and my brother), Alberto Gonzalez.

I’m happy to share my take on the conference again, this time from the perspective of a Senior WebRTC Engineer, a role I took on last January. The videos are available on the RTC.ON 2025 YouTube playlist.

RTC.ON 2025: First Day

From Super Bowl to Olympics: How CyanView Powers the World’s Biggest Broadcasts with Elixir

Opening the conference was Daniil Popov, Head of Technology at CyanView.

Daniil talked about how CyanView powers some of the world’s biggest broadcast events, like the Super Bowl, Olympics, NBA and NFL. They build custom devices (CyanView devices) that are attached to the cameras, giving control of the lenses and movement to the team in the broadcast studio. He walked us through their solution, explaining why they decided to use Elixir and MQTT. They also use Phoenix LiveView and apply color correction. He finished by talking about the dashboard they built to control the devices.

WhatsApp realtime calling, WebRTC, and how it’s being used to drive important social impact programmes in global south countries

Next was Simon De Haan, Co-founder of Turn.io.

Simon started by pointing out healthcare issues that some countries face, especially in the Global South, backed by real statistics. He talked about the difficulties of providing good healthcare and why even free healthcare is never free (transport, time, food, childcare…).

Turn.io addressed the challenge by thinking about the future, recognizing that AI is becoming more affordable, as are phone and data connectivity. WhatsApp has become the default telco in most of these countries (installed by default on many phones), which led them to build their solution with WhatsApp Calls and OpenAI (both using WebRTC) and Elixir, running on Kubernetes in cloud providers (AWS and GCP) with STUN/TURN from Cloudflare. He concluded with a few demos showcasing their solution.

This was one of the most inspiring talks of the conference, reminding us how as developers we can improve other people’s lives.

A QUIC update on MOQ and WebTransport

Following up next was Will Law, Chief Architect at Akamai.

Will talked about WebTransport, QUIC and MOQ, and gave us an update on the current state of MOQ. He mentioned that it no longer stands for “Media over QUIC”; it is just MOQ, since it can be used for other kinds of data. Then he explained how it works and listed some of the use cases, like real-time communication, VOD reviewing and ad insertion. He gave us some insights into the work that the MOQ team (Will included) is doing, like the Auth tokens, CAT-4-MOQT and WARP. He finished by showing us some examples of projects and products that are currently using MOQ, some of them really interesting. One of them is the MOQtail project, which Ali C. Begen demoed the following day.

Arin Sime interviewed Will on a special WebRTC Live broadcast from the event. Watch it here.

Observability in WebRTC: Between Metrics and Meaning

Next was Balazs Kreith, a WebRTC Engineer from Riverside.fm.

Balazs started by talking about WebRTC stats, what they are and how useful they are from the perspective of users, managers and developers. He explained why application context is important and how to use it alongside the WebRTC stats to transform metrics into insights that reflect the user experience. This helps locate an issue and address it faster.

A really interesting option Balazs highlighted was the use of adaptive media systems to automatically adapt the service depending on the user experience and quality. He also talked about building a monitoring system to collect data from the client and the media server, why it is important and how it works. He recommended some tools from ObserveRTC like client-monitor-js, schemas, samples-encoder and decoder, and observer-js, which Balazs has developed. He closed with a demo showing how you can use ObserveRTC to monitor your WebRTC app.
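To make the idea of pairing raw stats with application context a little more concrete, here is a minimal sketch. It is not taken from the talk or from ObserveRTC: the `InboundSnapshot` fields mirror a small subset of an inbound RTP stats report, while the 2% threshold and the `network_type` context key are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class InboundSnapshot:
    """Hypothetical subset of an inbound RTP stats report at one point in time."""
    timestamp_ms: float
    packets_received: int
    packets_lost: int


def loss_fraction(prev: InboundSnapshot, curr: InboundSnapshot) -> float:
    """Fraction of packets lost between two snapshots (0.0 to 1.0)."""
    received = curr.packets_received - prev.packets_received
    # The cumulative loss counter can go down when late packets arrive,
    # so the delta is clamped at zero.
    lost = max(curr.packets_lost - prev.packets_lost, 0)
    expected = received + lost
    return lost / expected if expected > 0 else 0.0


def to_insight(loss: float, context: dict) -> str:
    """Combine a metric with application context (assumed keys) into a message."""
    if loss < 0.02:  # illustrative threshold, not a recommendation
        return "ok"
    if context.get("network_type") == "cellular":
        return "degraded: packet loss on a cellular connection"
    return "degraded: packet loss on the receive path"


prev = InboundSnapshot(timestamp_ms=0, packets_received=1000, packets_lost=10)
curr = InboundSnapshot(timestamp_ms=1000, packets_received=1450, packets_lost=60)
print(to_insight(loss_fraction(prev, curr), {"network_type": "cellular"}))
```

The metric alone only says that packets were lost; the context is what turns it into something a support or product team can act on.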

Another open source WebRTC monitoring tool is Peermetrics, which was recently acquired by our team at WebRTC.ventures.

From RTP Streams to AI Insights: Building Real-Time AI Pipelines with Juturna and Janus

The next speaker was Antonio Bevilacqua, a Machine Learning Engineer from Meetecho (Janus team).

Antonio’s topic was Juturna, a new tool that they have built to create real-time AI pipelines. You can integrate it into your Janus WebRTC application, but also use it in other contexts. Antonio explained some of its features, like dynamic hot-pluggable nodes (drop in a new plugin and it is discovered at runtime) and being developer focused. He showed an example pipeline to process audio and video separately, with voice detection, transcription, translation and motion detection. These pipelines are configured with JSON files. Antonio ended with a code walkthrough showing us how to create and run your pipe, import nodes or pipelines and how to design your own node.

Juturna is a brand new project and they are still working on adding new features. It is definitely a really interesting tool that our team at WebRTC.ventures will keep an eye on for future projects.

Video composition using the GPU: a look at Vulkan Video

Next we had Jerzy Wilczek from Software Mansion.

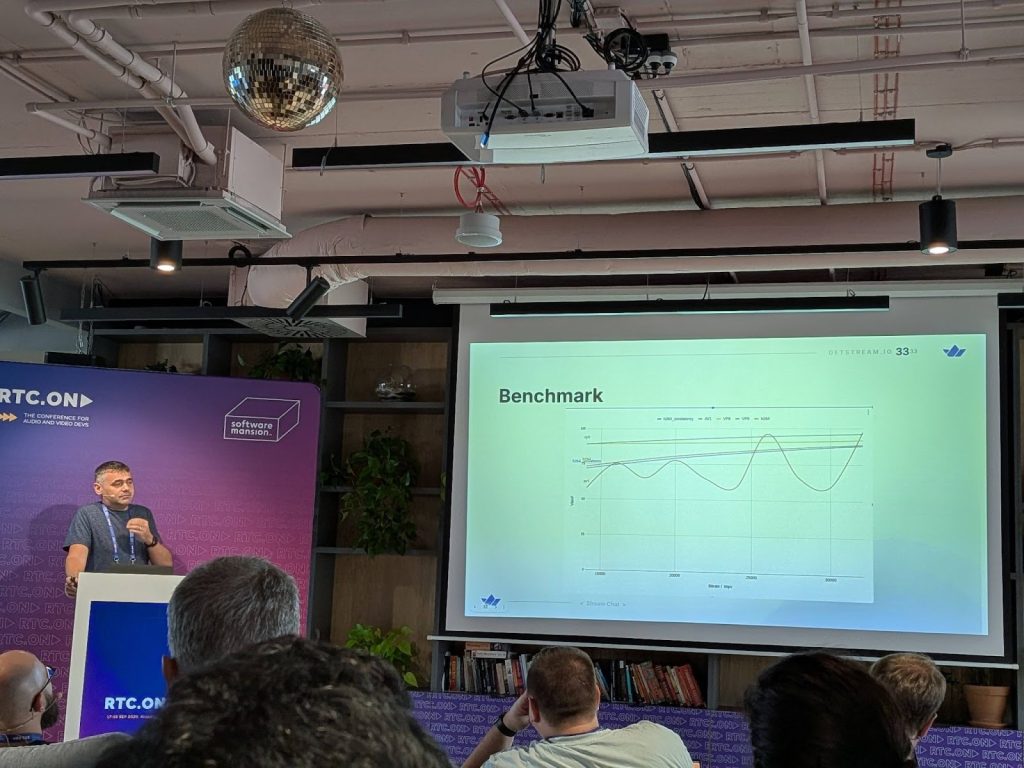

Jerzy introduced Smelter, a tool they built to process video from an input (live or not) and produce an output. It uses Vulkan, an API for rendering that uses the GPU, and in particular Vulkan Video, which is an extension that provides access to hardware encoding and decoding. It reduces the number of times that the data has to be sent between the CPU and GPU, improving the performance. They also built a Rust library called “vk-video” that simplifies the hard part of Vulkan Video (they are still developing it). Jerzy finished by talking about some pain points they faced and showed us a benchmark graph with the performance difference between Vulkan Video (GPU), Vulkan Video with CPU, and FFmpeg libx264, with Vulkan Video coming out on top.

Designing a media container library for the web

Coming up next was Christoph Guttandin from Media Codings.

Christoph started by talking about WebCodecs and media containers. He explained the complications in designing a media container library, like there being too many different containers, and said there are too many complications to create a specification. There is libavformat, which FFmpeg uses, but it has some drawbacks. Then he listed some of the characteristics that a media container library should have. He was building a solution, but then found out that, luckily, someone had already built something similar. It is called mediabunny and is a JavaScript implementation of the most popular containers. It meets most of the characteristics that he listed before. At the end, he showed the performance (in size and time) of some libraries, like libav.js, mediabunny and his unfinished project.

Arin Sime interviewed Simon on a special WebRTC Live broadcast from the event. Watch it here.

Finding the perfect balance between easy and flexible audio Interface – Web Audio API

To follow up we had Michał Sęk from Software Mansion.

Michał started by explaining that he has been working on a library called React Native AudioAPI, which aims to bring the Web Audio API to the React Native ecosystem. He gave a general overview of the Web Audio API, explaining the types of nodes we have (sources, processing and output). He did a code walkthrough explaining how to add audio effects and showing us the results. He also pointed out some issues, like not being able to start it more than once. He concluded by explaining how it works under the hood, the issues for real-time use, and how to solve them.

How Low Can You Go? Running WebRTC on Low-Powered (and Cheap) Devices

The next speaker was Dan Jenkins, founder and CEO at Nimble Ape and Everycast Labs, and also the organizer of CommCon.

The topic of Dan’s talk was researching the use of WebRTC on low-powered and cheap devices. He tested many different devices, like the Raspberry Pi 3, and also showed us a demo from a few years ago where Tim Panton showcased how WebRTC can run on a Lego EV3. He also talked about some common low-powered devices that use WebRTC, like surveillance cameras (doorbells), baby monitor cameras, Chromecast, Stadia Controller… But he wanted to go lower, so he tried microcontrollers like the Raspberry Pi RP2350 and the ESP32-S3. Dan ran a live demo showing how you can use the ESP32-S3 to connect to the OpenAI Realtime API to send and receive audio. Then he talked about the Dottie Boards, an LED matrix display with a microcontroller (a product they sell), and right after he ran a live demo showing how he used the WebRTC data channel to send commands from his laptop to the board, drawing on it. He finished with some insights into what may be coming in the future, like AI and WebRTC at the edge.

Dan mentioned that after a year off, hopefully we will have CommCon back in 2026. Feel free to check out my post on CommCon 2024 highlights.

Trimming Glass to Glass latency of a Video stream one layer at a time

Next we had Tim Panton, Co-Founder and CTO at Pi.pe.

Last year at CommCon 2024, Tim talked about using WHIP to provide low-latency live audio and video from a camera placed in a race car. This talk followed up on that, but focused on the process of lowering the latency as much as possible, one bit at a time. First, he gave a brief introduction to how their pipe solution works and how they managed to measure the latency as accurately as possible, with a smart hardware hack involving flashing lights and sensors. Then, he walked us through small improvements in different parts of the workflow, like the use of IPv6, getting rid of GStreamer, unsyncing audio, and using host candidates, among others, up to a total of 12. They were able to get down to 170ms, although he mentioned that not all of these improvements may be available in other scenarios and circumstances.

A really interesting talk if you aim to reduce latency in your WebRTC project or build one!

Secure Collaborative Cloud Application Sharing with WebRTC

To finish off the first conference day we had 2 speakers, Damien Stolarz and David Diaz, from Evercast.

We saw Damien present at RTC.ON last year about their product that uses Apple Vision Pro and WebRTC to help creators, some of them major film and TV show producers, do remote editing. This talk was a follow-up, focusing on the new feature they created called App Share, which gives control of a remote screen. They talked about the architecture, showed a diagram of the flow and explained the security implementation, and concluded with another demo.

As last year, this did not disappoint. It is impressive to see a device like Apple Vision Pro and WebRTC be used to build a 3D 4k collaborative application, with remote control available this time.

Boat Party!

This year, Software Mansion organized the boat party for the first night of the conference. It took place on the same boat as last year, on the Vistula river, which runs through Krakow. We had some food and drinks and had time to talk with all the speakers and other participants like myself.

RTC.ON 2025: Second Day

Challenges in Realtime livestreaming at 4k / 60FPS

To start off the day, we had Cezary Siwek from Stream (the other sponsor besides WebRTC.ventures).

The topic was the challenges of real-time live streaming at 4k and 60 FPS. Cezary explained that there is a market for this kind of solution: paid (high-quality) content, gaming, live music, auctions… Then he walked us through the challenges they ran into during this project, starting with 4k, then increasing the FPS to 60, broadcasting to 20k viewers and supporting stereo. He went into detail about the implementation, the things they tried and what they stuck with, covering codec choice, simulcast and RTMP, and also scaling, which required adding SFUs as load balancers for other SFUs. He stated that stereo requires SDP munging, which is not great, and that WebRTC is good for live streaming although most publishers prefer RTMP. He finished by outlining some of the future improvements they will add to their solution.

Arin Sime interviewed Cezary on a special WebRTC Live broadcast from the event. Watch it here.

AI assisted transcriptions in Jitsi Meet

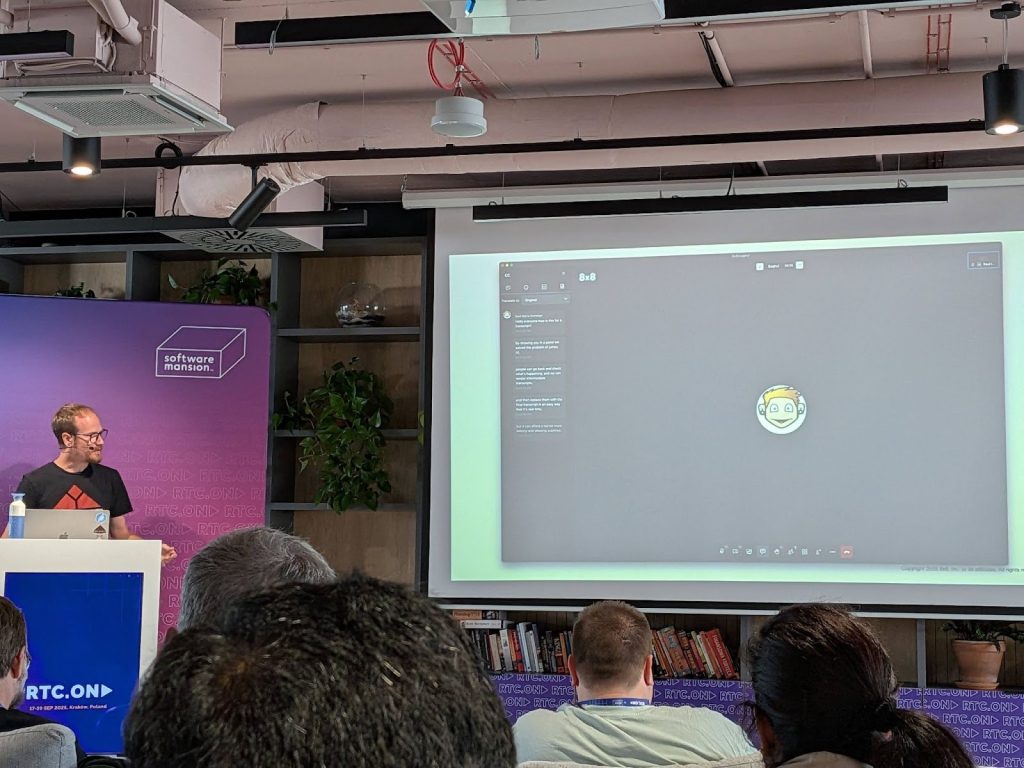

Next we had another talk with 2 speakers: Saúl Ibarra Corretgé and Răzvan Purdel from 8×8 (Jitsi team).

The topic was building AI assisted transcriptions in Jitsi Meet. They started by explaining the multiple components you have at your disposal with Jitsi Meet to create a video conferencing platform. Then they went through the different solutions that helped them get to this point.

They started with the first-generation transcriber, which used the Google Speech API. Then, after the LLM revolution, they built Skynet, which mixes transcription and LLMs using Whisper and Llama. It had some limitations, so they decided to work on a new solution that did not include real-time transcription, only post-call analysis like transcription and summaries. They explained the architecture and the pros, like being cheaper, more flexible, more accurate and multilingual, with the option to have real-time transcriptions with Skynet.

To finish up, they talked about OpenAI just recently making their Realtime API generally available, which includes real-time transcriptions at a really competitive price. This is why they decided to try it out, using Cloudflare Workers to send audio to OpenAI, get the transcription back and store it. It is still a prototype, but they will make it available as an additional component for the customers that want it.

Another interesting tool to keep in mind when building WebRTC applications. We will definitely check it out for future projects that require AI integration.

The Future in Focus: AI and the Next Wave of Real-Time Video Intelligence

Next we had Chris Allen, co-founder and CEO at Red5.

This presentation was about using AI for real-time video processing, and it focused especially on the use of LLMs for real-time video detection. Chris explained why real-time video processing is important and how they built a solution that extracts frames in real time, encodes them for the AI to process and finally passes the AI-processed content back. This also includes transcoding and mixing, all server side. Then he showed us some examples, like real-time detection of hazard events on highways, where you could see a fire starting in the forest next to the road and their solution detecting it really fast. They also built a meeting application that includes real-time transcription and ran a live demo to showcase it. He ended by talking about future work Red5 is planning.

What Chris showed us about the real-time video detection of dangerous situations is something really useful that can help improve our society and prevent catastrophes. There are also many other use cases in which it could be helpful.

Where are WebRTC and telephony voice agents headed?

Next we had Rob Pickering, Founder of aplisay, talking about where WebRTC and telephony voice agents are headed.

Rob started by listing some of the challenges you can face when building a project that integrates voice agents, like turn prediction, latency, handling interruptions and controlling the conversation. Then he explained that aplisay can integrate with different voice pipeline tools, like pipecat, LiveKit, Ultravox and jambonz, to use STT, TTS and LLM services, and showed us a workflow. Then he ran a live demo talking with an AI agent over WebRTC and audio calls, and walked us through some parts of the code, showing how the agent is defined.

To finish, Rob talked about some of the bad things that can happen with AI and reminded us to be cautious and to focus on the positive impact we can create, like what we saw in Simon De Haan’s presentation on the first day of the conference: improving people’s lives.

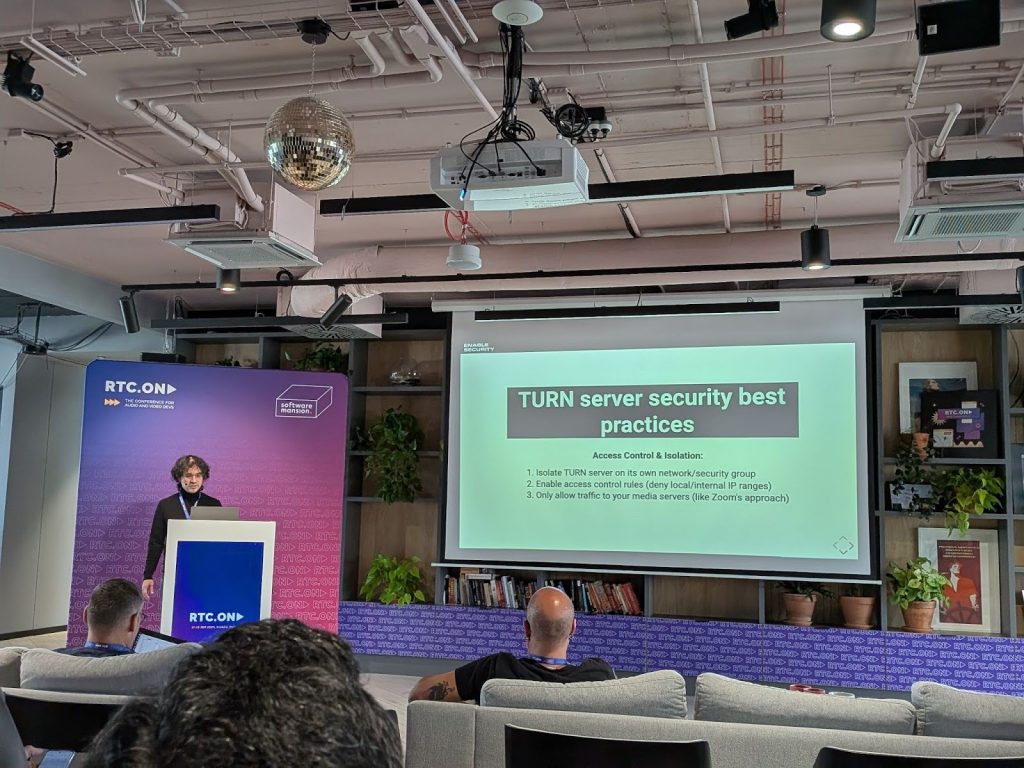

TURNed inside out: a hacker’s view of your media relay

The next speaker was Sandro Gauci, CEO at Enable Security.

Sandro talked about the security issues that TURN (an important component when building a WebRTC application) brings. He listed some of those potential issues, like TURN relay abuse (using it for non-legitimate traffic), Denial of Service (DoS) and software vulnerabilities, and gave more details on how these attacks are performed. He also explained that a few years ago they took part in a bug bounty project for Slack to hack their TURN servers, in which they were able to exploit those vulnerabilities. Of course, after talking about vulnerabilities, the final part was about how to prevent them. He shared TURN server security best practices related to access control and isolation, protocol hardening, and operations and monitoring.
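As a small illustration of the access control and isolation side, here is a minimal sketch of one common mitigation against relay abuse: refusing to relay traffic to private, loopback or link-local peer addresses. This is not code from the talk, and production TURN servers typically handle this through their own configuration; the deny list and the `is_peer_allowed` helper below are assumptions for illustration only.

```python
import ipaddress

# Illustrative deny list: internal ranges a relay should normally never reach.
DENIED_PEER_NETWORKS = [
    ipaddress.ip_network(cidr)
    for cidr in (
        "10.0.0.0/8",
        "172.16.0.0/12",
        "192.168.0.0/16",
        "127.0.0.0/8",
        "169.254.0.0/16",  # link-local, includes cloud metadata endpoints
        "::1/128",
        "fc00::/7",
        "fe80::/10",
    )
]


def is_peer_allowed(peer: str) -> bool:
    """Return False when the requested peer address falls in a denied range."""
    addr = ipaddress.ip_address(peer)
    # Unwrap IPv4-mapped IPv6 addresses so they cannot bypass the IPv4 rules.
    mapped = getattr(addr, "ipv4_mapped", None)
    if mapped is not None:
        addr = mapped
    return not any(addr in network for network in DENIED_PEER_NETWORKS)


for candidate in ("203.0.113.7", "10.1.2.3", "::ffff:192.168.1.1"):
    print(candidate, "allowed" if is_peer_allowed(candidate) else "denied")
```

A check like this is only one layer; it complements, rather than replaces, authentication, rate limiting and monitoring.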

A really interesting talk for us developers who build WebRTC applications, to remind us of how to keep our TURN servers secure.

The Future of AI Is Distributed: Tradeoffs in Performance, Privacy, and Power

Up next was Jakub Chmura from Software Mansion.

Jakub talked about the cons of running AI in the cloud (cost, privacy, scalability, latency and the need for a connection) versus on edge devices (like power consumption and reduced computational power). He gave us a few examples of projects that run on edge devices, like a video inpainting application they presented at last year’s conference, Whisper WebGPU, which provides real-time transcriptions, Google Meet adding a blur to the background, and FastVLM from Apple, which can describe images. He also pointed out that some of the biggest companies are investing in making it more accessible to run AI in browsers and on phones, like Google with Gemini Nano and Apple with Apple Intelligence.

Next, he talked about ExecuTorch, an extensive toolkit for edge AI inference, and then presented React Native ExecuTorch, a tool they built that abstracts the complexity of ExecuTorch and supports many features. He ended the talk with a live demo running Private Mind (an app they built) on his phone, showing us how it was able to reply to questions related to a PDF file that he uploaded.

Multimodal AI for Real-Time Creator Experiences

As the next speaker we had Niklas Enbom, Founder and Head of R&D at Gigaverse and one of the founders of WebRTC!

Niklas started by talking about their application, built to improve the experience of creators, with different use cases like AMAs, live podcast taping, group coaching and educational workshops. He listed some of the features, like moving the audience onto the stage, creating polls with voice commands, getting post-call assets (e.g. highlight clips and transcripts), highlighting important messages and relevant questions, fact checking when a topic is discussed, auto moderation and many more, and showcased them through a demo. An important point that Niklas made is that different data requires different latency: for instance, transcription has to be real-time, poll creation with voice commands can have a delay of a few seconds but not too many, and summaries and highlight clips can be generated with more delay after the call has ended. He also went through some of the performance tricks they used, like using different LLMs for each case and picking the smallest model that is good enough. He finished by talking about other features that may come next, related to video understanding, like having an auto-producer (to optimize the video layout), video moderation and product highlights, with a video demo of the latter.
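The “different data, different latency” idea combined with “the smallest model that is good enough” can be sketched as a simple selection rule. This is only an illustration: the model names, latencies, quality scores and task budgets below are all made-up assumptions, not details from Gigaverse’s implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str                 # hypothetical model label
    typical_latency_s: float  # assumed end-to-end latency
    quality: int              # assumed quality tier, higher is better


MODELS = [
    Model("small", 0.2, 1),
    Model("medium", 1.5, 2),
    Model("large", 20.0, 3),
]

# task -> (latency budget in seconds, minimum quality tier); illustrative values
TASKS = {
    "transcription": (0.5, 1),   # has to feel real-time
    "voice_poll": (3.0, 2),      # a few seconds of delay is acceptable
    "summary": (60.0, 3),        # generated after the call has ended
}


def pick_model(task: str) -> Model:
    """Pick the smallest model that meets the task's budget and quality bar."""
    budget_s, min_quality = TASKS[task]
    candidates = [
        m for m in MODELS
        if m.typical_latency_s <= budget_s and m.quality >= min_quality
    ]
    if not candidates:
        raise LookupError(f"no model fits the constraints for {task!r}")
    return min(candidates, key=lambda m: m.quality)


for task in TASKS:
    print(task, "->", pick_model(task).name)
```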

Streaming Bad: Breaking Latency with MOQ

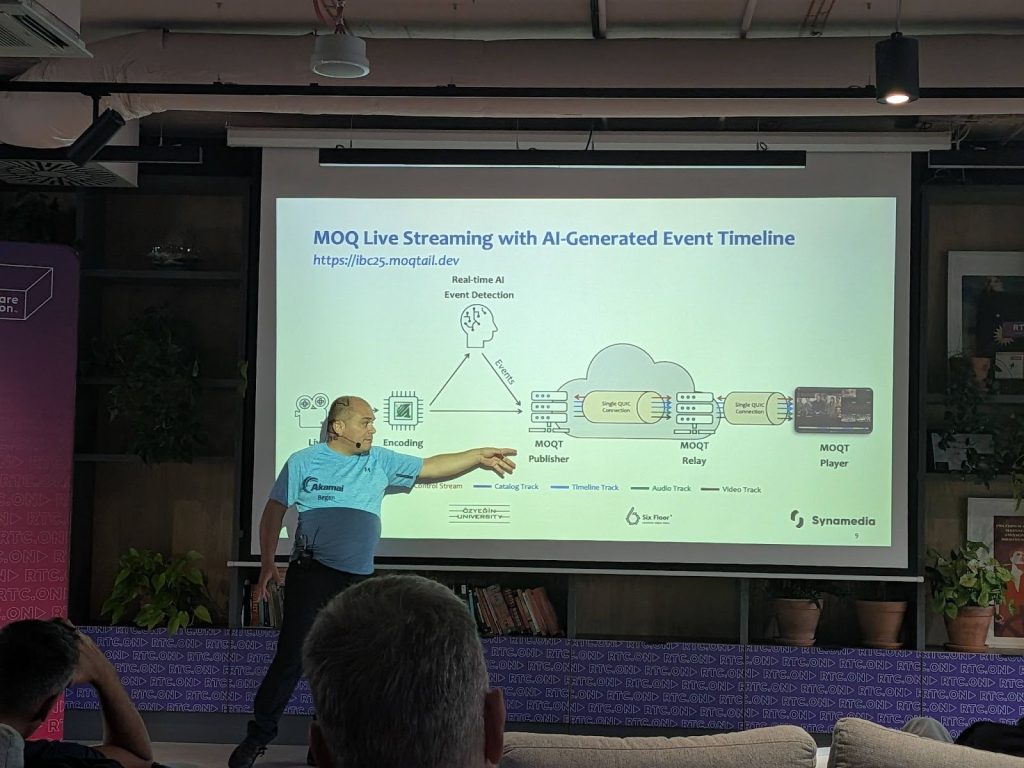

The last speaker was Ali C. Begen (Professor at Ozyegin University), deeply involved in the MOQ development.

Ali followed up on his talk from last year’s RTC.ON about MOQ, which I was excited to hear. He started by explaining that QUIC works as a multi-lane highway, able to partition data into multiple streams with different priorities that you can manage. He showed that MOQ fits across the whole spectrum between scalability and interactivity, intersecting with VoD streaming (DASH and HLS), live streaming (DASH and HLS) and real-time (WebRTC). This is due to the two different types of transmission that MOQ has: pub/sub and fetch.

Then he talked about MOQtail, a project they created, giving details about their implementation and showing us a few live demos. The first one was about live streaming a basketball game, showing us the time difference between the publisher (where the game is being played) and the subscriber (Ali’s computer) and showcasing how he can fine-tune the latency between 0.3 seconds (real-time) and 5+ seconds, with a playback catchup rate (slowing down or speeding up the video when changing the target latency). It also included a timeline of the past 8+ minutes with AI-generated highlights of the game, like 2-point and 3-point shots, and the ability to select those moments to open a new video frame with the replay, with both videos (live and replay) playing at the same time. The interesting thing is that you can open up to 17 different videos, all of them using a single QUIC connection, and with QUIC you can prioritize which one is more important; for instance, you don’t want to pause the live stream. While running this demo he answered some interesting questions about the implementation. Right after, he ran a second live demo, in which he showcased time travel in a video conference: being able to rewind audio and video x seconds for a specific participant. This way you can replay what happened in the past few seconds, all while staying in the live conference.
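The playback catchup rate can be pictured as a small control rule: speed up when the player is further behind live than the target, slow down when it is too close. The sketch below is a generic proportional controller, not MOQtail’s actual algorithm; the `gain`, `max_adjust` and `tolerance_s` parameters are assumptions chosen for illustration.

```python
def playback_rate(
    current_latency_s: float,
    target_latency_s: float,
    gain: float = 0.5,          # assumed: how aggressively to correct
    max_adjust: float = 0.1,    # assumed: cap the change at +/-10% speed
    tolerance_s: float = 0.05,  # assumed: dead zone to avoid constant jitter
) -> float:
    """Return a playback rate that nudges latency toward the target."""
    error = current_latency_s - target_latency_s
    if abs(error) <= tolerance_s:
        return 1.0
    # Positive error means we are too far behind live, so play faster.
    adjust = max(-max_adjust, min(max_adjust, gain * error))
    return 1.0 + adjust


# Moving the target from 5 s down to 0.3 s: play faster until caught up.
print(playback_rate(current_latency_s=5.0, target_latency_s=0.3))
# Moving the target from 0.3 s up to 5 s: play slower to build latency.
print(playback_rate(current_latency_s=0.3, target_latency_s=5.0))
```

Capping the adjustment keeps the speed change subtle enough that viewers are unlikely to notice it, at the cost of taking longer to reach the new target.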

Ali talked about other projects they built with MOQ, like SharePlay which synchronizes VoD for multi-party video play (everyone watching at the same time). He concluded with a brief explanation of what is left to do for MOQ.

As I said, this was one of the talks I was most excited about, and it did not disappoint. It was a really interesting talk with some real applications that are quite impressive. MOQ has some interesting features, and although it will not replace WebRTC, it will coexist with it and with DASH and HLS as well.

Ali was recently a guest on WebRTC Live: “MOQ Me, Don’t WebRTC Me” with Ali C. Begen

That’s a Wrap!

It was a pleasure to attend the RTC.ON conference for the second time. It looks to have already established itself as one of the must-go WebRTC (more generally audio and video) conferences in Europe.

I got to meet new people and see others again from past conferences, and of course to meet in person with my WebRTC.ventures team (Arin and Alberto). We had a great time there, learning, expanding our network, and getting inspiration for new projects.

Further Reading: