I’ve written recently about the potential of combining WebRTC and bots into one application. In June, David Alfaro and I gave a presentation at WebRTC Argentina that showed some simple examples of how you might combine a bot and live human support for telemedicine applications.

You can see the video of that presentation below, or read the transcript and see the slides on RealTimeWeekly.

In this post, however, I want to talk a little more about the code behind such an application.

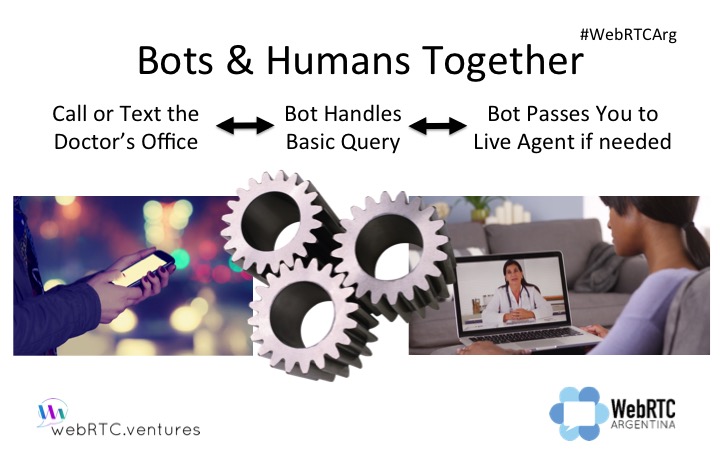

In that presentation at WebRTC Argentina, I first talked about why we would combine bots with live agents reached over WebRTC.

The basic idea is that you contact the doctor’s office (or any business; this is not limited to telehealth) by phone, web chat, or SMS and ask some basic questions. The bot handles those questions to the best of its abilities, and it should be able to answer many simple queries about the business, appointments, meeting times, and so on.

At some point, you will ask a question that is too complicated for the bot, and that’s when WebRTC comes back into the picture. As a recent TechCrunch article noted, chatbots are not a panacea for all user questions and may actually be a hindrance in many cases.

So if you’re going to use chatbots, you also need the ability to escalate the conversation to a human.

In this simple video from my WebRTC Argentina presentation, I show a chatbot running on top of Twilio Video that answers some basic questions and then transfers you to an agent for more complicated ones.

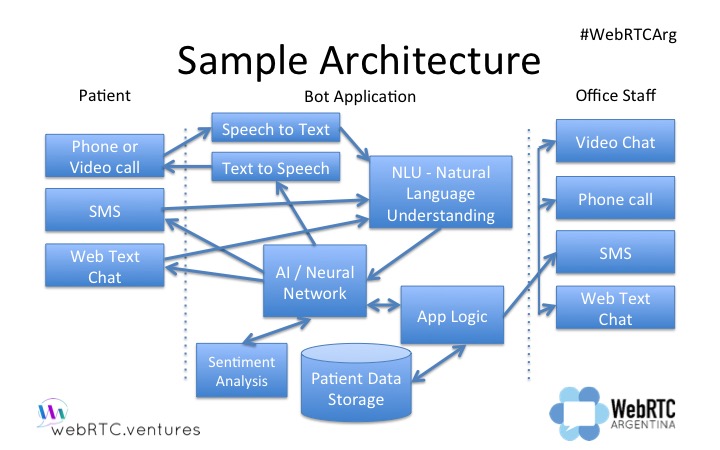

As you’ll see in the steps below, I’ve made a simplistic implementation to illustrate the basic concepts. To see how complicated an architecture for bots and WebRTC could be, take a look at this diagram from our presentation at WebRTC Argentina.

Multiple input channels (SMS, video chat, telephony) can all connect to a semi-intelligent bot that understands your spoken or written questions, interprets what you mean, and then queries a database to give you the responses you need. When it can’t find an answer, it connects you to a live human via WebRTC video chat, SMS, or a phone call.
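To make the shape of that architecture a little more concrete, here is a minimal TypeScript sketch of just the routing idea, assuming every channel can first be normalized into one message format. The types, the `routeMessage` function, and the tiny in-memory `KNOWLEDGE_BASE` (standing in for the database) are all hypothetical, and the substring matching is a placeholder for a real language-understanding service.

```typescript
// Minimal sketch of channel-agnostic routing. All names here are hypothetical.

type Channel = "sms" | "video-chat" | "telephony";

interface InboundMessage {
  channel: Channel;
  customerId: string;
  text: string;
}

// Either the bot answers, or the customer is handed to a human on the same channel.
type RouteResult =
  | { kind: "bot-answer"; channel: Channel; text: string }
  | { kind: "handoff"; channel: Channel; customerId: string };

// Stand-in for the database the bot queries, keyed by lowercase topic.
const KNOWLEDGE_BASE: { [topic: string]: string } = {
  hours: "The office is open 9am to 5pm, Monday to Friday.",
  parking: "Free parking is available behind the building.",
};

// Every channel is normalized to InboundMessage first, so this logic does not
// care whether the question arrived by SMS, video chat, or phone.
function routeMessage(msg: InboundMessage): RouteResult {
  const lowered = msg.text.toLowerCase();
  for (const topic of Object.keys(KNOWLEDGE_BASE)) {
    if (lowered.indexOf(topic) !== -1) {
      return { kind: "bot-answer", channel: msg.channel, text: KNOWLEDGE_BASE[topic] };
    }
  }
  // No answer found: escalate to a live human on the channel the customer used.
  return { kind: "handoff", channel: msg.channel, customerId: msg.customerId };
}

console.log(routeMessage({ channel: "sms", customerId: "c1", text: "What are your hours?" }));
console.log(routeMessage({ channel: "video-chat", customerId: "c2", text: "Is this rash serious?" }));
```

Keeping the handoff on the customer’s original channel is a design choice in this sketch; a real system might instead offer to upgrade an SMS conversation to a video call.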

Implementing that full architecture is a big effort! There are many off-the-shelf services that we can plug into parts of that architecture, such as the IBM Watson APIs for language understanding and machine learning, but it’s not as simple as connecting a bunch of blocks together.

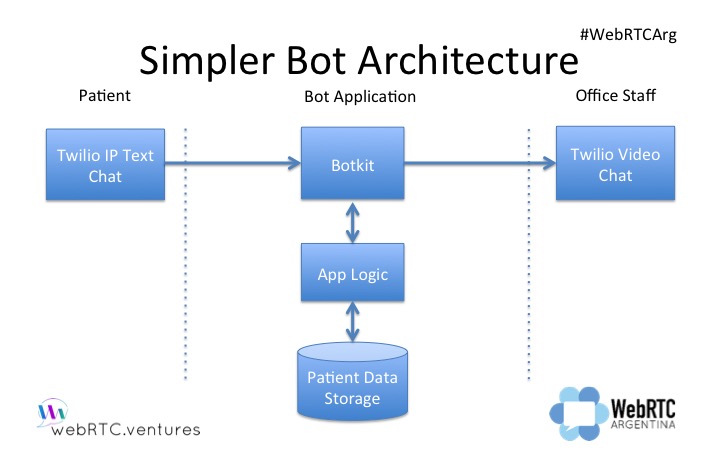

To explore the concepts, however, we can build a simpler example. Let’s connect a user via text chat only, using Twilio IP Messaging. The messages the user sends through a standard web chat interface are relayed to Botkit for some basic bot functionality. In this case, Botkit is not doing any complicated Natural Language Understanding or Artificial Intelligence, just basic pattern matching. Finally, when necessary, we use Twilio Video to start a video chat with a real person.

Step 1: Learn Twilio IP Messaging

Example Repo: https://www.twilio.com/docs/api/ip-messaging/guides/quickstart-js

To learn how text chat over the Twilio platform works, the Twilio quickstart above gives you a great starting point for a JavaScript-based solution. They have quickstarts for other languages, and of course you are not limited to using Twilio for text chat. You can use many other commercial platforms or set up your own WebSocket-based solution. I chose Twilio IP Messaging here just to be consistent with the Twilio Video integration in Step 3.

Step 2: Combine Twilio IP Messaging and Botkit

Twilio IP + Botkit

Example Repo: https://github.com/howdyai/botkit/blob/master/readme-twilioipm.md

Starting with the example above, you can see how to combine Twilio IP Messaging and Botkit. Basically, you have the bot join a chat room with your customer. Others can join the room too if you want the bot to listen to a group discussion for keywords, or you can just use the chat room as a 1-1 place to talk with the bot, as I showed in my example video.

Botkit gives us a Bot Controller API that listens to the chat room for keywords, using a method called “hears”.

This allows you to do some pattern matching to look for key phrases from the human, such as “when is my appointment?”. It’s an imperfect solution, because you have to guess which keywords humans will use for the queries you want the bot to answer, but it is at least a starting point, and it is certainly simpler to begin with than a full natural language and AI solution.
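The snippet below is a toy, self-contained imitation of the keyword-matching idea behind “hears”, written in TypeScript so it runs without Botkit or Twilio. It is not Botkit’s implementation or API: `KeywordBot`, its `hears` and `handle` methods, and the canned replies are hypothetical names and data chosen only to mirror the concept.

```typescript
// Toy imitation of keyword matching in the spirit of Botkit's "hears".
// This is NOT Botkit's code; KeywordBot and its methods are hypothetical.

type ReplyHandler = (text: string) => string;

interface KeywordRule {
  patterns: RegExp[];
  handler: ReplyHandler;
}

// Escape characters that have special meaning inside a regular expression.
function escapeRegExp(s: string): string {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

class KeywordBot {
  private rules: KeywordRule[] = [];

  // Register a handler for any of the given keywords (whole words, any case).
  hears(keywords: string[], handler: ReplyHandler): void {
    const patterns = keywords.map((k) => new RegExp("\\b" + escapeRegExp(k) + "\\b", "i"));
    this.rules.push({ patterns, handler });
  }

  // Return the reply of the first rule with a matching keyword, or null.
  handle(text: string): string | null {
    for (const rule of this.rules) {
      if (rule.patterns.some((p) => p.test(text))) {
        return rule.handler(text);
      }
    }
    return null;
  }
}

const bot = new KeywordBot();
bot.hears(["appointment", "booking"], () => "Your next appointment is Tuesday at 10am.");
bot.hears(["hours", "open"], () => "We are open 9am to 5pm.");
console.log(bot.handle("When is my appointment?"));
console.log(bot.handle("Can you explain my lab results?")); // no keyword matches, so null
```

The whole-word matching also shows the weakness described above: “appointments” would not trigger the “appointment” rule unless you list both spellings.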

Step 3: Twilio Video

Twilio Video Example Repo: https://www.twilio.com/docs/api/video/guide/quickstart-js

Once you’ve confirmed that the Botkit responses are working in your chat room, you can look at triggering a video call.

In my code, I am doing that based on a keyword of “human”, so if a frustrated user asks to talk to a human, I will start a call.
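Here is a minimal sketch of that escalation check, assuming the bot has already produced a reply (or `null` when no keyword matched). `ESCALATION_PATTERN`, `decideAction`, `handleUserMessage`, and the `startVideoCall` callback are hypothetical; in the application described here, the callback is where the Twilio Video quickstart code would run. The pattern also accepts “operator”, an assumption based on the keywords mentioned later in this post.

```typescript
// Sketch of keyword-triggered escalation. All names here are hypothetical.

// Whole-word, case-insensitive match on "human" (or "operator").
const ESCALATION_PATTERN = /\b(human|operator)\b/i;

interface BotAction {
  escalate: boolean;
  text: string; // what the bot sends back to the user
}

function decideAction(userText: string, botReply: string | null): BotAction {
  if (ESCALATION_PATTERN.test(userText)) {
    return { escalate: true, text: "Connecting you to a member of our staff by video..." };
  }
  if (botReply === null) {
    return { escalate: false, text: "Sorry, I didn't understand. Type 'human' to talk to a person." };
  }
  return { escalate: false, text: botReply };
}

// startVideoCall is injected so this logic can be tested without Twilio;
// in the real app it would run the Twilio Video call setup.
function handleUserMessage(userText: string, botReply: string | null, startVideoCall: () => void): string {
  const action = decideAction(userText, botReply);
  if (action.escalate) {
    startVideoCall();
  }
  return action.text;
}

console.log(handleUserMessage("I want to talk to a human!", null, () => console.log("starting video call")));
```

Passing the call setup in as a callback keeps the decision logic separate from the video SDK, so you can swap in a queue (see Improvement #3) without touching the keyword check.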

Detecting that keyword causes the application to start a Twilio Video call, and you can find examples of the video code in the repo above. My code is not doing anything complicated; I’ve copied their Twilio Video quickstart code verbatim.

In that Twilio Video code, you create a new Conversations object, attach a media stream to it, and attach it to the video element.

Improvements you can make on this example

Improvement #1 – Add in Natural Language Understanding

A more sophisticated solution would not be based on keywords like “human” or “operator”. Instead, you would use Natural Language Understanding and AI, with confidence scores. If the confidence score tied to a bot’s answer is too low, that means the bot is not certain it has given you a good answer. That is a good indicator that you should get a human involved.
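A minimal sketch of confidence-based escalation, assuming your NLU service returns candidate intents, each with a confidence between 0 and 1. The `NluResult` shape, the `decide` function, and the 0.6 threshold are assumptions for illustration, not any particular vendor’s API; in practice you would tune the threshold against real conversations.

```typescript
// Sketch of confidence-based escalation. NluResult and the threshold are
// assumptions for illustration, not a specific NLU vendor's API.

interface NluResult {
  intent: string;
  confidence: number; // 0 (no idea) to 1 (certain)
}

interface Decision {
  escalate: boolean;
  detail: string; // the chosen intent, or why we are escalating
}

const CONFIDENCE_THRESHOLD = 0.6;

function decide(results: NluResult[], threshold: number = CONFIDENCE_THRESHOLD): Decision {
  if (results.length === 0) {
    return { escalate: true, detail: "no intent recognized" };
  }
  // Keep the highest-confidence candidate.
  let best = results[0];
  for (const r of results) {
    if (r.confidence > best.confidence) {
      best = r;
    }
  }
  if (best.confidence < threshold) {
    return { escalate: true, detail: `low confidence (${best.confidence.toFixed(2)}) for "${best.intent}"` };
  }
  return { escalate: false, detail: best.intent };
}

console.log(decide([{ intent: "book_appointment", confidence: 0.92 }]));
console.log(decide([{ intent: "billing", confidence: 0.41 }, { intent: "refill", confidence: 0.38 }]));
```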

Improvement #2 – Add in Sentiment Analysis

Additionally, you could add sentiment analysis to detect, from what the human is typing, whether they are getting frustrated. If the bot determines that the human is getting annoyed by its botty answers, it should offer to bring a human representative into the conversation.
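As a deliberately naive stand-in for real sentiment analysis, the sketch below counts words from a small, made-up list of negative words across the user’s last few messages. The word list, window size, threshold, and the `FrustrationTracker` class are illustrative assumptions; a real deployment would call a sentiment analysis service and use its score at the same decision point.

```typescript
// Deliberately naive frustration detector: counts words from a small,
// made-up lexicon over the user's last few messages. All names, the word
// list, and the numbers below are illustrative assumptions.

const NEGATIVE_WORDS = ["useless", "stupid", "annoying", "frustrated", "wrong", "ridiculous"];
const RECENT_WINDOW = 3;  // how many recent messages to consider
const OFFER_HUMAN_AT = 2; // negative-word hits that trigger an offer

class FrustrationTracker {
  private recent: string[] = [];

  // Record a user message and report whether the bot should offer a human.
  addMessage(text: string): boolean {
    this.recent.push(text.toLowerCase());
    if (this.recent.length > RECENT_WINDOW) {
      this.recent.shift(); // forget the oldest message
    }
    return this.score() >= OFFER_HUMAN_AT;
  }

  // Count negative-lexicon words across the recent window.
  score(): number {
    let hits = 0;
    for (const msg of this.recent) {
      for (const word of msg.split(/[^a-z']+/)) {
        if (NEGATIVE_WORDS.indexOf(word) !== -1) {
          hits++;
        }
      }
    }
    return hits;
  }
}

const tracker = new FrustrationTracker();
console.log(tracker.addMessage("When is my appointment?")); // no negative words
console.log(tracker.addMessage("That answer is wrong"));    // one hit, below threshold
console.log(tracker.addMessage("This bot is useless"));     // two hits in the window
```

Looking at a short window rather than a single message means one grumpy remark does not trigger an escalation, while repeated frustration does.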

Improvement #3 – Set Up Call Queues

Finally, your bot should not assume that a human operator is always available, as my simple example above does. You’ll need to give your bot a way to see who is available to help its human friend, and then either establish a call or wait for the right person to become available; in other words, you need a call queue system. Throughout this process, the bot needs to keep the human updated on their status, so they are not anxiously tapping their fingers on their computer.
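A minimal in-memory sketch of such a queue, assuming a single first-come, first-served line of customers and a pool of free agents. `CallQueue` and its methods are hypothetical; the point is that the bot always gets a status message to relay to the customer, whether or not an agent is free right away.

```typescript
// Sketch of a call queue between the bot and human agents.
// CallQueue and its methods are hypothetical names for illustration.

interface Assignment {
  customerId: string;
  agentId: string;
}

interface QueueUpdate {
  assignment: Assignment | null; // set when a call can start right away
  statusText: string;            // what the bot should tell the customer
}

class CallQueue {
  private waitingCustomers: string[] = [];
  private freeAgents: string[] = [];

  // A customer asks for a human.
  requestAgent(customerId: string): QueueUpdate {
    const agentId = this.freeAgents.shift();
    if (agentId !== undefined) {
      return { assignment: { customerId, agentId }, statusText: `Connecting you to ${agentId} now.` };
    }
    this.waitingCustomers.push(customerId);
    return {
      assignment: null,
      statusText: `All of our staff are busy. You are number ${this.waitingCustomers.length} in line.`,
    };
  }

  // An agent logs in or finishes a call; returns a new assignment if someone is waiting.
  agentAvailable(agentId: string): Assignment | null {
    const customerId = this.waitingCustomers.shift();
    if (customerId !== undefined) {
      return { customerId, agentId };
    }
    this.freeAgents.push(agentId);
    return null;
  }

  // 1-based position in line, or 0 if the customer is not waiting.
  positionOf(customerId: string): number {
    return this.waitingCustomers.indexOf(customerId) + 1;
  }
}

const queue = new CallQueue();
console.log(queue.requestAgent("patient-1").statusText); // no agents yet, so patient-1 waits
console.log(queue.requestAgent("patient-2").statusText);
console.log(queue.agentAvailable("agent-1"));            // agent-1 takes patient-1
console.log(queue.positionOf("patient-2"));              // patient-2 moves up
```

The bot can poll `positionOf` (or be notified when it changes) to send the “you are number N in line” updates described above.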

What will you do with WebRTC and bots?

Our team at WebRTC.ventures have become experts in a number of different WebRTC platforms, and we have built video apps for web and mobile clients in a variety of industries.

As a result, we’re always thinking about what the future of internet video communications will look like. I’m convinced that chatbots will play a role in that future, and experiments like this one help us explore it.

Do you need to integrate video chat and automated customer service together? Contact us and we’ll be glad to get you a quote!

Or maybe you’ve seen some other great examples of how humans and bots will conduct business together in the future … please send them to me or share them in the comments section. We’d love to share them with others!