Voice AI applications need real-time and reliable audio communication for natural conversations with AI customer service bots, virtual assistants, IVR platforms, and other voice-enabled systems. Choosing the appropriate transport protocol is crucial for teams, as using the wrong one can lead to choppy audio, noticeable delays, and dropped connections.

To support realistic conversational AI, the transport protocol must handle variable network conditions, secure media in transit, and deliver high-quality audio with minimal latency. In this post, we explain why WebRTC is the best transport protocol for real-time Voice AI architectures, how it fulfills these requirements, and in which scenarios it is preferable to alternatives like WebSockets for building Voice AI that sounds and feels natural at scale.

Why WebRTC Is Ideal for Voice AI

Voice AI interactions only feel natural when speech flows in real time without noticeable lag. That level of responsiveness depends as much on the transport layer as it does on the AI models themselves. WebRTC was designed for exactly this type of challenge.

WebRTC provides:

- Low latency. Peer-to-peer transport and optimized media protocols minimize round-trip delays, preserving conversational flow and enabling natural back-and-forth dialogue.

- AI-ready integration. WebRTC media streams can be routed in real time to AI services. This allows Voice AI applications to process user speech as it arrives and play back the reply as soon as an AI response is ready, supporting live conversational workflows.

- Reliability under varying network conditions. Automatic handling of jitter, packet loss, and congestion allows reliable communication even on unstable, slow, or mobile connections.

- Consistent audio quality. Adaptive bitrate streaming maintains clarity on constrained bandwidth.

- Security. Native encryption with DTLS and SRTP protects conversations in transit and helps meet data privacy requirements.

- Plug-and-play deployment. Supported natively across modern browsers and mobile platforms without requiring plugins.

- Scalability. Architectures using gateways and SFUs can support thousands of concurrent sessions for enterprise deployments.

- Built-in audio processing. Noise suppression and echo cancellation come integrated into browser WebRTC implementations, so there is no need to implement them by hand.

These qualities make WebRTC the most effective way to deliver smooth, secure, and reliable Voice AI applications, whether for customer service, travel assistants, or enterprise bots.
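To illustrate what "automatic handling of jitter and packet loss" means in practice, here is a deliberately simplified playout buffer in Python. It is a sketch under our own assumptions, not how any particular WebRTC stack is implemented: the `Packet` and `JitterBuffer` names and the empty-bytes `CONCEAL` marker are hypothetical, and sequence-number wraparound is ignored. Real implementations also adapt the buffer depth and synthesize audio for missing frames.

```python
from dataclasses import dataclass

CONCEAL = b""  # hypothetical marker meaning "frame missing, conceal it"


@dataclass
class Packet:
    seq: int        # RTP-style sequence number (wraparound ignored here)
    payload: bytes  # one encoded audio frame


class JitterBuffer:
    """Toy playout buffer: reorders packets and never waits for a lost one."""

    def __init__(self, first_seq: int = 0) -> None:
        self.pending: dict[int, bytes] = {}
        self.next_seq = first_seq

    def push(self, packet: Packet) -> bool:
        """Store a packet; return False if its playout slot has already passed."""
        if packet.seq < self.next_seq:
            return False
        self.pending[packet.seq] = packet.payload
        return True

    def pop(self) -> bytes:
        """Called once per playout tick; returns CONCEAL if the frame is missing."""
        frame = self.pending.pop(self.next_seq, CONCEAL)
        self.next_seq += 1
        return frame


buf = JitterBuffer()
for seq in (1, 0, 3):  # packets 0 and 1 arrive out of order, packet 2 is lost
    buf.push(Packet(seq, f"frame-{seq}".encode()))

frames = [buf.pop() for _ in range(4)]
late_accepted = buf.push(Packet(2, b"frame-2"))  # shows up after its slot
```

In this run the out-of-order packets are played in sequence, the missing frame is replaced by the concealment marker, and the late copy of packet 2 is discarded rather than delaying playback.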

When Should You Prefer WebRTC over Other Transport Protocols for Voice AI?

When building Voice AI applications, choosing the right transport protocol for media delivery is crucial. The two most common options are WebSockets and WebRTC. Both can provide low-latency media delivery, but they achieve it in fundamentally different ways:

- WebSockets: A WebSocket connection starts with an HTTP handshake and runs over TCP, giving a reliable, bidirectional connection. TCP guarantees that every packet is delivered, and delivered in order. While excellent for most data types, this reliability can be problematic for real-time media. For example, if a packet is lost, TCP holds back every packet behind it until the missing one is successfully retransmitted (head-of-line blocking), leading to issues like frozen video, robotic voice, and high latency.

- WebRTC: In contrast, WebRTC sends media as RTP over UDP, which prioritizes timeliness over guaranteed delivery. Packets are sent without waiting for each one to be acknowledged. This approach, while not ideal for all data, is a good match for real-time media. When a packet is lost, WebRTC does not stall the stream: playback continues with the packets that do arrive, and the receiver conceals the gap. This “fire and forget” approach ensures a smoother experience, even under fluctuating network conditions, as a single lost packet often goes unnoticed.
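To make the difference concrete, here is a small Python simulation of the two delivery models. It is a sketch, not a model of real TCP or RTP behavior: the 20 ms frame interval, 50 ms one-way delay, and 200 ms retransmission time are illustrative assumptions, and the function names are ours.

```python
from typing import Optional

FRAME_MS = 20        # one audio frame sent every 20 ms (assumed)
ONE_WAY_MS = 50      # assumed one-way network delay
RETRANSMIT_MS = 200  # assumed time to detect a loss and resend the packet


def reliable_ordered(n: int, lost: set[int]) -> list[int]:
    """Time (ms) at which each packet reaches the app over an ordered, reliable stream."""
    times = []
    released = 0  # when the previous packet was handed to the app
    for seq in range(n):
        arrival = seq * FRAME_MS + ONE_WAY_MS
        if seq in lost:
            arrival += RETRANSMIT_MS       # wait for the retransmission
        released = max(released, arrival)  # in order: cannot overtake earlier packets
        times.append(released)
    return times


def lossy_unordered(n: int, lost: set[int]) -> list[Optional[int]]:
    """Time (ms) at which each packet reaches the app when losses are skipped."""
    return [
        None if seq in lost else seq * FRAME_MS + ONE_WAY_MS
        for seq in range(n)
    ]


tcp_like = reliable_ordered(6, lost={2})
udp_like = lossy_unordered(6, lost={2})
```

With packet 2 lost, the ordered stream hands packets 2 through 5 to the application together at 290 ms, so packet 3 arrives 180 ms later than it would have otherwise. The lossy stream never delivers packet 2 but delivers packet 3 on schedule at 110 ms, leaving a single 20 ms gap to conceal.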

Why WebRTC Shines for Voice AI

Given that end-users often experience variable network conditions, Voice AI applications running on their devices gain significant advantages from WebRTC as a transport mechanism. Its ability to prioritize real-time delivery over absolute reliability minimizes the impact of packet loss, leading to a more consistent and natural user experience.

Furthermore, leveraging existing WebRTC implementations, whether bundled in browsers or provided by standalone libraries like Pion (Go) or aiortc (Python), simplifies development. These implementations often come with built-in noise suppression and echo cancellation capabilities, providing additional benefits for Voice AI applications right out of the box.

When WebSockets Can Still Play a Role

For Voice AI components running in controlled environments with excellent network conditions, such as cloud provider networks, the simplicity of WebSocket connections for media transport can still be advantageous. However, as custom, server-side WebRTC implementations mature, we may see increasing adoption of WebRTC in these scenarios as well, offering a consistent and robust solution across the entire Voice AI ecosystem.

WebRTC Voice AI Architecture

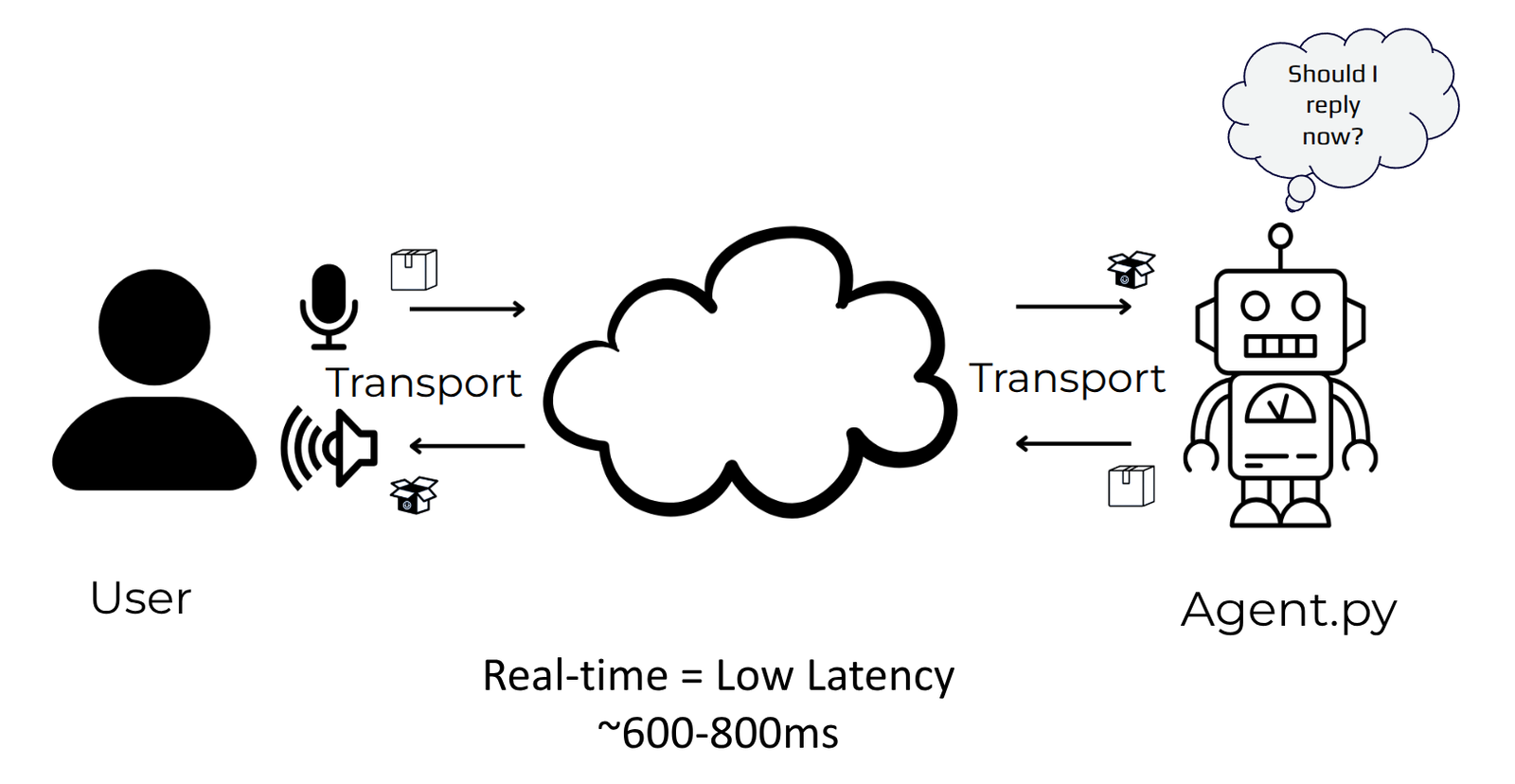

A typical Voice AI system using WebRTC as transport connects the client device to a cloud-based AI backend, either directly or through a WebRTC media platform. The platform routes media streams from client devices to AI agent backend services and back.

The architecture usually includes:

- A client device: Browser or mobile app with WebRTC support

- A WebRTC media platform: Routes media streams for AI processing

- An AI backend: Speech-to-text, intent recognition, and text-to-speech services, among others

- A signaling layer: Manages session establishment and control
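The roles of these components can be sketched in a few lines of Python. Everything here is a hypothetical stand-in for illustration: the class names, the string "offer" and "answer" (not real SDP), and the lambda-based speech-to-text, intent, and text-to-speech stubs are assumptions, and no actual WebRTC library is involved.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AIBackend:
    """Stand-in for speech-to-text, intent recognition, and text-to-speech."""
    stt: Callable[[bytes], str]
    intent: Callable[[str], str]
    tts: Callable[[str], bytes]

    def respond(self, audio: bytes) -> bytes:
        return self.tts(self.intent(self.stt(audio)))


@dataclass
class MediaPlatform:
    """Routes audio between established sessions and the AI backend."""
    backend: AIBackend
    sessions: set[str] = field(default_factory=set)

    def route(self, session_id: str, audio: bytes) -> bytes:
        if session_id not in self.sessions:
            raise KeyError(f"no established session: {session_id}")
        return self.backend.respond(audio)


@dataclass
class Signaling:
    """Handles session setup only; media does not flow through this layer."""
    platform: MediaPlatform

    def connect(self, session_id: str, offer: str) -> str:
        self.platform.sessions.add(session_id)
        return f"answer-for:{offer}"  # placeholder, not a real SDP answer


backend = AIBackend(
    stt=lambda audio: audio.decode(),         # pretend the audio is already text
    intent=lambda text: f"You said: {text}",  # trivial "intent" logic
    tts=lambda reply: reply.encode(),         # pretend text is audio
)
platform = MediaPlatform(backend)
signaling = Signaling(platform)

answer = signaling.connect("call-1", offer="client-offer")
reply_audio = platform.route("call-1", b"book a flight")
```

The point of the separation is that signaling only establishes the session, while the media platform is the component on the audio path between the client and the AI backend.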

WebRTC’s encryption layers (DTLS and SRTP) protect voice in transit, which is critical for both user privacy and regulatory compliance.

Example: A customer service voice bot built on WebRTC can handle thousands of concurrent calls while allowing natural interruptions and real-time responses that feel like speaking with a human agent.

Build Voice AI with WebRTC Expertise

Building Voice AI applications requires deep expertise in both WebRTC protocols and AI model integration. At WebRTC.ventures, our engineers have been at the forefront of this evolution, from the early days of connecting basic speech recognition to WebRTC streams, to today’s sophisticated integrations with LLMs and multimodal AI systems.

Whether integrating OpenAI’s Realtime API, open-source alternatives, or custom AI pipelines, we design, optimize, and deploy solutions that deliver natural conversational experiences with minimal latency at scale.

Do you need an MVP? A production-ready Voice AI application? Or consulting to support your team? We can help you build scalable, secure, and low-latency solutions. Contact us today!

Further Reading:

- How to Build Voice AI Applications: A Complete Developer Guide

- Scalable WebRTC VoIP Infrastructure Architecture: Essential DevOps Practices

- 3 Ways to Deploy Voice AI Agents: Managed Services, Managed Compute, and Self-Hosted

- Reducing Voice Agent Latency with Parallel SLMs and LLMs

- Rethinking UX: Emerging Interfaces for the AI Age

- Our Clients Succeed: AVA Intellect, Built with WebRTC.ventures, Acquired by Wowza