What is the future of AI in Voice applications, and how will it intersect with other communication application development? This is what I sought to learn at the Voice & AI conference for Natural Language and Generative AI in Washington DC September 5-7, 2023.

I recorded a video update from the conference, which you can watch below. It includes some views from the conference and glimpses of product demos that I saw from vendors covering everything from conversational AI applications to digital humans and synthetic voices. In this blog post, I’ll go into more depth on many of my observations in the video. I’ll also provide links to vendors and other content where you can learn more.

An Overview of Voice & AI 2023

The conference was held at the Washington Hilton in downtown Washington DC, and was organized by Pete Erickson and the MoDev group. In past years it was referred to as the Voice Summit. Pete and his team added the “& AI” tag this year, which made a lot of sense since so many Voice applications have AI built directly in.

In the Voice Summit I attended in 2022, there was a lot of talk about voice as a user interface and Amazon Alexa devices, and that’s still the case now. (For more about recent examples of Alexa devices, see my video update from the 2023 HIMSS conference, which shows demos of Alexa devices being used in hospitals and with WebRTC on Alexa Show devices.)

Voice Assistants on home devices are just one part of this growing industry, however. At the 2023 conference, there were demos of many different conversational AIs for many different customer contact use cases, as well as tools to help you integrate those with Large Language Models (LLMs) like ChatGPT for additional conversational capabilities. I’ve seen some of that at other conferences too, such as presentations that I saw at AWS Summit DC and AWS Summit NYC earlier this year. These conversational AI bots could be interacted with using text chat or voice interfaces, and the quality of these interactions has markedly improved over the last couple of years.

One area that stood out at this conference was the addition of synthetic voices and digital humans to the conversation. While these topics were also discussed at the 2022 conference by companies like Veritone and Ericsson, it seemed to me that the technology has advanced a lot in just the last year. I left the conference feeling much more excited about the use cases. More on that later in this blog post and in future posts I’ll be writing in the coming weeks.

Truly revolutionary technology that is rapidly maturing

One keynote speaker that I particularly want to call out is Robin S. from Estée Lauder. Robin spoke about their “Virtual Makeup Assistant” that uses an iOS app to help blind or visually impaired people put on makeup. It’s worth checking out a demo to fully appreciate it, but it’s an impressive combination of voice commands and video AI that helps users determine whether they have applied their makeup appropriately and provides advice to correct errors. For example, “your lipstick is misapplied on your upper left lip. We recommend you wipe the extra off.” It was a really cool example of how these innovative technologies could be combined to help an underserved market. Here’s a demo on YouTube of a blind person using the application. (Note: this is a different demo than the one Robin used in her presentation.)

In short, the conference was filled with many great conversations about truly revolutionary technology that is rapidly maturing. Many of the conversations I had were at the AI Happy Hour that our team at WebRTC.ventures sponsored along with our partners at Vonage. We had over 60 people stop by the Lauriol Plaza Mexican restaurant for cervezas, sangria, and margaritas. I got to see a lot of familiar faces and make new friends.

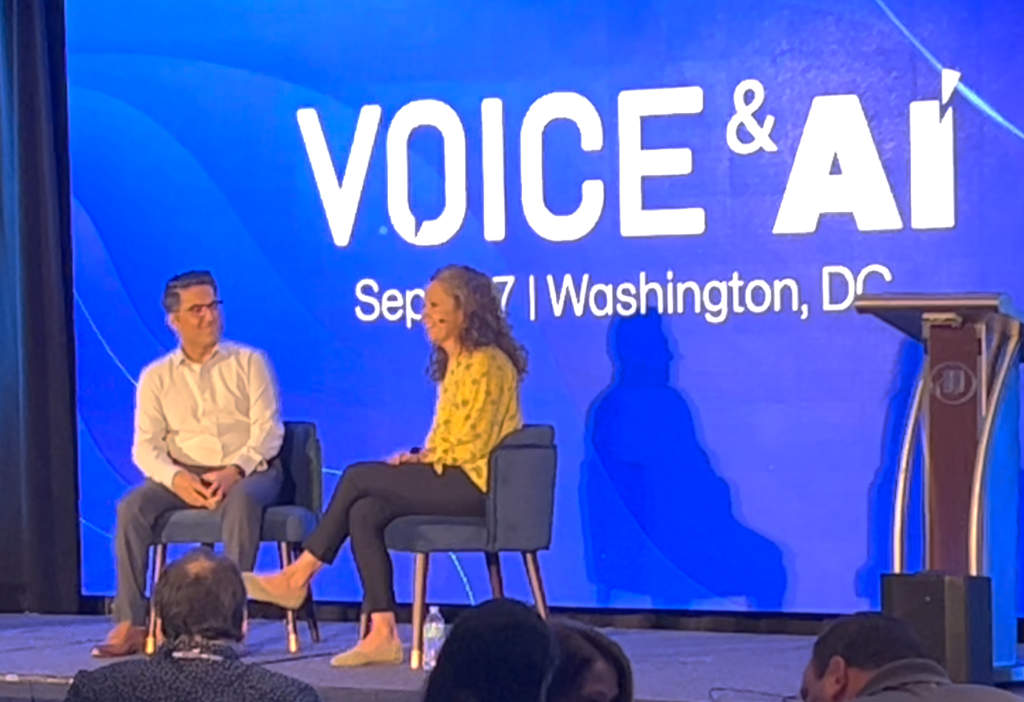

At the conference, I also had the opportunity to meet with many other industry partners and potential clients, including our partners from the Amazon Chime SDK. Vikram Modgil hosted a fireside chat with Jennie Tietema, who is the Principal Product Manager for the Amazon Chime SDK team. They discussed the impact of Generative AI on their work at AWS and how they support many of the innovative companies in this space.

The Fifth Industrial Revolution and its consequences

On Wednesday, the first keynote speaker was Ryan S. Steelberg, CEO of Veritone, who talked about Generative AI as the Fifth Industrial Revolution. Veritone gets a lot of mentions in this blog post as they were one of the larger vendors and impactful speakers at the conference. Ryan made a compelling case about the pace of acceleration and impact of Generative AI compared to past Industrial Revolutions.

I found the comparisons very interesting. The first industrial revolution discussed was the cotton gin, the most ethically problematic because of its relationship to slavery. According to some accounts, the inventor of the cotton gin, Eli Whitney, believed that the introduction of mechanization to agriculture would eliminate the need for as much labor and therefore help end slavery. The actual result was very different: the cotton gin extended slavery in the United States. It is the most brutal example of the unexpected and inhumane consequences of a technological revolution.

Similarly, the second industrial revolution described was the rise of mass production and factories in the late 1800s and early 1900s. While this raised overall living standards and brought on the modern consumption-driven society, it also created incredible environmental problems for which we are still struggling to find and adopt solutions.

It was both sobering and amazing to realize that I have lived through the subsequent three industrial revolutions! The fact that this is possible in a single lifetime illustrates the accelerating pace of change. The computer revolution began prior to my birth, but was still in full swing when I was born and later when I got my first TRS-80 computer in the early ’80s and began to learn to program. By the time I graduated from college in 1997, the internet revolution was beginning, and I was soon working with internet startups for the first time in the boom of 1999 and the bust of 2000.

Now here we are in the early stages of the Generative AI revolution. As our work at WebRTC.ventures continues to evolve and grow, we are both experiencing that change and innovating along with it, as all creative businesses must.

What will the consequences be of the Generative AI revolution? This is an open question, both in my opinion and from what I heard from others at the conference. Generally speaking, a conference like this is filled with techno-optimists. Most of the time that’s where I fall. But all technology can be used for good or bad outcomes. It’s healthy to remember that many people, including myself, were optimistic that the internet revolution would democratize information and make the world flatter. While that has been true, we can also clearly point to many societal problems that the internet has exacerbated, notably the spread of disinformation, the amplification of extremist ideologies, and the highly divided political landscape we live in now.

If you want to hear a range of opinions on the impact of the AI revolution, I recommend the Freakonomics podcast series. It just recently published a three-part series on AI and its potential positive and negative impacts on society.

You can also check out Episode 24 of my own Scaling Tech Podcast with MIT Professor Barb Wixom. The episode will be published on September 26, 2023. Subscribe to us on YouTube, Spotify or Apple and you’ll hear Barb talk about AI in the context of her new book “Data is Everybody’s Business” from MIT Press. Barb talks about the importance of acceptable data use, and other ethical issues such as the rights of employees if their company is using employee input and expertise to train an AI model.

Different Types of AI Applications

Another keynote speaker, Kim Rees from JP Morgan, mentioned three different types of AI applications in her talk:

AI Under the Hood

These are applications where AI is being used to benefit the customer or company, but it’s not apparent to the customer. She gave the example of credit card fraud detection, where the customer doesn’t see that AI is being used behind the scenes to help determine if a particular transaction may be fraudulent. I would describe this as the work of much of traditional data science in large enterprises.

AI Woven In

These are applications where AI is woven into the product to add value, but in a way that is more visible to the user. She gave an example of automatic generation of photo albums based on your smartphone photos. AI could be used to categorize those photos and is used to help you search for photos with particular objects or animals in them.

AI as the Product

This final category applies to many of the vendors at the Voice & AI conference. They are selling APIs and tools that make it easier for application developers to weave AI into their product without being a data scientist. This is very beneficial to product designers, system integrators, and software developers like our team at WebRTC.ventures.

Based on Kim’s description, our work at WebRTC.ventures falls under the second category: AI woven into products. This brings me to our role in the rapidly growing AI economy.

Our Role in the AI Marketplace

Like any business, my company is evolving. I started AgilityFeat in 2010 as an agile consulting business. I quickly pivoted to building nearshore Ruby on Rails development teams in Latin America with my business partner in Costa Rica, David Alfaro. That part of the company is still running well today and operating under the AgilityFeat brand.

In 2015, I spun off another brand, WebRTC.ventures, to build a team specializing in building and managing custom live video and communications applications. Along with my executive team of COO Mariana Lopez and CTO Alberto Gonzalez, we continue to build custom live video applications for a wide range of industries. This includes contact centers, EdTech, Telehealth, Interactive Broadcasting, corporate collaboration, and more. We are leading experts in the integration of WebRTC-based voice and video, and we’ve worked with all the major CPaaS and open-source projects in the space.

While all those things continue, WebRTC.ventures is at a major inflection point. We continue to be the leading integrators of voice and video into real time applications, but now various forms of AI are also needed by our most innovative clients. We build in services like Symbl.ai to add transcription to live conversations or recordings, as well as call summaries and other data. This helps contact center agents be more efficient in their work and generates enterprise-level insights from the live conversations that our applications facilitate.

It’s also increasingly common that we will build conversational AI chatbots into our clients’ solutions. This could be to enable automation of many customer interactions, such as airline customer service. Or it might be to build telehealth visit pre-screening questions into a bot so that medical professionals have all the information they need before a remote medical visit. We can work with partners like NLX.ai and Vonage AI Studio for these capabilities. You’ll hear more about our growing expertise in these areas on our blog in the coming months.

We can build these Conversational AI solutions as a stand-alone product. But where our team’s expertise especially shines is combining Conversational AI with the communications application experience that we already have in various modalities including video, SMS, voice and text messaging.

We can make your chatbot multimodal or we can enable your chatbot to transfer to human agents in certain high touch situations. In the next section, I’ll talk more about that.

Conversational AI and Human Agents

In the Freakonomics podcast series on AI mentioned above, there is an interesting insight from economist Simon Johnson. The second episode in that series tackles the question of whether society should be afraid of AI, and whether it’s really different from any other technology that is often scary at first.

In order to minimize the negative consequences of AI, Simon suggests that we need to reframe the conversation. Instead of stating that our goal is to build an AI which is better at chess than humans, we should be talking about how AI can make humans better at chess. The important distinction here is to look at things from a perspective of improvement, rather than replacement.

I like that perspective. It fits well with what I said above about our team’s sweet spot in the AI landscape and with industries such as contact centers in particular. In my view, Conversational AI is phenomenal at augmenting customer service agents. Conversational AI chatbots can be used up front in customer service to help ease the burden on call centers by answering the easiest questions, in the same way that an IVR system reduces the number of calls to a receptionist. Let the humans do the interesting stuff, and let the AI take the repetitive questions.

AI is inherently limited by the data on which it is trained. There will always be scenarios where the chatbot has reached its limits and the customer should be transferred to a human agent. Transferring to a human agent is a great place to utilize our expertise in communications applications.

We can help you configure the Conversational AI to know when to transfer the conversation to an agent, and then to facilitate that transfer over video, voice, or text/SMS. For an example of how to build a “Click to Call” application that uses WebRTC to connect a customer with a traditional telephony call center, you can check out my interview with Court Schuett on WebRTC Live, about building Click to Call applications with the Amazon Chime SDK.
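To make the “know when to transfer” idea a bit more concrete, here is a minimal sketch in Python of what an escalation rule could look like. Everything in it is an assumption for illustration: the `BotTurn` fields, the `should_transfer` function, the keyword list, and the thresholds are hypothetical and not taken from any particular Conversational AI platform, which will typically expose its own signals for this.

```python
from dataclasses import dataclass

# Hypothetical keywords; a real bot platform would use intent detection.
HANDOFF_PHRASES = ("human", "agent", "representative")


@dataclass
class BotTurn:
    user_text: str         # what the customer just said or typed
    bot_confidence: float  # assumed 0.0-1.0 score reported by the bot
    failed_attempts: int   # consecutive turns the bot could not resolve


def should_transfer(turn: BotTurn,
                    min_confidence: float = 0.6,
                    max_failed_attempts: int = 2) -> bool:
    """Return True when the conversation should go to a human agent."""
    text = turn.user_text.lower()
    # 1. The customer explicitly asks for a person.
    if any(phrase in text for phrase in HANDOFF_PHRASES):
        return True
    # 2. The bot is unsure of its own answer.
    if turn.bot_confidence < min_confidence:
        return True
    # 3. The bot has already failed several times in a row.
    return turn.failed_attempts >= max_failed_attempts
```

In practice the thresholds would be tuned per use case, since a telehealth pre-screening bot probably should hand off sooner than an airline FAQ bot.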

Synthetic Voice

Most of the time when someone uses the phrase ‘Conversational AI’, they are referring to written conversations, i.e., text chats with a chatbot. But what if Conversational AI were spoken instead of written?

That’s one of the use cases that Synthetic Voice can enable. Synthetic Voice is a model of a real human’s voice. Using that model, you can give it written text to speak and it will produce audio that is virtually indistinguishable from the real human’s voice. The model could be of a celebrity’s voice, or a more typical person like you or me. As long as the real human’s consent is given for creation of that synthetic voice, it doesn’t really matter.

Veritone specializes in building synthetic voices. When I was speaking with them at their booth during the Voice & AI conference, a Veritone employee mentioned to me that they were doing free recording sessions for conference attendees who wanted to have their Synthetic voice generated.

I couldn’t resist, so I signed up for a slot. All I had to do was step into a recording booth and read a script for about 5-10 minutes. This script included dialogue designed by Veritone so they could hear different types of syllables and inflections from me. Just a few days later, they sent me a sample of my synthetic voice and it was incredibly interesting. I’ll save the details for a future blog post. For now you’ll have to trust me that it’s pretty impressive.

IVR systems have used recorded voices for a long time. We’ve grown accustomed to GPS navigation using somewhat robotic voices. A synthetic voice could be used in those scenarios as simply an improved bot voice, but there are many more possibilities. I’ll also discuss those possibilities more in a future blog post. For now just think about how impressive it would be if you could interact with a chatbot over a voice connection of any kind and it sounded like a real human, in the language and accent you would expect for that company.

Digital Humans

There were also a couple of interesting companies at the Voice & AI conference talking about digital humans. When I attended the 2022 conference, Ericsson talked about their digital humans project. I met another company this year, Virtually Human, who had a demo of their digital humans going at their booth. Basically, a digital human is a very realistic avatar. While it’s still very obviously a two-dimensional digital representation of a human, the quality is very high and it is able to show very realistic movements in its face and body as it speaks the audio that you feed it.

What good is a digital human? Our CTO Alberto Gonzalez wrote a blog post recently showing a cool but relatively simple demo of animating an avatar based on his live voice over a WebRTC connection. The avatar he uses is from an open-source project and is not of the same visual quality as the digital humans I saw at the conference. It is still a good representation of one use case for digital humans and avatars: The Metaverse.

Alberto’s blog post is based on a metaverse use case where your avatar is walking around a virtual world, able to interact with other avatars which may also represent real humans or even bots. The “interaction” in many cases might be text chat based, but what if instead your avatar could use your actual voice, over a live audio connection? That is possible using a WebRTC audio channel and an avatar, as Alberto demonstrates.

There are many other use cases besides the metaverse for the more visually sophisticated digital humans I saw at the Voice & AI conference. I was told that research shows customers react more positively to a Conversational AI chat that is paired with a digital human than to one without the visual representation. We are more likely to be satisfied with the customer service experience if it appears to be a real human, even though we know it’s just a digital avatar. I don’t have the research to point to, but after I caught myself reflexively smiling back at digital humans who smiled at me in the demo, it makes sense to me that we cannot help but establish a more positive connection with something that looks more human.

A Possible Future for Voice and AI

We’ve covered a lot so far! Let me wrap it up with one possible way all these technologies end up working together.

Imagine you are logged into the app or website for your car insurance provider. You have just bought a new car and sold your older one. You’re trying to replace the car on the policy, but you’re having trouble. Something like this actually happened to me last year. While my issue was ultimately resolved via text chat with a human agent, it was a frustrating process.

Imagine instead that when I first clicked on that “help” link to access customer service, a digital human appeared. She is very recognizable and appears to be the same person who I have seen on their TV commercials before. Let’s call her “Flo” for the sake of argument.

To my surprise, Flo’s digital representation begins speaking to me. It’s in the exact voice I recognize from the TV commercials. Not only that, but she speaks my name and asks about the vehicle I was trying to remove.

“Arin, I see that you are trying to remove your 2010 Subaru Forester from your policy. What’s up, have you got some new wheels?” As Flo’s digital human says this, she laughs and gives me a thumbs up.

As annoyed as I am at the problem I’m having, I can’t help but smile back and chuckle at Flo. I type my answer back to Flo, or maybe the website even allows me to speak to her over a WebRTC audio connection, with my speech then transcribed to text and fed back to the Flo AI.
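For the developers reading along, one turn of that spoken exchange can be thought of as a small pipeline: speech to text, text to a bot reply, and the reply back to speech in a synthetic voice. Here is a rough Python sketch of that flow. The function names and the toy “fake” implementations are entirely hypothetical stand-ins so the example runs on its own; a real system would swap in a speech-to-text service, a Conversational AI platform, and a synthetic voice API.

```python
from typing import Callable

# Hypothetical type aliases for the three services in the pipeline.
Transcriber = Callable[[bytes], str]
Bot = Callable[[str], str]
Synthesizer = Callable[[str], bytes]


def handle_voice_turn(audio_in: bytes, transcribe: Transcriber,
                      bot: Bot, synthesize: Synthesizer) -> bytes:
    """Run one customer turn: speech -> text -> bot reply -> speech."""
    text = transcribe(audio_in)   # customer audio becomes text
    reply = bot(text)             # the conversational AI answers in text
    return synthesize(reply)      # the answer is spoken in a synthetic voice


# Toy stand-ins that treat the "audio" as UTF-8 bytes, for illustration only.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_bot(text: str) -> str:
    return f"You said: {text}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")
```

Passing the three services in as parameters is just one design choice; it keeps the turn logic independent of whichever vendors end up behind each step.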

I’m able to partially resolve my issue with Flo, but we run into another problem which Flo’s conversational AI is unable to resolve. The Flo digital human shrugs her shoulders apologetically, and says to me in her synthetic voice, “I’m sorry Arin, I just can’t seem to figure out this last part. I’m not sure why we can’t add your new vehicle to the policy. Maybe the real Flo could do it – I am just a bot after all!” Flo’s digital human laughs again and offers to transfer me to a human agent.

After a short pause, I’m connected with a human agent via an audio connection over WebRTC, directly in my browser in the same window in which I’ve been speaking with Flo. We could have done this by text chat instead, but that is much more frustrating and I already was used to interacting with the Flo conversational AI by voice. So an audio connection will be better. We could also use a video connection if the agent needs to see some of my vehicle paperwork, but we’ll start with audio to keep it simple. If I had been reporting damage to my vehicle or making a claim, then a video connection would definitely have been the way to go.
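The channel reasoning in this part of the story can be written down as a simple rule: stay on the modality the customer was already using, and step up to video only when the agent needs to see something. The sketch below is a hypothetical illustration in Python; the `Channel` enum, the `pick_handoff_channel` function, and the `needs_visual` flag are my own assumptions, not part of any SDK.

```python
from enum import Enum


class Channel(Enum):
    TEXT = "text"
    AUDIO = "audio"
    VIDEO = "video"


def pick_handoff_channel(bot_channel: Channel,
                         needs_visual: bool = False) -> Channel:
    """Choose how to connect the customer to a human agent.

    bot_channel is how the customer was talking to the bot.
    needs_visual is a hypothetical flag for cases like damage reports,
    claims, or paperwork the agent has to see.
    """
    if needs_visual:
        return Channel.VIDEO
    # Otherwise keep the modality the customer is already used to.
    return bot_channel
```

With this rule, my Flo conversation by voice would hand off to an audio call, while a damage claim would go straight to video.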

After a conversation with the real human agent, we are able to determine that I misunderstood where to find the vehicle ID number that I was supposed to type in. My new vehicle has been added to my policy, and the human agent congratulates me. On the human agent’s dashboard, an AI agent assist application also lets him know that this is the first new car I’ve added to the policy in over 10 years. So the agent also congratulates me on getting another vehicle for the first time in a while.

Since the Flo conversational AI was never a real human anyway, maybe she never even left! There can be thousands of Flos working with other customers in parallel. So just for extra fun and marketing value, the Flo bot is still there and says “Hey Arin, I’m glad we could help. Enjoy that new car and don’t drive it anywhere I wouldn’t!” Both the human agent and the Flo bot say goodbye and I leave the call.

Maybe I leaned into this example too much. But you can see how a really unique, brand-consistent, and maybe even fun customer experience can be created by the combination of Conversational AI, Synthetic Voices, Digital Humans, and WebRTC connections to real human agents when an issue needs to be escalated.

While ultimately I was able to resolve that car insurance problem I had last year via multiple text chats, I can assure you that it would have been a much more fun and positive experience if it had worked out like my hypothetical scenario above.

The Intersection of Voice, Video, and AI

I have more to say about the intersection of Voice, Video and AI, but this is enough for now. Suffice it to say, I really enjoyed the Voice & AI conference and I walked away with lots of ideas for the type of work we are doing at WebRTC.ventures!

If you want to learn more, I have two suggestions for you. The first is to join me in Paris, France on October 19 and 20, 2023! I’ll be at the TADSummit moderating a panel discussion on the intersection of Voice, Video and AI. You can bet that we’ll be talking about cutting edge techniques, and it’s a great excuse to visit Paris.

The second is a bit simpler: Contact our team at WebRTC.ventures! Just fill out our contact form and we’ll get back to you quickly to learn about your unique use case. We are here to Build, Assess, Integrate, Test, and Manage your custom WebRTC-based communications application. We would love to work with you on integrating unique applications of Conversational AI and Generative AI into your application, and building better customer experiences!