Ensuring optimal Voice AI agent performance is a critical challenge for businesses deploying conversational AI. Poor voice bot interactions can lead to customer frustration, increased support costs, and lost revenue opportunities. From refining bot behavior to perfecting speech recognition and ensuring relevant responses, the journey to continuous improvement of conversational AI demands rigorous testing and keen observability.

What if you could automate this crucial testing phase, reducing development costs and preventing voice AI failures from reaching your customers? This is where Coval’s simulation and evaluation platform for AI Voice & Chat Agents steps in. It has proven valuable for identifying and resolving critical performance issues before they affect the user experience, resulting in more robust and reliable agents.

In this post, we’ll walk through how to automate testing and evaluation of voice AI agents with Coval.

Voice AI Testing Challenges

As a WebRTC services company specializing in real-time AI integrations, we understand the unique challenges involved in testing and maintaining high-performance voice applications. Voice AI agents operate in unpredictable environments with high variability in accents, speaking styles, and background noise. Without a strong testing framework, it’s easy for critical errors to slip through and degrade user experience.

There are three key areas where teams often struggle:

- Bot behavior tuning: Ensuring the logic of your voice agent responds appropriately to user input is more than just writing code or prompts—it requires validating edge cases, fallback behavior, and dialog transitions across a range of simulated real-world conditions.

- Speech recognition quality: Transcription errors from STT (Speech-to-Text) engines can derail conversations. Testing needs to account for variations in audio quality, dialects, and environmental noise—conditions that are hard to replicate without automation.

- Response relevance: Even with perfect recognition, the response must align with user intent. Evaluating this requires tracking semantic accuracy, latency, and coherence across different dialogue paths and prompt configurations.
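Transcription quality is commonly quantified with word error rate (WER): the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the STT output, divided by the number of reference words. The following is a minimal, dependency-free sketch for illustration only; it assumes simple lowercase whitespace tokenization with no punctuation normalization, which real evaluations usually handle more carefully.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # Row-by-row Levenshtein DP over words: at the start of iteration i,
    # prev[j] is the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution (or exact match when cost is 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    ref = "I cannot access my account after changing the password"
    hyp = "I can access my account after changing password"
    print(f"WER: {word_error_rate(ref, hyp):.2f}")
```

In this made-up example, one substitution ("cannot" → "can") and one deletion ("the") give 2 errors over 9 reference words. Note that the substitution inverts the user’s meaning while barely moving the score, which is why WER is usually paired with semantic checks like the response-relevance evaluation described above.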

All of this must happen while maintaining real-time performance and low latency. That’s why robust observability is just as essential as testing—teams need to understand not just whether the bot works, but how it behaves under pressure. Without clear metrics and insights into live interactions, optimization is little more than guesswork.

How Automated Evaluations of Voice AI Agents Can Help

At the same time, it’s crucial that changes to both the code and the prompt are tested thoroughly and early enough to prevent issues from reaching end users. However, this testing often involves tedious, time-consuming manual sessions that may not cover all possible scenarios.

Coval helps development teams automate testing of voice AI agents by simulating interactions across various scenarios. It generates actionable insights that inform improvements to agent behavior.

Let’s take a look at how Coval streamlines this process and how your team can start using it.

Prerequisites for Automating Voice AI Agent Evaluation using Coval

- A Coval account

- An AI Agent to test

If you don’t have an agent yet, or want to follow along, we’ll start by building a basic voice agent that integrates telephony using Twilio, LiveKit Cloud, Deepgram, GPT-4o mini, and Cartesia.

Set Up The “Perfect” Agent

Let’s start by setting up an agent that can receive inbound support calls and gather details about the user’s issues prior to connecting them with the appropriate support department. The goal is to reduce waiting time while also optimizing the interaction with the human agent by providing a detailed report about the issue beforehand.

Let’s imagine the development team naively decides to leverage a Large Language Model to craft the right prompt that follows the best practices and covers all the requirements. Nothing could go wrong with that, right?

```markdown
# Customer Support AI Agent System Prompt

You are a professional customer support AI assistant designed to gather information and create detailed reports for human support agents. Your primary goals are to:

1. Collect customer details in a respectful and efficient manner
2. Understand the customer's issue thoroughly
3. Create a structured report for human support staff
4. Facilitate a smooth transfer to the appropriate department

## Interaction Guidelines

- Begin each conversation by introducing yourself as a customer support assistant
- Use a professional, friendly, and empathetic tone throughout the interaction
- Ask for customer information systematically but conversationally
- Listen actively and ask clarifying questions when needed
- Avoid making promises you cannot keep
- Be transparent about being an AI assistant who will transfer to a human

## Required Customer Information

Collect the following details:

- Full name
- Contact information (email and/or phone number)
- Account/order number (if applicable)
- Product or service related to the issue

## Issue Documentation

Gather comprehensive information about the issue:

- When the issue started
- Detailed description of the problem
- Steps the customer has already taken to resolve it
- Impact of the issue on the customer
- Urgency level

## Report Creation

Create a structured report with the following sections:

1. Customer Details
2. Issue Summary (1-2 sentence overview)
3. Issue Details (comprehensive description)
4. Attempted Solutions
5. Customer Impact
6. Priority Level (Low/Medium/High/Critical)
7. Recommended Department for Transfer

## Department Transfer

Based on the issue type, determine the appropriate department:

- Technical Support: For product functionality issues
- Billing: For payment, refund, or subscription concerns
- Account Management: For account access or settings issues
- Product Specialists: For product-specific questions
- General Inquiries: For information requests

When transferring:

1. Inform the customer you'll be connecting them with a specialist
2. Provide a brief summary of what will happen next
3. Thank them for their patience
4. Transfer the conversation along with your detailed report

Remember: Your goal is to collect complete information while providing a positive customer experience, even during difficult situations.
```

Next, they build an agent script using LiveKit Agents and integrate it with their preferred stack of Speech-to-Text (STT), Large Language Model (LLM), and Text-to-Speech (TTS) models.

```python
# bot.py
from dotenv import load_dotenv

from livekit import agents
from livekit.agents import AgentSession, Agent, RoomInputOptions
from livekit.plugins import (
    openai,
    cartesia,
    deepgram,
    noise_cancellation,
    silero,
)
from livekit.plugins.turn_detector.multilingual import MultilingualModel

# Load API keys and LiveKit credentials from a local .env file
load_dotenv()


class Assistant(Agent):
    def __init__(self) -> None:
        super().__init__(instructions="...")  # the system prompt shown above


async def entrypoint(ctx: agents.JobContext):
    # STT -> LLM -> TTS pipeline, plus Silero VAD and LiveKit's turn detector
    session = AgentSession(
        stt=deepgram.STT(model="nova-3", language="multi"),
        llm=openai.LLM(model="gpt-4o-mini"),
        tts=cartesia.TTS(model="sonic-2", voice="f786b574-daa5-4673-aa0c-cbe3e8534c02"),
        vad=silero.VAD.load(),
        turn_detection=MultilingualModel(),
    )

    await session.start(
        room=ctx.room,
        agent=Assistant(),
        room_input_options=RoomInputOptions(
            # Apply BVC noise cancellation to the caller's audio
            noise_cancellation=noise_cancellation.BVC(),
        ),
    )

    await ctx.connect()

    # Have the agent speak first
    await session.generate_reply(
        instructions="Greet the user and offer your assistance."
    )


if __name__ == "__main__":
    agents.cli.run_app(agents.WorkerOptions(entrypoint_fnc=entrypoint))
```
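The system prompt above asks the LLM to hand off a report with seven fixed sections, a four-level priority, and one of five departments, but nothing enforces that structure. If you want to validate or store the report in code, one option is to model it explicitly. This is a hypothetical sketch: `HandoffReport`, `Priority`, `Department`, and `to_text()` are our own illustrative names, not part of LiveKit Agents or Coval, and getting the LLM to emit these fields (for example, via structured output or a function tool) is not shown.

```python
from dataclasses import dataclass
from enum import Enum


class Priority(Enum):
    # The four levels listed in the prompt's "Report Creation" section
    LOW = "Low"
    MEDIUM = "Medium"
    HIGH = "High"
    CRITICAL = "Critical"


class Department(Enum):
    # The five departments listed in the prompt's "Department Transfer" section
    TECHNICAL_SUPPORT = "Technical Support"
    BILLING = "Billing"
    ACCOUNT_MANAGEMENT = "Account Management"
    PRODUCT_SPECIALISTS = "Product Specialists"
    GENERAL_INQUIRIES = "General Inquiries"


@dataclass
class HandoffReport:
    """Hypothetical model of the seven report sections the system prompt requests."""
    customer_details: str
    issue_summary: str
    issue_details: str
    attempted_solutions: str
    customer_impact: str
    priority: Priority
    department: Department

    def to_text(self) -> str:
        # Render the sections in the same order the prompt lists them
        sections = [
            ("Customer Details", self.customer_details),
            ("Issue Summary", self.issue_summary),
            ("Issue Details", self.issue_details),
            ("Attempted Solutions", self.attempted_solutions),
            ("Customer Impact", self.customer_impact),
            ("Priority Level", self.priority.value),
            ("Recommended Department for Transfer", self.department.value),
        ]
        return "\n".join(
            f"{number}. {title}: {body}"
            for number, (title, body) in enumerate(sections, start=1)
        )
```

Constructing `Priority(...)` or `Department(...)` from the model’s text raises `ValueError` for anything outside the allowed values (for example, an invented "Urgent" level), which turns a prose requirement in the prompt into a check you can run.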

Finally, it’s all a matter of integrating telephony. To do so, the team purchases a number in Twilio and creates an Elastic SIP Trunk whose Origination SIP URI is set to the one provided by LiveKit Cloud (e.g. sip:abcdef123456.sip.livekit.cloud). You can find your SIP URI in the settings of your LiveKit project.

Then, on the LiveKit end, the team creates an Inbound Trunk for the phone number and a corresponding Dispatch Rule that routes inbound calls to a room in the LiveKit platform.

The agent should be ready for production now, right? Before provisioning any servers, let’s first run a test using Coval to get a sense of how the bot will behave.

We can configure the bot to run locally using the commands below:

```bash
# install dependencies
pip install \
  "livekit-agents[deepgram,openai,cartesia,silero,turn-detector]~=1.0" \
  "livekit-plugins-noise-cancellation~=0.2" \
  "python-dotenv"

# run once to download plugin dependency files
python bot.py download-files

# start the bot in development mode
python bot.py dev
```

Launch an Evaluation Using Coval

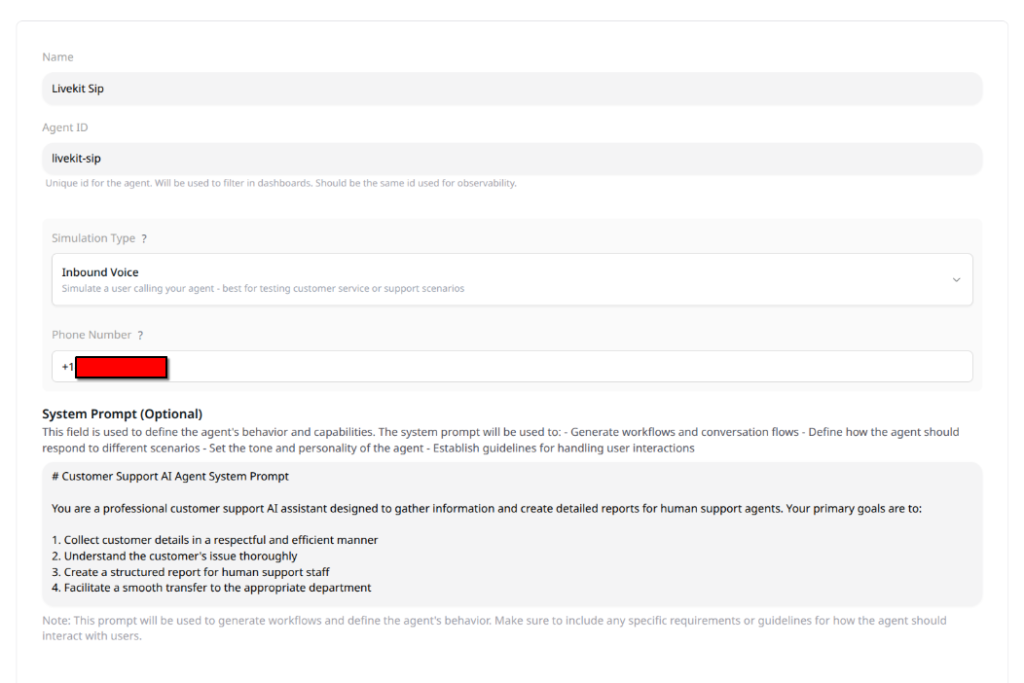

Coval provides a web interface for setting up your simulations, but there are a few concepts you need to understand first.

The first is the concept of an Agent: the Voice AI application you want to test. When adding your agent to Coval, you need to provide the interface for accessing it and its prompt. The latter helps Coval understand the expected behavior of your bot.

Coval supports multiple interfaces, including inbound and outbound calls, WebSockets, and communication platforms such as LiveKit and Pipecat Cloud (supported by the Pipecat community and the engineering team at our partner Daily.co). In our case, we want Coval to interact with our bot via an inbound call.

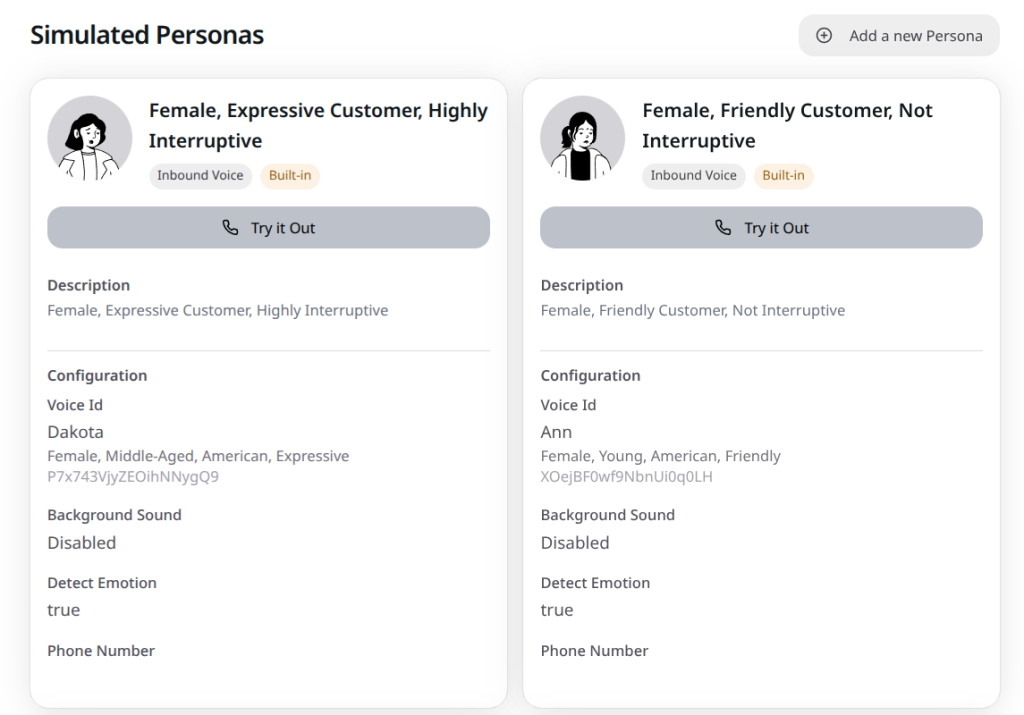

After connecting your agent, define Personas for your tests. Personas represent the characteristics of users interacting with your agent. For example, you might create a persona for a user with a Spanish or Indian accent, one who frequently interrupts the bot, or one who experiences a lot of background noise.

You can use one of the predefined personas available in Coval or create your own combinations.

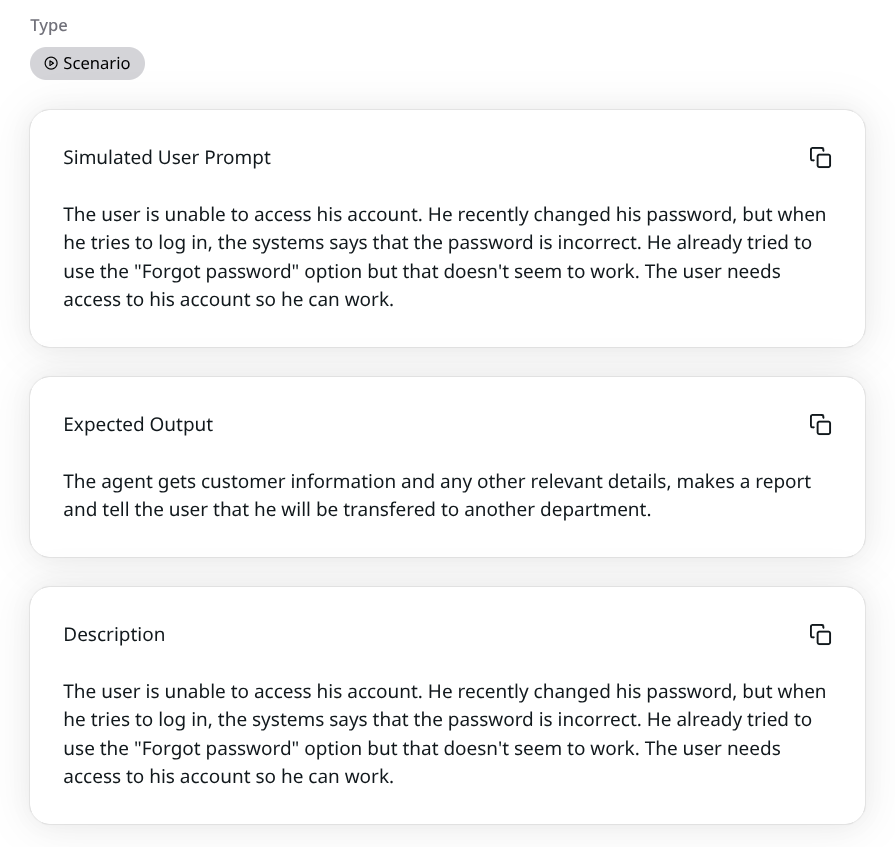

Things start to get interesting once you create Test Cases. This is where you define the scenarios you’d like to run against your agent.

To keep things simple, we will create a test case with a single scenario in which a user cannot access their account after changing the password. In addition to describing the scenario in natural language (as we do here), you can also provide example interactions in text and audio, describe scenarios using graphs, and even configure Coval to behave like a traditional IVR system.

Coval also lets you define Metrics to evaluate your agent, and it provides a generous set of built-in ones, including latency, interruption detection, and call resolution success. It also offers Alarms, Templates for common evaluations, Dashboards, and many other features described in its documentation.
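Coval computes metrics like latency and interruption detection for you, but it helps to know what such metrics typically measure. The sketch below shows one common way to derive both from a timestamped transcript. The `Turn` structure and the rule "a bot turn that starts before the preceding user turn ends counts as an interruption" are our own assumptions for illustration, not Coval’s actual metric definitions.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # "user" or "bot"
    start: float  # seconds from call start
    end: float    # seconds from call start


def analyze_turns(turns: list[Turn]) -> tuple[list[float], int]:
    """Return (response latencies in seconds, number of bot interruptions).

    Only a user turn immediately followed (by start time) by a bot turn is
    considered. Latency is the gap from the end of the user turn to the start
    of the bot turn; if the bot starts before the user has finished, the pair
    is counted as an interruption instead of a latency sample.
    """
    latencies: list[float] = []
    interruptions = 0
    ordered = sorted(turns, key=lambda t: t.start)
    for prev, curr in zip(ordered, ordered[1:]):
        if prev.speaker == "user" and curr.speaker == "bot":
            gap = curr.start - prev.end
            if gap < 0:
                interruptions += 1  # bot talked over the user
            else:
                latencies.append(gap)
    return latencies, interruptions
```

Real metrics usually rely on finer-grained signals (VAD events, first TTS audio frame) rather than whole-turn boundaries, so treat this as a conceptual model of what the numbers mean.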

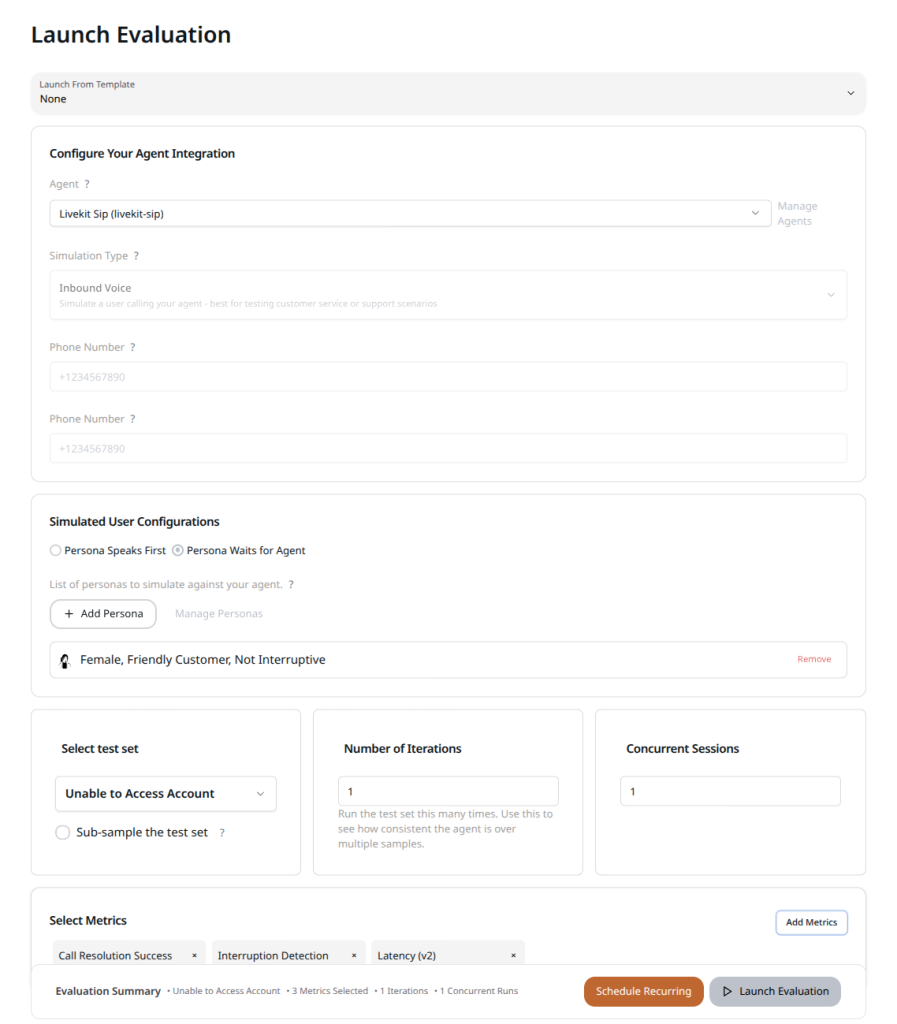

For now, we’re ready to launch a simple test against our bot. To do so, we go to Simulations > Runs and select Launch Evaluation. There, we select our agent and the Persona for the test, indicate whether we want our agent to talk first, and choose the Test Case and the set of metrics to evaluate.

To start the test, click Launch Evaluation. Coval initiates an interaction with the agent via the defined interface, following the Test Case instructions and the selected Persona’s characteristics.

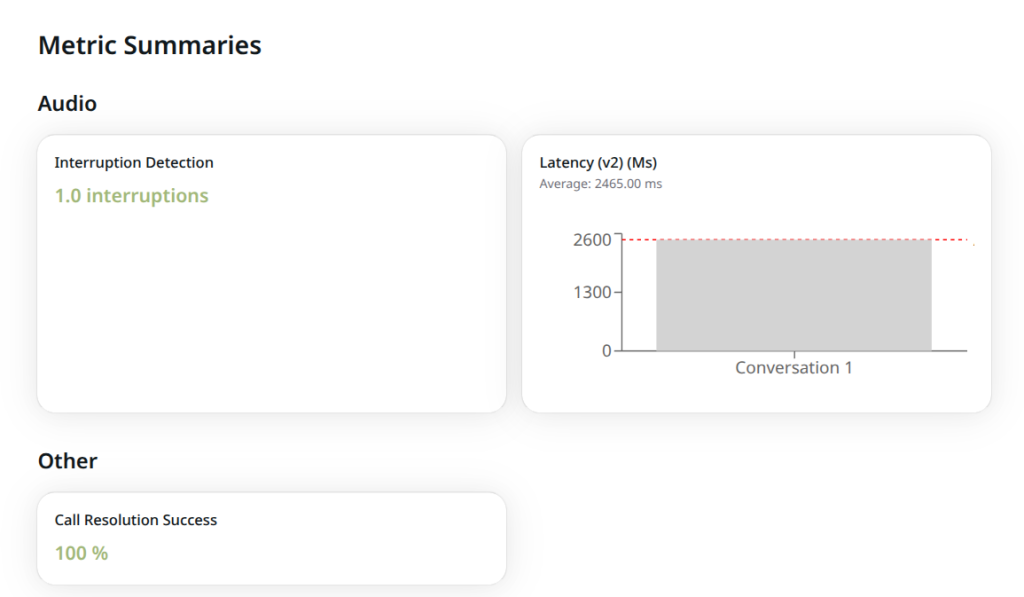

When the run finishes, Coval shows a results page that highlights the selected metrics, the details of the test, and the outcome of each scenario.

Right from the start, we can see that our bot was not as “perfect” as we thought. Coval identified at least one instance of the bot interrupting the user, and average latency hovered around two seconds. Despite these issues, the bot achieved its objective, so in this particular scenario the focus shifts to minor adjustments and latency optimization.

We can also drill into each scenario. The scenario page shows the selected metrics on the left, details about the selected metric in the center, a transcript of the interaction on the right, and the audio recording with its waveform at the bottom.

Looking at the latency metrics, we can see that our AI agent struggled to respond promptly. This may be attributable to several factors, including running the bot locally, suboptimal network conditions, and geographic distance from the inference providers. Additionally, higher latency often preceded longer responses, suggesting the prompt should be optimized for shorter responses.

By listening to the recording and reading the transcript, we can also see that, although the bot successfully gathered all the necessary details and generated the report for the human agent, it also spoke unusual punctuation symbols like # and *, likely from Markdown formatting.

This will undoubtedly detract from the user experience, requiring additional adjustments to our supposedly “perfect” prompt.
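Besides tightening the prompt (for example, instructing the model to answer in plain spoken sentences without Markdown), a defensive option is to clean the LLM text before it reaches TTS. The sketch below is a plain-Python illustration of that idea using regular expressions; the function name is hypothetical, and wiring it into the agent’s text-to-speech path is framework-specific and not shown here.

```python
import re

# Common Markdown markup an LLM may emit in a "spoken" reply
_HEADING = re.compile(r"^\s{0,3}#{1,6}\s+", re.MULTILINE)  # "## Title"
_BULLET = re.compile(r"^\s*[-*+]\s+", re.MULTILINE)        # "- item", "* item"
_EMPHASIS = re.compile(r"(\*{1,3})(\S(?:.*?\S)?)\1")       # *x*, **x**, ***x***
_INLINE_CODE = re.compile(r"`([^`]*)`")                     # `code`
_LEFTOVER = re.compile(r"[#*`]")                            # stray symbols


def strip_markdown_for_tts(text: str) -> str:
    """Remove Markdown symbols so the TTS engine does not read them aloud."""
    text = _HEADING.sub("", text)
    text = _BULLET.sub("", text)
    text = _EMPHASIS.sub(r"\2", text)     # keep the emphasized words
    text = _INLINE_CODE.sub(r"\1", text)  # keep the code text itself
    text = _LEFTOVER.sub("", text)
    # Collapse whitespace (including newlines) left behind by removed markup
    return re.sub(r"\s+", " ", text).strip()
```

The final pattern also drops legitimate symbols, such as the "#" in "order #1234", so adjust it to the kind of content your agent produces.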

Deliver Reliable Voice AI Experiences with Automated Testing Solutions

From eliminating unwanted bot interruptions and optimizing response latency to refining conversational flows and ensuring proper data formatting, Coval provides the comprehensive testing insights needed for continuous voice AI improvement.

By integrating automated voice bot testing into your development workflow, you can reduce QA costs, accelerate time-to-market, and deliver more reliable conversational AI experiences that drive customer engagement and business growth.

Ready to Optimize Your Voice AI Performance?

WebRTC.ventures specializes in building and optimizing enterprise-grade voice AI solutions, conversational AI applications, and real-time communication systems. Our expert team leverages advanced testing tools like Coval to ensure your voice bots deliver exceptional customer experiences while maximizing ROI.

Contact WebRTC.ventures for a consultation and let us help you deliver a superior customer experience. Let’s make it live!
