Generative AI is a powerful tool for call centers. It can be integrated as a standalone AI Sales Agent or as a co-pilot providing Agent Assist to a human sales rep, among other uses. Generative AI is particularly well-suited for industries like travel because it can pull from public information about destinations, climates, and the like in order to provide highly personalized advice for would-be travelers.

In this post, we’ll guide you through building an AI Travel Agent (aka voicebot) by integrating Symbl.ai’s Nebula Large Language Model (LLM) for natural human conversation, Symbl.ai’s Streaming API for real-time transcription, and Amazon Connect’s robust communication platform.

In a later post, Integrating Agent Assist with Symbl.ai Nebula LLM and Amazon Connect, we show an alternate use of this functionality in which Generative AI serves as a co-pilot to a human agent. Of course, the benefits of both the AI Travel Agent and the AI Travel Agent Assist extend beyond the travel sector to almost any field.

Let’s start by looking at the main players in this project: Nebula LLM, Symbl.ai’s Streaming API, and Amazon Connect. Then we will walk through the steps of building our AI Travel Agent.

Nebula LLM

Nebula, Symbl.ai’s proprietary Large Language Model, is optimized specifically for building Generative AI experiences and workflows that involve human conversations. It is capable of processing various types of conversations such as sales, contact center, recruitment, meetings, emails, chats, and more.

Nebula can perform instruction tasks such as generating summaries, suggesting follow-up questions, drafting emails, and identifying issues to review. In the travel agent scenario, it can also identify customer issues, recommend resolutions, and suggest alternative travel arrangements. Together, these capabilities lead to:

- Enhanced Customer Engagement

- Quick Access to Information

- Reduction in Training Time

- Increased Sales Opportunities

- Error Reduction

Nebula is ideal for scenarios involving human dialogue and supports two distinct model variations.

- Chat Model – The Nebula chat endpoint takes a list of messages comprising a chat between a human and assistant, and returns a response containing an updated list of messages, with the last message containing a response from the model.

- Instruct Model – The Model API lets you call the model to perform a task by sending a prompt (an instruction plus a conversation transcript) together with generation parameters, and returns the generated output.

In our project, we use the Chat model.

Interested in learning more about the power of LLMs? Join us for the February 21, 2024 episode of WebRTC Live featuring Symbl.ai’s Dan Nordale. Register today.

Symbl.ai’s Streaming API for Real-time Transcription

Symbl.ai provides a wide range of services and products that leverage advanced natural language processing and machine learning. These tools are adept at analyzing text and speech data to extract valuable insights and intelligence. The features offered by Symbl.ai are diverse, encompassing:

In this project, we used the Streaming API, which uses the WebSocket protocol to process audio and provide conversation intelligence in real time.

Amazon Connect AI-Powered Contact Center

Amazon Connect is the Amazon Web Services (AWS) cloud-based contact center service that is integral to the solutions discussed in this blog. It offers a seamless, scalable, and customizable experience for call centers of all types. Amazon Connect is designed for easy setup and scalability, allowing businesses to quickly establish and adapt their contact center operations as needed, without extensive hardware or complex software. This adaptability is crucial for businesses experiencing growth or fluctuating contact volumes.

A significant advantage of Amazon Connect is its ability to integrate with other AWS services and third-party applications, as we do here with Symbl.ai’s Nebula LLM and Streaming API. This integration facilitates real-time data processing and intelligence gathering, enhancing customer service.

Amazon Connect also provides tools for customizing customer experiences, including interactive voice response systems and real-time analytics, enabling businesses to tailor interactions to specific customer needs. Moreover, it adheres to AWS’s rigorous standards for data security and compliance, ensuring secure communications and adherence to data protection regulations.

The Complete Tech Stack

- Symbl.ai

- Symbl.ai Nebula LLM

- Amazon Connect

- Amazon Kinesis Video Streams

- amazon-connect-streams library

- connect-rtc-js library

- Web Speech API

- Next.js

- Tailwind CSS

- Ant Design

- Nest.js with Socket.io

Building our AI Travel Agent

Prerequisites

You will need access to the following:

- An appId and appSecret pair for Symbl.ai, which you can get from the platform’s main page. We use these to retrieve a temporary access token.

- An API key for Nebula LLM, which you get by joining the beta waitlist.

- An access key ID and secret access key for the AWS account where Amazon Connect is configured.
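One way to keep these credentials out of source code is to read them from environment variables when the app starts. The stack includes a Nest.js backend, which seems the natural place for this, although the post does not say where each credential is stored. The following is a minimal sketch. The `SYMBL_*` and `NEBULA_API_KEY` variable names and the `loadConfig` helper are hypothetical. Only `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` follow the standard AWS SDK convention.

```typescript
// Hypothetical variable names; only the AWS_* names follow the AWS SDK convention.
interface AppConfig {
  symblAppId: string;
  symblAppSecret: string;
  nebulaApiKey: string;
  awsAccessKeyId: string;
  awsSecretAccessKey: string;
}

type Env = Record<string, string | undefined>;

// Maps each config field to the environment variable it is read from.
const ENV_VARS: Record<keyof AppConfig, string> = {
  symblAppId: "SYMBL_APP_ID",
  symblAppSecret: "SYMBL_APP_SECRET",
  nebulaApiKey: "NEBULA_API_KEY",
  awsAccessKeyId: "AWS_ACCESS_KEY_ID",
  awsSecretAccessKey: "AWS_SECRET_ACCESS_KEY",
};

// Reads every credential and fails fast, reporting all missing variables at once.
function loadConfig(env: Env): AppConfig {
  const config: Partial<AppConfig> = {};
  const missing: string[] = [];
  for (const field of Object.keys(ENV_VARS) as (keyof AppConfig)[]) {
    const value = env[ENV_VARS[field]];
    if (value === undefined || value.trim() === "") {
      missing.push(ENV_VARS[field]);
    } else {
      config[field] = value.trim();
    }
  }
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return config as AppConfig;
}
```

On a Node.js server, you would call this once at startup, for example `loadConfig(process.env)`. A missing credential then fails immediately instead of on the first API call.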

Make sure to set up your Amazon Connect instance by claiming a phone number and configuring routing profiles, agents, queues, contact flows, and so on, as described in the ‘Get started with Amazon Connect’ documentation.

Architecture

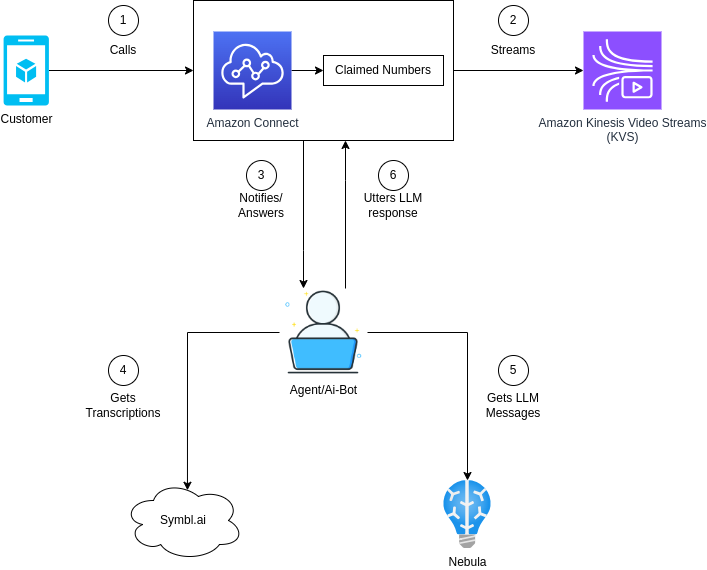

Our AI Travel Agent will work like this:

- A customer calls one of Amazon Connect’s claimed numbers for the application.

- Amazon Connect starts streaming the call audio to Amazon Kinesis Video Streams (KVS).

- A human agent receives the call within the web application and answers it.

- The customer’s audio is streamed to Symbl.ai, which returns a real-time transcription.

- The transcription is sent to Nebula LLM with a specific prompt so it returns the desired type of response.

- The LLM’s response is spoken by the browser’s native Text-to-Speech (TTS) engine and sent back to Amazon Connect so the customer can hear it.
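The flow above can be sketched as a small pipeline in which each stage is passed in as a function. That way, the transcription, LLM, and TTS pieces can be developed and tested separately. This is an illustrative sketch, not the project's code. `createTurnHandler`, `AskLlm`, and `Speak` are hypothetical names, and the stages are assumed to be asynchronous.

```typescript
// Hypothetical stage signatures, one per step of the flow described above.
type AskLlm = (utterance: string) => Promise<string>;
type Speak = (text: string) => Promise<void>;

interface TurnHandler {
  // Called with each complete customer utterance from the transcription step.
  handleUtterance(utterance: string): Promise<void>;
}

// Chains transcription output -> LLM -> text-to-speech, one turn at a time.
function createTurnHandler(askLlm: AskLlm, speak: Speak): TurnHandler {
  // Tail of the turn queue; every new turn starts after the previous one ends.
  let queue: Promise<void> = Promise.resolve();
  return {
    handleUtterance(utterance: string): Promise<void> {
      const text = utterance.trim();
      if (text === "") return queue; // nothing to answer
      queue = queue
        .then(async () => {
          const reply = await askLlm(text);
          await speak(reply);
        })
        .catch((err: unknown) => {
          // Log and keep going so one failed turn does not block later ones.
          console.error("Turn failed:", err);
        });
      return queue;
    },
  };
}
```

Each turn is chained onto the previous promise. This keeps replies in order even if the customer speaks again while the LLM is still answering.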

These steps are depicted in the architecture diagram above.

Connecting and Loading the Amazon Connect Contact Control Panel (CCP) on the Web

We use Next.js and TypeScript for the client-side code, along with the amazon-connect-streams library, which loads the CCP interface so we can receive calls directly in our web application. We also download the latest versions of the connect-rtc-js and aws-sdk libraries.

Now load the CCP within the Next.js app by following these steps:

- Call connect.core.initCCP, passing a reference to the div element in the DOM where the CCP should be loaded.

- Configure the CCP by passing an options object. (You can read more about these options in the Amazon Connect Streams GitHub repo.)

- Set softphone.allowFramedSoftphone to false. This tells the amazon-connect-streams library not to handle the softphone inside its default, framed CCP.

- Call connect.core.initSoftphoneManager({ allowFramedSoftphone: true }), which tells the amazon-connect-streams library to use connect-rtc-js as the softphone manager in our page.

- Register a callback to run when a call is received.

The connect-rtc-js library is the WebRTC library that amazon-connect-streams uses for the softphone. Managing the softphone from our own page gives our code access to its RTCSession object, from which we can get the remote stream and the RTCPeerConnection object.

The code for these steps is shown below:

```typescript
// 1. Call connect.core.initCCP and 2. configure the CCP
connect.core.initCCP(containerDivRef.current, {
  ccpUrl: "<amazon_connect_url/ccp-v2/>",
  loginPopup: true,
  loginPopupAutoClose: true,
  loginOptions: { autoClose: true },
  // 3. Prevent amazon-connect-streams from using the softphone of its default (framed) CCP
  softphone: { allowFramedSoftphone: false },
  pageOptions: {
    enableAudioDeviceSettings: true,
    enablePhoneTypeSettings: true,
  },
});

// 4. Initialize connect-rtc-js as the softphone manager
connect.core.initSoftphoneManager({ allowFramedSoftphone: true });

// 5. Register a callback that runs when a softphone session starts (a call is received)
connect.core.onSoftphoneSessionInit(({ connectionId }) => {
  // Returns the softphone manager, which is built on connect-rtc-js
  const softphoneManager = connect.core.getSoftphoneManager();
  connectionIdRef.current = connectionId;
  setSoftphoneManager(softphoneManager);

  if (!softphoneManager) return;

  // connect-rtc-js RtcSession for this call
  const session = softphoneManager.getSession(connectionId);
  if (!session) return;

  // Underscore-prefixed members are internal to connect-rtc-js and may change
  // between versions; the remote stream may not exist yet at this point.
  const remoteStream = session._remoteAudioStream;

  // Assumes RtcSession exposes an onRemoteStreamAdded handler setter
  session.onRemoteStreamAdded = (...args) => {
    console.log({ remoteStreamAdded: args });
  };
});
```
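Once the remote stream is available, its audio still has to be sent to Symbl.ai. Assume the Streaming API session is configured for `LINEAR16` (16-bit signed PCM) audio. In that case, Float32 samples captured from the stream with the Web Audio API must be converted before they go over the WebSocket. The following is a minimal sketch of just that conversion. The capture side (for example, an `AudioWorklet` attached to the remote stream) is not shown, and `floatTo16BitPCM` is a hypothetical helper name.

```typescript
// Converts Web Audio Float32 samples (nominally in [-1, 1]) to 16-bit signed
// little-endian PCM, the LINEAR16 format assumed for the Symbl.ai session.
function floatTo16BitPCM(samples: Float32Array): ArrayBuffer {
  const buffer = new ArrayBuffer(samples.length * 2);
  const view = new DataView(buffer);
  for (let i = 0; i < samples.length; i++) {
    // Clamp first: values slightly outside [-1, 1] would otherwise wrap around.
    const s = Math.max(-1, Math.min(1, samples[i]));
    // Scale asymmetrically so -1 maps to -32768 and 1 maps to 32767;
    // setInt16 truncates the fractional part.
    view.setInt16(i * 2, s < 0 ? s * 0x8000 : s * 0x7fff, true);
  }
  return buffer;
}
```

You can pass each converted chunk to the open WebSocket with `ws.send(...)`, which accepts an `ArrayBuffer`. The sample rate declared for the session must match the rate of the captured audio.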

Connecting Symbl.ai Streaming API

To connect to the Symbl.ai Streaming API, we first need an access token to authenticate the requests.

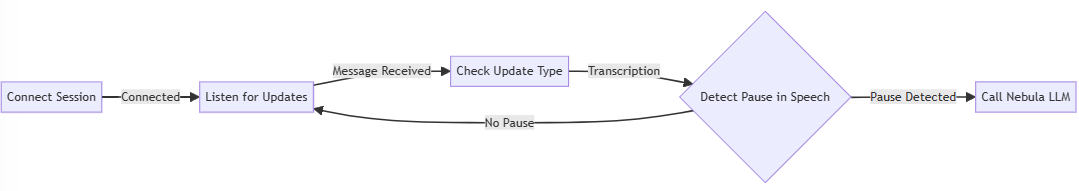
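The token exchange and session start can be sketched as follows. The endpoint `https://api.symbl.ai/oauth2/token:generate`, the `start_request` message, and the `wss://api.symbl.ai/v1/streaming/{id}` URL reflect Symbl.ai's public documentation as we understand it, so verify them against the current API reference. The helper names, meeting title, and configuration values are hypothetical.

```typescript
// Endpoint, body fields and URL pattern as described in Symbl.ai's public docs;
// verify against the current API reference before relying on them.
const SYMBL_TOKEN_URL = "https://api.symbl.ai/oauth2/token:generate";

interface TokenRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Request that exchanges appId/appSecret for a temporary access token.
// Run it server-side so the app secret never reaches the browser.
function buildTokenRequest(appId: string, appSecret: string): TokenRequest {
  return {
    url: SYMBL_TOKEN_URL,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ type: "application", appId, appSecret }),
    },
  };
}

// Streaming API WebSocket URL; connectionId is any unique id the app chooses.
function buildStreamingUrl(connectionId: string, accessToken: string): string {
  return (
    `wss://api.symbl.ai/v1/streaming/${encodeURIComponent(connectionId)}` +
    `?access_token=${encodeURIComponent(accessToken)}`
  );
}

// First message sent once the socket opens. Values are illustrative.
function buildStartRequest(sampleRateHertz: number) {
  return {
    type: "start_request",
    meetingTitle: "AI Travel Agent call", // hypothetical title
    config: {
      confidenceThreshold: 0.5,
      languageCode: "en-US",
      speechRecognition: { encoding: "LINEAR16", sampleRateHertz },
    },
  };
}

// Browser side: open the session with a token obtained from the server.
function openSymblSocket(connectionId: string, accessToken: string, sampleRateHertz: number): WebSocket {
  const ws = new WebSocket(buildStreamingUrl(connectionId, accessToken));
  ws.onopen = () => ws.send(JSON.stringify(buildStartRequest(sampleRateHertz)));
  return ws;
}
```

On the server, `fetch(url, init)` with the built request should return JSON containing an `accessToken` (and, per the docs, an `expiresIn` lifetime). Passing only that short-lived token to the browser keeps the app secret private.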

Once the WebSocket connection is open, you can handle messages in its onmessage callback to receive updates such as transcriptions, trackers, sentiments, and action items from Symbl.ai.
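Symbl.ai sends several kinds of messages over the same socket. The following sketch keeps only transcript text. It assumes two message shapes, which you should confirm in the Streaming API reference:

- Live transcripts arrive as `type: "message"` with a `message.punctuated.transcript` field.
- Finalized sentences arrive as `type: "message_response"` with `messages[].payload.content`.

`parseSymblMessage` is a hypothetical helper.

```typescript
// Partial, assumed view of Symbl.ai Streaming API messages.
interface LiveTranscriptEvent {
  type: "message";
  message: { punctuated?: { transcript: string }; isFinal?: boolean };
}
interface MessageResponseEvent {
  type: "message_response";
  messages: { payload: { content: string } }[];
}

type TranscriptUpdate =
  | { kind: "live"; text: string; isFinal: boolean }
  | { kind: "final"; text: string };

// Extracts transcript updates from one raw WebSocket message. Everything else
// (insights, topics, trackers, ...) is ignored, since only text is needed.
function parseSymblMessage(raw: string): TranscriptUpdate[] {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return []; // not JSON: ignore rather than crash the socket handler
  }
  if (typeof data !== "object" || data === null) return [];
  const type = (data as { type?: string }).type;
  if (type === "message") {
    const msg = (data as LiveTranscriptEvent).message;
    const text = msg?.punctuated?.transcript;
    return typeof text === "string" ? [{ kind: "live", text, isFinal: msg.isFinal === true }] : [];
  }
  if (type === "message_response") {
    return ((data as MessageResponseEvent).messages ?? [])
      .map((m) => m?.payload?.content)
      .filter((t): t is string => typeof t === "string")
      .map((text) => ({ kind: "final" as const, text }));
  }
  return [];
}
```

In the browser, you would call it from the socket handler, for example `ws.onmessage = (ev) => parseSymblMessage(ev.data)`, and pass the results on to the pause-detection logic.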

In our case, we only need the transcriptions, and we call Nebula LLM only after detecting a pause of a set number of milliseconds in the customer’s speech.
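One way to implement this pause rule is to record when the last transcript update arrived. The utterance is then treated as complete once no update has arrived for a configurable silence window. The clock is passed in explicitly so the logic can be tested without timers. The `PauseDetector` class and its 1,200 ms default are hypothetical, not values taken from this project. The sketch also assumes that live updates for the current segment are cumulative and that a final update closes the segment.

```typescript
// Reports a complete utterance once no transcript update has arrived for
// silenceMs milliseconds. Timestamps are supplied by the caller.
class PauseDetector {
  private committed: string[] = []; // finalized segments of this utterance
  private partial = ""; // latest live text of the segment in progress
  private lastUpdateMs: number | null = null;
  private readonly silenceMs: number;

  constructor(silenceMs = 1200) {
    this.silenceMs = silenceMs;
  }

  // Record a transcript update; a final update closes the current segment.
  update(text: string, isFinal: boolean, nowMs: number): void {
    const t = text.trim();
    if (t === "") return;
    if (isFinal) {
      this.committed.push(t);
      this.partial = "";
    } else {
      this.partial = t; // live text replaces the previous live text
    }
    this.lastUpdateMs = nowMs;
  }

  // Returns the utterance and resets if the silence window has elapsed,
  // otherwise null.
  poll(nowMs: number): string | null {
    if (this.lastUpdateMs === null || nowMs - this.lastUpdateMs < this.silenceMs) {
      return null;
    }
    const parts = this.partial ? this.committed.concat(this.partial) : this.committed;
    const utterance = parts.join(" ");
    this.committed = [];
    this.partial = "";
    this.lastUpdateMs = null;
    return utterance;
  }
}
```

In the app, `poll(Date.now())` could run on a short `setInterval` (say, every 100 ms), and any non-null result is sent to Nebula LLM.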

This flow is depicted in the image below.

Calling Nebula LLM

Now for the part we have been waiting for: the power of Generative AI.

As mentioned above, Nebula provides two models: Instruct and Chat. In this post we use the latter because we want to simulate a conversation, so each request to the Nebula Chat Model must include the previous messages as context.
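Because the chat endpoint takes the full list of messages on every call, the client keeps the conversation history and sends it with each request. The following is a minimal sketch. It assumes a request body with `system_prompt`, `messages`, and `max_new_tokens` fields, with messages shaped as `{ role: "human" | "assistant", text }`. Treat these field names, the prompt text, and the history cap as assumptions to check against the Nebula API reference. `ChatHistory` and `TRAVEL_AGENT_PROMPT` are hypothetical names.

```typescript
// Assumed Nebula chat message shape; verify against the API reference.
interface ChatMessage {
  role: "human" | "assistant";
  text: string;
}

// Hypothetical system prompt steering the model toward short, spoken replies.
const TRAVEL_AGENT_PROMPT =
  "You are a friendly travel agent on a phone call. Answer in two or three " +
  "short sentences, ask one follow-up question at a time, and never use lists.";

class ChatHistory {
  private messages: ChatMessage[] = [];
  private readonly maxMessages: number;

  // maxMessages caps how much history is sent, keeping requests small.
  constructor(maxMessages = 20) {
    this.maxMessages = maxMessages;
  }

  addHumanTurn(text: string): void {
    this.messages.push({ role: "human", text });
    this.trim();
  }

  addAssistantTurn(text: string): void {
    this.messages.push({ role: "assistant", text });
    this.trim();
  }

  // JSON body for the next chat request (assumed field names).
  toRequestBody(maxNewTokens = 256): string {
    return JSON.stringify({
      max_new_tokens: maxNewTokens,
      system_prompt: TRAVEL_AGENT_PROMPT,
      messages: this.messages,
    });
  }

  // Drops the oldest messages, then any leading assistant message so the
  // history always starts with a customer turn.
  private trim(): void {
    if (this.messages.length > this.maxMessages) {
      this.messages = this.messages.slice(-this.maxMessages);
    }
    while (this.messages.length > 0 && this.messages[0].role !== "human") {
      this.messages.shift();
    }
  }
}
```

The body is posted to the chat endpoint. The model's reply is then recorded with `addAssistantTurn` before the next customer utterance arrives.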

Also note that the Chat Model has two different endpoints:

- /v1/model/chat: Returns the generated output in a single HTTP response.

- /v1/model/chat/streaming: Returns the generated response as Server-Sent Events (SSE), so the client can start receiving output progressively while it is being generated.

In this demo, we use the second option because it lets us receive the response as it is being generated.
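Consuming the streaming endpoint means reading the response body incrementally and splitting it into Server-Sent Events. Network chunks do not line up with event boundaries, so the parser has to buffer partial lines. The following sketch handles only the `data:` field of the SSE format. What each data payload contains (for example, a JSON fragment of generated text) is an assumption to verify against the Nebula documentation. `SseParser` and `readSse` are hypothetical names.

```typescript
// Incremental Server-Sent Events parser: feed it decoded text chunks and it
// returns the data payload of each event completed so far. Handles LF and
// CRLF line endings; event:, id:, retry: fields and comments are ignored.
class SseParser {
  private buffer = "";
  private dataLines: string[] = [];

  push(chunk: string): string[] {
    this.buffer += chunk;
    const lines = this.buffer.split("\n");
    // The last piece may be an incomplete line; keep it for the next chunk.
    this.buffer = lines.pop() ?? "";
    const events: string[] = [];
    for (const rawLine of lines) {
      const line = rawLine.endsWith("\r") ? rawLine.slice(0, -1) : rawLine;
      if (line === "") {
        // A blank line ends the current event.
        if (this.dataLines.length > 0) events.push(this.dataLines.join("\n"));
        this.dataLines = [];
      } else if (line.startsWith("data:")) {
        // Per the SSE format, one leading space after the colon is removed.
        const value = line.slice("data:".length);
        this.dataLines.push(value.startsWith(" ") ? value.slice(1) : value);
      }
    }
    return events;
  }
}

// Reads a fetch() Response body chunk by chunk and reports each SSE payload.
async function readSse(response: Response, onData: (data: string) => void): Promise<void> {
  if (!response.body) throw new Error("Response has no body to stream");
  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  const parser = new SseParser();
  for (;;) {
    const { done, value } = await reader.read();
    if (done) break;
    // stream: true keeps multi-byte characters split across chunks intact.
    for (const data of parser.push(decoder.decode(value, { stream: true }))) {
      onData(data);
    }
  }
  // An event still unterminated at end of stream is discarded, as SSE requires.
}
```

You can then decode each payload (for example, with `JSON.parse`) according to the format the streaming endpoint actually emits.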

Once the text response is received, we send it to the call agent, which converts the text to speech (TTS) and relays the audio to the Amazon Connect call.
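With a streamed response, the agent can start speaking before the whole reply has arrived. It buffers the generated text and hands complete sentences to the TTS engine. The following is a minimal sketch using the browser's Web Speech API (`speechSynthesis` and `SpeechSynthesisUtterance`). The sentence-splitting rule is our own simple heuristic, and `SentenceChunker` and `speakSentence` are hypothetical names. Routing the synthesized audio into the Amazon Connect call is not covered here.

```typescript
// Buffers streamed text and returns complete sentences as soon as they end
// with ., ! or ? followed by whitespace. Heuristic: abbreviations such as
// "St. Louis" will be split early.
class SentenceChunker {
  private buffer = "";

  push(text: string): string[] {
    this.buffer += text;
    const sentences: string[] = [];
    const boundary = /[.!?]+\s+/g;
    let start = 0;
    let match: RegExpExecArray | null;
    while ((match = boundary.exec(this.buffer)) !== null) {
      const end = match.index + match[0].length;
      const sentence = this.buffer.slice(start, end).trim();
      if (sentence) sentences.push(sentence);
      start = end;
    }
    this.buffer = this.buffer.slice(start); // keep the unfinished sentence
    return sentences;
  }

  // Returns whatever is left once the stream has finished.
  flush(): string[] {
    const rest = this.buffer.trim();
    this.buffer = "";
    return rest ? [rest] : [];
  }
}

// Browser-only: queues a sentence on the Web Speech API synthesizer.
// Utterances passed to speak() are played one after another.
function speakSentence(sentence: string): void {
  if (typeof speechSynthesis === "undefined") return; // e.g. server-side render
  speechSynthesis.speak(new SpeechSynthesisUtterance(sentence));
}
```

As each SSE payload yields a text fragment, `push` returns finished sentences that can be spoken immediately. `flush` handles the remaining text once the stream ends.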

Time for a demo of our AI Travel Agent in Action!

If you are interested in integrating an AI Agent into your communications platform, reach out to the experts at WebRTC.ventures. Contact us today!