I attended Enterprise Connect 2023 at the end of March, my first time at the conference in a few years, and had great conversations with a number of vendors and partners of WebRTC.ventures. 2023 is certainly the year of the AI hype cycle, and the content at Enterprise Connect was no exception. Even so, it provided a lot of interesting insight into the role of AI in the enterprise.

In particular, if there is one phrase that Enterprise Connect emphasized in my mind, it’s AI Agent Assist. In this post, I’ll explain that term, give some examples, and relate it to the role of a human agent in the enterprise. To be clear, I’m using the term as a generic concept and not referring to any one company’s product, although multiple companies do have products that go by that name.

At WebRTC.ventures, we have been building live video applications for our clients since 2015. While we primarily work with video applications, they are almost always multimodal in nature. Our team of multimodal communication developers can integrate voice, video, SMS, and text chat into any web or mobile application. As the speakers at Enterprise Connect demonstrated, multimodal communication development is also rapidly evolving to incorporate intelligent bots, AI, and Large Language Models (LLMs) that can assist the live agent.

The Role of AI in the Enterprise Contact Center

When you talk to people about the role of AI in the enterprise contact center, they generally hold one of two opinions. Some believe that AI will soon replace all human agents, and that the industry is on the precipice of radical change that will eliminate a whole category of human jobs. Others believe that AI will radically change the contact center by providing a level of intelligent customer service that a human cannot efficiently achieve on their own.

I met people who were adamantly of each opinion at Enterprise Connect. The common belief amongst everyone I spoke to was that this is not a passing fad. Revolutionary change is well underway. The only point of contention is around the role for humans (and their jobs) once this is complete.

Personally, I think it is unrealistic to believe that the role of the human agent in the contact center is going away. Human agents will remain an important part of the picture. It also seems clear to me that many of the least complex tasks that an agent might perform will be done entirely by bots. But there remains a role for human agents and AI to work together and complement each other. That is where AI Agent Assist comes in.

What is AI Agent Assist?

AI Agent Assist is an approach in which the knowledge of a human agent is supplemented by an AI bot that monitors the conversation between that agent and the customer. The bot can guide the human agent along the way, helping them with product specification details, recommendations, suggested answers and follow-up actions, insight into the customer’s history with the company, what similar customers have purchased, and more.

This assistance is provided in real time using a speech-to-text transcript of the live conversation. The transcript is fed to the bot, and the bot’s assistance is then displayed on the agent’s screen. The customer is most likely not aware that the human agent is receiving this assistance, or that some of the agent’s answers may have been written by the bot. All the customer knows is that they are receiving a superior level of customer service.
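To make that data flow concrete, here is a minimal Python sketch of the loop, assuming the speech-to-text service already delivers transcript segments labeled by speaker. `TranscriptSegment`, `AgentDashboard`, `keyword_bot`, and `HYPOTHETICAL_KB` are hypothetical stand-ins invented for illustration; a real system would replace `keyword_bot` with an actual AI/ML service and push suggestions to the agent’s browser instead of appending them to a list.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List, Optional


@dataclass
class TranscriptSegment:
    speaker: str  # "agent" or "customer", as labeled by the speech-to-text service
    text: str


@dataclass
class AgentDashboard:
    suggestions: List[str] = field(default_factory=list)

    def show(self, suggestion: str) -> None:
        # Stand-in for pushing the suggestion to the agent's screen.
        self.suggestions.append(suggestion)


# Hypothetical knowledge base: keyword -> hint for the agent.
HYPOTHETICAL_KB: Dict[str, str] = {
    "return": "Check the return policy for this product before answering.",
    "warranty": "Look up the warranty terms on the customer's order.",
}


def keyword_bot(history: List[TranscriptSegment]) -> Optional[str]:
    """Toy assist bot: reacts only to the customer's latest utterance."""
    latest = history[-1]
    if latest.speaker != "customer":
        return None
    text = latest.text.lower()
    for keyword, hint in HYPOTHETICAL_KB.items():
        if keyword in text:
            return hint
    return None


def run_agent_assist(
    segments: Iterable[TranscriptSegment],
    bot: Callable[[List[TranscriptSegment]], Optional[str]],
    dashboard: AgentDashboard,
) -> None:
    history: List[TranscriptSegment] = []
    for segment in segments:  # segments arrive one by one as the call proceeds
        history.append(segment)
        suggestion = bot(history)  # the bot sees the whole conversation so far
        if suggestion:
            dashboard.show(suggestion)  # visible to the agent only, not the customer
```

Passing the full `history` to the bot, rather than only the latest segment, leaves room for a smarter bot to use earlier context even though this toy one ignores it.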

Imagining the Capabilities of Conversational AI and LLMs

Although I don’t believe that the role of the human agent will disappear, it is interesting to first look at an example of how far conversational AI has come, and how it can be paired with an LLM to create powerful customer experiences, even without a human agent involved.

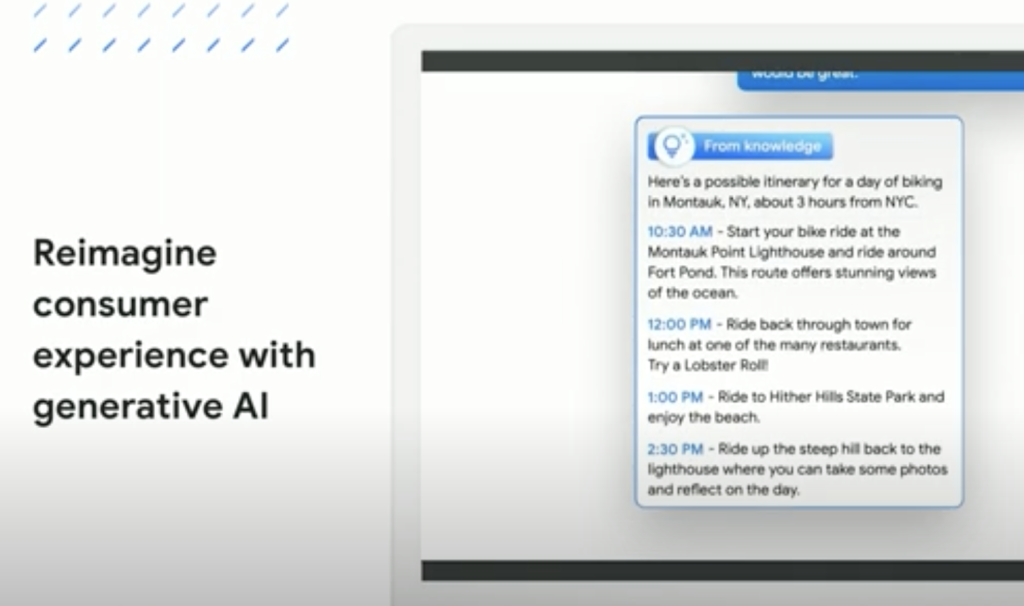

In the Google keynote at Enterprise Connect 2023, there was a very interesting demo that shows the potential of Large Language Models in the contact center. The keynote was delivered by Behshad Behzadi, PhD, VP of Engineering for Conversational AI at Google Cloud.

In part of the presentation, Behshad shows a demo of a customer shopping for a bicycle (starting at minute 20:00) and researching what to buy via the conversational AI of a bike shop. The bot uses domain knowledge specific to the shop and what it has been trained to know about bicycles. It can also tap into the wider knowledge that a ChatGPT-style LLM has beyond that specific store. With this, the bot can compare products with those of competitors. It can even map out a specific bike route near where the customer lives, based on publicly available information on the internet.

The Google keynote went on to show how, in this fictional scenario, the AI is able not only to fully assist the customer but also to summarize the customer contact and take follow-up actions if needed, such as scheduling a pickup time for the bike that has been purchased.

ChatGPT is not ready for the Enterprise

Although the example above shows the power of a Large Language Model, Behshad also took time in the keynote to explain that this does not mean a general-purpose LLM like ChatGPT can simply be plugged into the enterprise. I think this is an important point, and Google was correct to make it, even though their perspective could be biased by the fact that Microsoft has invested in OpenAI, the maker of ChatGPT, and is incorporating it into its own enterprise tools.

An LLM can (simplistically) be compared to a very conversant and well-spoken search engine. Hopefully we all know that just because you can Google something and find an article about it on the internet, does not mean that article is factual in any way. Google just helps you find the content you asked for – it doesn’t do much to help you determine if that content is reliable or factual. ChatGPT is similar, but with the added twist that ChatGPT can answer your question in a very convincing way, even if its logic is based on falsehoods that it discovered on the internet.

That sort of risk is not acceptable in the enterprise. What if your company is using a Conversational AI that is very good at convincing your customers of something which is not true? That is very damaging to your corporate brand and may even carry legal consequences.

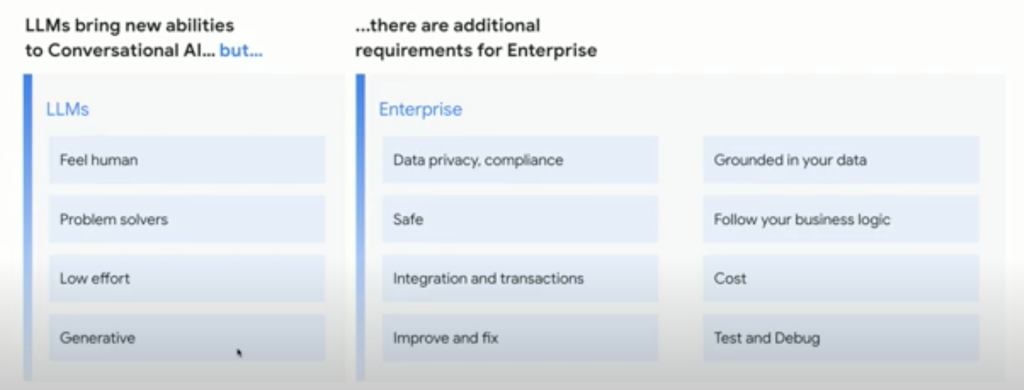

For that reason, among others, Behshad said that LLMs bring new abilities to Conversational AI, but that there are additional requirements for using them in the enterprise. As shown in the image above from his keynote, these include being safe, being grounded in data, and following all of your enterprise’s data privacy and compliance requirements.

We have seen an incredible increase in the abilities of LLMs like ChatGPT in early 2023. However, these enterprise constraints are a big part of the reason that we still have a long way to go before we know how to responsibly incorporate AI into the enterprise.

If you need any further evidence of the risks that the current state of LLMs like ChatGPT can pose, look no further than the recent threat of a defamation lawsuit by an Australian mayor against OpenAI, the maker of ChatGPT.

The lawsuit is based on ChatGPT responses that falsely stated this mayor had been convicted of crimes and gone to prison. In a separate Washington Post article about the potential lawsuit, Cambridge University computer scientist Michael Schlichtkrull was quoted as saying, “In AI research we usually call this a ‘hallucination.’ Language models are trained to produce text that is plausible, not text that is factual.”

In other words, there is a lot of potential for LLMs, but their tendency to “hallucinate,” making up information in order to tell a compelling story, is a big risk for the enterprise. This is part of the reason I believe LLMs need to be used with caution, and why there is still a very large role for human agents in the contact center. By working together, AI Agent Assist tools and human agents can provide the best customer experience while reducing these risks.
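One way to reduce hallucination risk is to let the AI suggest only content the enterprise has already approved, and to hand everything else back to the human agent. The Python sketch below illustrates that idea with a deliberately simple word-overlap retriever. It is an assumption made for illustration, not how Google or any other vendor implements grounding; `grounded_suggestion`, `min_overlap`, and the document IDs are hypothetical, and a real system would use proper retrieval (for example, embeddings) and ignore common stopwords.

```python
import re
from dataclasses import dataclass
from typing import Dict, Optional, Set


@dataclass
class GroundedSuggestion:
    text: str
    source_id: Optional[str]  # None means no approved source supports an answer


def _tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grounded_suggestion(
    question: str, approved_docs: Dict[str, str], min_overlap: int = 2
) -> GroundedSuggestion:
    """Suggest only approved text; otherwise defer to the human agent."""
    question_tokens = _tokens(question)
    best_id, best_score = None, 0
    for doc_id, doc_text in approved_docs.items():
        score = len(question_tokens & _tokens(doc_text))  # crude word-overlap relevance
        if score > best_score:
            best_id, best_score = doc_id, score
    if best_id is None or best_score < min_overlap:
        return GroundedSuggestion(
            "No approved source found; the agent should answer or escalate.", None
        )
    # Return the approved text verbatim rather than letting a model paraphrase it.
    return GroundedSuggestion(approved_docs[best_id], best_id)
```

Returning a `source_id` with each suggestion also lets the agent see where the answer came from before repeating it to the customer.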

Using AI Agent Assist during a call

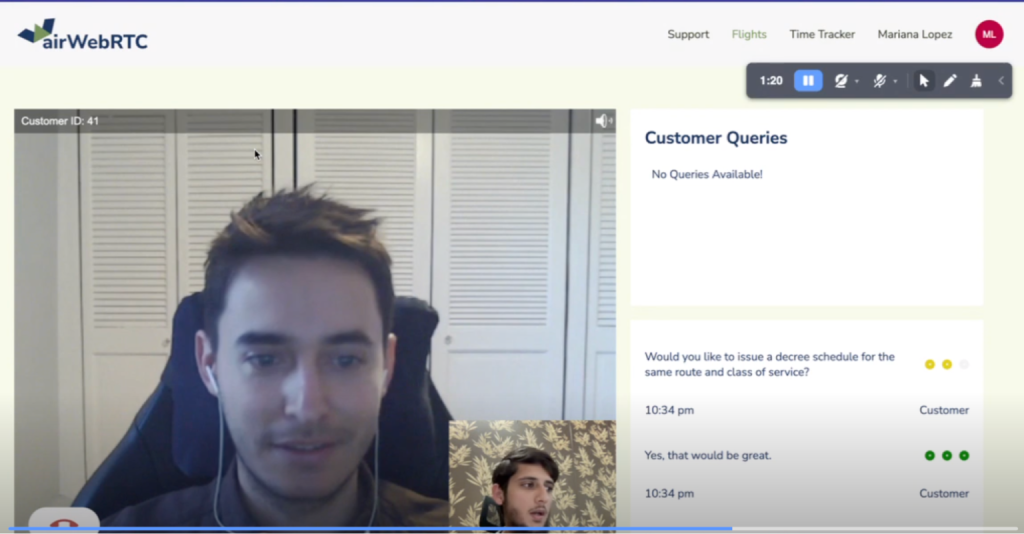

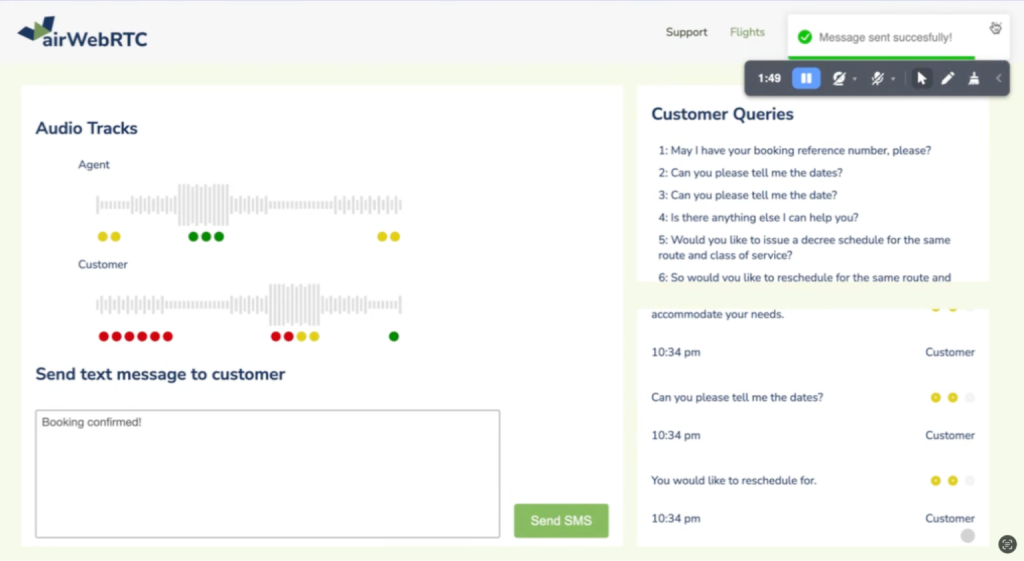

During a call, there are many levels of AI that can help an agent. In a recent blog post on WebRTC.ventures, we demonstrated how to use the Symbl.ai APIs during a Vonage video call to capture a live transcript of a human agent assisting a customer with a flight change. Alongside the transcript, we used the sentiment analysis API in real time to indicate whether the customer was feeling positive or negative at that point in the conversation. The Symbl.ai model also picked out specific questions the customer asked and displayed them on the agent’s dashboard.
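As a rough illustration of how a dashboard might consume that kind of output, here is a Python sketch. It does not use the Symbl.ai API; it assumes each transcript segment already carries a sentiment score between -1.0 and 1.0 from some sentiment service, smooths the customer’s recent scores with a rolling window, and uses a trailing question mark as a crude stand-in for model-based question detection. All names and the `threshold` and `window` values are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Tuple


@dataclass
class ScoredSegment:
    speaker: str
    text: str
    sentiment: float  # assumed score in [-1.0, 1.0] supplied by a sentiment service


def sentiment_label(score: float, threshold: float = 0.2) -> str:
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


def dashboard_updates(
    segments: List[ScoredSegment], window: int = 3
) -> List[Tuple[str, List[str]]]:
    """After each customer utterance, emit (smoothed sentiment label, questions so far)."""
    recent: Deque[float] = deque(maxlen=window)  # rolling window smooths one-off swings
    questions: List[str] = []
    updates: List[Tuple[str, List[str]]] = []
    for seg in segments:
        if seg.speaker != "customer":
            continue
        recent.append(seg.sentiment)
        if seg.text.strip().endswith("?"):  # crude stand-in for real question detection
            questions.append(seg.text.strip())
        updates.append((sentiment_label(sum(recent) / len(recent)), list(questions)))
    return updates
```

Smoothing over a few utterances keeps the indicator from flipping on every sentence, at the cost of reacting a little more slowly to a genuine change in mood.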

This is a great example of using AI/ML services like those of Symbl.ai to provide valuable assistance during the call.

If we add an LLM, we can go further. Say the LLM has been trained on our product database and has access to our airline database. It could then help the agent identify flights to recommend to the customer.
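A common way to give an LLM access to live data is to query the database first and then put only the real results into the prompt, so the model cannot invent a flight. This differs from training the model on the database, and it is shown here as one possible approach rather than the one we used. The Python sketch below covers the query and prompt-building steps only; the `Flight` schema, the flight numbers, and the prompt wording are hypothetical, and the actual LLM call is omitted because it depends on the vendor.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass(frozen=True)
class Flight:
    number: str
    origin: str
    destination: str
    departs: date
    seats_available: int


def candidate_flights(
    flights: List[Flight], origin: str, destination: str, on: date
) -> List[Flight]:
    """Look up real options in the (hypothetical) airline data before asking the LLM."""
    matches = [
        f for f in flights
        if f.origin == origin and f.destination == destination
        and f.departs == on and f.seats_available > 0
    ]
    return sorted(matches, key=lambda f: f.number)


def build_assist_prompt(customer_request: str, options: List[Flight]) -> str:
    """Build a prompt that restricts the LLM to the flights retrieved above."""
    lines = [
        f"- {f.number}: {f.origin} to {f.destination} on {f.departs.isoformat()}, "
        f"{f.seats_available} seats left"
        for f in options
    ] or ["- (no matching flights)"]
    return (
        "You are helping a human airline agent. Recommend flights only from the "
        "list below; if none fit the request, say so.\n"
        f"Customer request: {customer_request}\n"
        "Available flights:\n" + "\n".join(lines)
    )
```

The resulting suggestion would still go to the human agent, who decides what to offer the customer.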

This type of functionality is definitely a growing priority for enterprise contact centers. One of our partners, the Amazon Chime SDK team, launched Amazon Chime SDK Call Analytics at Enterprise Connect. (See the AWS announcement, as well as an interview with Principal Product Manager Jennie Tietema.)

These new features let you record and transcribe real-time call audio, as well as perform voice tone analysis and speaker search.

Imagine taking all of this real-time information from a call and then using an LLM to help the agent find better answers to customer questions, to proactively look up data or solutions, and to suggest additional products for them. All of this can be done in the background and presented to the agent during the call, so that they can choose what is the best information to share with the customer. That’s the power of implementing an AI-driven Agent Assist during the conversation.

Using AI Agent Assist after a call

After the call, there is more that we can do with an AI Agent Assist. Going back to the demo in our blog post referenced above, we showed that after the agent has helped the customer with their flight query, the agent sees the full transcript of the conversation, a summary of the questions asked, and an analysis of the sentiment of the customer and the agent over the course of the call. This is an example of how an AI/ML service can provide helpful summaries automatically, instead of the agent having to write notes before going to the next call.

Our application could present a summary of the call and standard call notes based on the format preferred by the airline, and then give the agent a chance to do a quick edit of those if desired before saving them with the call. This allows for a very efficient post-call process for the agent, which returns them to the next call as soon as possible so they can help more customers.
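The draft-then-edit flow described above could look something like the following Python sketch. It assumes an AI/ML service has already extracted fields such as the reason for the call from the transcript; the `NOTES_TEMPLATE` format and the field names are hypothetical, since each airline would define its own.

```python
from typing import Dict, Optional

# Hypothetical note format; each airline would supply its own.
NOTES_TEMPLATE = (
    "Reason for call: {reason}\n"
    "Resolution: {resolution}\n"
    "Follow-up required: {follow_up}"
)


def draft_call_notes(extracted: Dict[str, str]) -> str:
    """Fill the template from fields an AI/ML service extracted from the transcript."""
    return NOTES_TEMPLATE.format(
        reason=extracted.get("reason", "(not detected)"),
        resolution=extracted.get("resolution", "(not detected)"),
        follow_up=extracted.get("follow_up", "(not detected)"),
    )


def finalize_notes(draft: str, agent_edit: Optional[str] = None) -> str:
    """Keep the AI draft unless the agent supplied a non-empty edited version."""
    if agent_edit and agent_edit.strip():
        return agent_edit.strip()
    return draft
```

Marking missing fields as "(not detected)" rather than guessing makes gaps obvious to the agent during the quick review.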

In our simple demo, we also show a box on the agent’s after-call screen where they can send a text message to the customer following up on the call. We imagined the agent typing something like, “Your flight has been changed as you requested. Here is your new flight number and seat assignment. Have a great trip!” With an AI Agent Assist, however, this message could be filled in by the AI and only previewed by the human agent before sending. Again, this allows the agent to provide superior customer service very efficiently, so they can quickly move on to the next call.
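The key design point is that the AI drafts but the human approves. Here is a minimal Python sketch of that gate, with a fixed template standing in for AI-generated text and an `outbox` list standing in for a real SMS API; `FollowUpDraft`, `approve`, `send_sms`, and the sample phone number and flight details are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class FollowUpDraft:
    to: str
    body: str
    approved: bool = False


def draft_follow_up(phone: str, flight_number: str, seat: str) -> FollowUpDraft:
    # In a real system this text would come from the AI service; here it is a template.
    body = (
        "Your flight has been changed as you requested. "
        f"Your new flight is {flight_number}, seat {seat}. Have a great trip!"
    )
    return FollowUpDraft(to=phone, body=body)


def approve(draft: FollowUpDraft, edited_body: Optional[str] = None) -> FollowUpDraft:
    """The agent previews the draft, optionally edits it, and approves it."""
    return replace(draft, body=edited_body or draft.body, approved=True)


def send_sms(draft: FollowUpDraft, outbox: List[Tuple[str, str]]) -> None:
    # `outbox` stands in for a call to a real SMS provider.
    if not draft.approved:
        raise PermissionError("The agent must approve the message before it is sent.")
    outbox.append((draft.to, draft.body))
```

Making the draft immutable means approval always produces a new object, so an unapproved draft can never be flipped to approved by accident.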

All of this information comes from an AI/ML service reading and understanding the call transcription. Speeding up or even eliminating the agent’s post-call work is not all it can do. The summaries and key information can be stored in your data lake for further aggregate post-call analysis to see trends across your contact center.
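As a small example of that aggregate analysis, the Python sketch below assumes each stored call summary is a record with a `topic` and an average customer `sentiment` score; that schema is hypothetical. It counts how often each topic comes up and computes the average sentiment per topic, which could flag, say, a frequent call reason that consistently leaves customers unhappy.

```python
from collections import Counter
from statistics import mean
from typing import Dict, List, Tuple


def contact_center_trends(
    call_summaries: List[Dict],
) -> Tuple[List[Tuple[str, int]], Dict[str, float]]:
    """Aggregate per-call summaries into topic counts and average sentiment per topic."""
    topic_counts = Counter(summary["topic"] for summary in call_summaries)
    avg_sentiment = {
        topic: mean(s["sentiment"] for s in call_summaries if s["topic"] == topic)
        for topic in topic_counts
    }
    # most_common() orders topics from most to least frequent.
    return topic_counts.most_common(), avg_sentiment
```

In practice this query would run inside the data lake’s own engine over many thousands of calls; the in-memory version only shows the shape of the computation.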

In Amazon’s keynote, Dilip Kumar, VP of AWS Applications, talked about how AI in the enterprise will help organizations to “deliver faster, predict abnormalities, personalize messaging, generate customer insights, and give agents the right tools to succeed.”

We are probably only just beginning to understand the different ways that AI can be used in the contact center and the enterprise. It’s definitely here to stay!

AI Agent Assist for Sales and Support

In my examples above, I’ve mainly talked about a customer support scenario. AI Agent Assist can be equally valuable in sales situations.

My colleague Dan Nordale at Symbl.ai referred to this as “optimizing the seller’s environment” in a recent conversation. By this, he meant that a properly trained AI/ML model can provide additional product information to assist the seller during the sale. This is similar to the Google example above of helping a customer buy a bicycle online.

An AI Agent Assist can help an agent compose follow-up emails after a sales call, capture next steps and agreed-upon actions, set follow-up appointments, quickly research and compile the data sheets that the sales representative needs to close the deal, and more. Just like our support agent who needs to quickly move on to the next call, our sales agent needs to quickly complete post-call actions and log what they learned from the prospect, so that they can move on to the next potential sale.

AI Assistance Across the Enterprise

This sort of AI-driven assistance will soon impact all of our lives, regardless of whether we work in an enterprise contact center. It may not replace your job, but it will help you be more efficient in your work. The resulting gains in efficiency and productivity will help enterprises in many ways beyond the contact center.

If you watch the full Google keynote, you’ll also see demos of taking notes during a business meeting. An AI assistant then helps you quickly follow up, build a related presentation (including custom graphics), and more, all based on the key information extracted from the conversations in that meeting.

Conclusion

We’re already applying AI and ML services to our work at WebRTC.ventures. We’re helping our clients go beyond building video and communications applications, and helping them to build intelligent communications apps. One of our more musically talented engineers even used ChatGPT to help write a song about our regular webinar series, WebRTC Live!

If you would like to explore how to build your own intelligent communications application, or integrate any of the services mentioned above into your enterprise contact center, contact our team. We’ll be happy to apply our expertise to your unique needs! We can provide a wide range of services from consulting and assessments, to custom development, integration, testing and managed services.