AWS re:Invent 2024 brought together nearly 70,000 attendees in Las Vegas, reaffirming AWS’s status as the leading cloud provider. This year’s conference was overflowing with groundbreaking announcements and innovations that are set to shape the future of cloud technology.

As WebRTC.ventures is a member of the AWS Partner Network (APN) and a Systems Integration Partner for the Amazon Chime SDK, I was excited to immerse myself in this vibrant atmosphere of learning and collaboration and to network with other AWS partners from around the world.

Here’s a recap of some of the noteworthy announcements, talks that sparked my interest, innovations that affect our work with real-time communication applications, standout AWS Partners and Expo highlights, and overall exciting AWS features. (You can also watch talks on demand.)

Headline Announcements

AWS made significant strides in AI with the launch of Amazon Nova, a new family of foundation models that extends Amazon Bedrock's capabilities. Nova lets developers deploy highly scalable generative AI models without the need for fine-tuning, and the models are optimized for natural language processing and multimodal tasks. These innovations underscore AWS's commitment to lowering barriers for companies seeking to integrate AI without extensive data science expertise.

Additionally, AWS introduced the Trainium Next-Gen AI Chip. Coupled with new SageMaker Studio features, businesses can now train, deploy, and optimize AI models more cost-effectively.

AWS also highlighted advances in AI cost efficiency and security with agentic AI capabilities featuring Anthropic's Claude 3.5, which improve task management in multi-agent systems while prioritizing safe generative AI practices.

Innovations That Affect Real-Time Communications

Bedrock Latency-Optimized Inference

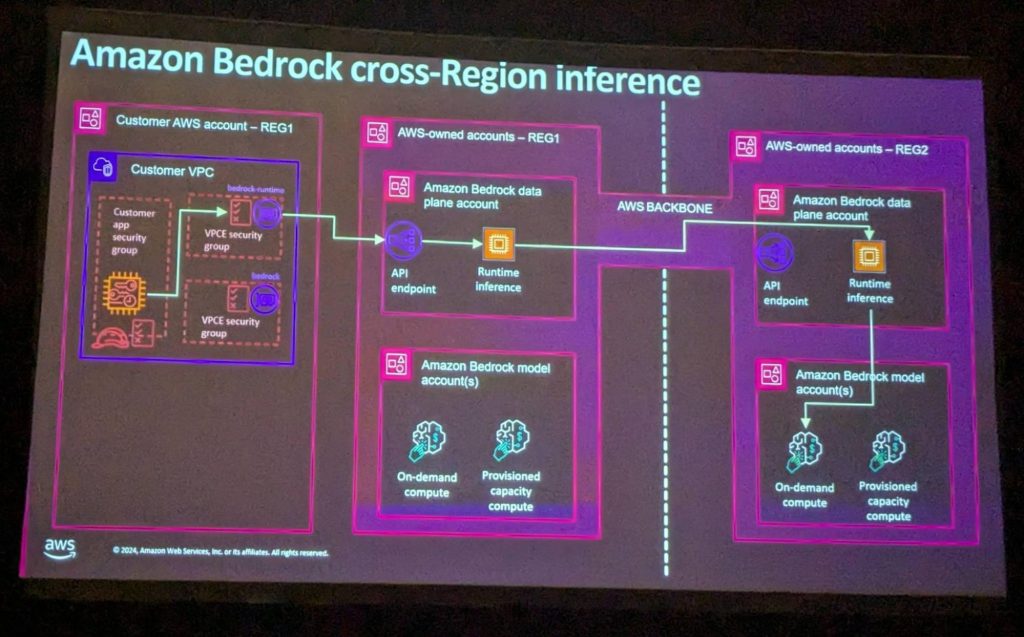

This feature delivers inference with minimal latency, even without fine-tuning, and is now available in the US East region. That matters for applications such as virtual agents in live calls or real-time analytics.
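As a rough illustration, here is how a virtual agent might request latency-optimized inference through the Bedrock Converse API with boto3. Treat it as a sketch under assumptions: the model ID, the `us-east-2` region, and the helper names are ours, and you should confirm in the Bedrock documentation which models and regions support the `performanceConfig` latency option and that your boto3 version exposes it.

```python
from typing import Any

# Example model ID (assumption): substitute a model that supports
# latency-optimized inference in your region.
MODEL_ID = "us.anthropic.claude-3-5-haiku-20241022-v1:0"


def build_converse_request(user_text: str, model_id: str = MODEL_ID,
                           max_tokens: int = 256) -> dict[str, Any]:
    """Build keyword arguments for the bedrock-runtime converse() call."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        "inferenceConfig": {"maxTokens": max_tokens},
        # Request the latency-optimized variant instead of "standard".
        "performanceConfig": {"latency": "optimized"},
    }


def ask_virtual_agent(user_text: str, region: str = "us-east-2") -> str:
    """Send one turn of a live-call transcript to Bedrock and return the reply.

    The default region is an assumption; use one where the option is offered.
    """
    import boto3  # imported here so build_converse_request() works without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(user_text))
    return response["output"]["message"]["content"][0]["text"]
```

Keeping the request builder separate from the network call makes it easy to switch `"optimized"` back to `"standard"` and compare response times for the same prompts.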

Amazon Connect Enhancements

- Enhanced privacy controls and data governance measures, including integration with WhatsApp for secure and compliant customer interactions through Amazon Connect.

- AI-powered tools such as Amazon Q, which provide guardrails for customer conversations, sentiment analysis, and agent performance evaluation.

Expansion of AWS Local Zones and S3 storage classes for Dedicated Local Zones

AWS Local Zones extend AWS infrastructure closer to end-users, allowing ultra-low latency applications by deploying compute, storage, and other services in geographically closer locations.
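To see which Local Zones an account can use, one option is EC2's `describe_availability_zones` call with `AllAvailabilityZones=True`. The sketch below (helper name hypothetical) filters such a response down to the Local Zones the account has opted into; it works on the response dictionary, so it can be tried without AWS credentials.

```python
from typing import Any


def local_zones(response: dict[str, Any], opted_in_only: bool = True) -> list[str]:
    """Return Local Zone names from a describe_availability_zones() response."""
    names = []
    for zone in response.get("AvailabilityZones", []):
        # Skip regular Availability Zones and Wavelength Zones.
        if zone.get("ZoneType") != "local-zone":
            continue
        # Local Zones must be opted into before resources can be launched there.
        if opted_in_only and zone.get("OptInStatus") != "opted-in":
            continue
        names.append(zone["ZoneName"])
    return sorted(names)
```

In practice you would pass it the result of `boto3.client("ec2", region_name="us-west-2").describe_availability_zones(AllAvailabilityZones=True)`, with the region chosen to match where your users are.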

Other AWS Features We Are Also Excited About

MemoryDB Multi-Region Support

MemoryDB Multi-Region is well suited to real-time applications, and MemoryDB is increasingly being used as a cache layer for LLM inference and Retrieval-Augmented Generation (RAG).
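A common way to use a Redis-compatible store like MemoryDB as an LLM cache is the cache-aside pattern. The minimal sketch below assumes exact-match caching keyed on a hash of the model ID and the whitespace-normalized prompt; the helper names and the one-hour TTL are illustrative assumptions, and semantic caching by vector similarity would need a different lookup.

```python
import hashlib
from typing import Callable, Optional, Protocol, Union


class KeyValueClient(Protocol):
    """The subset of a redis-py style client that this sketch relies on."""

    def get(self, key: str) -> Optional[Union[bytes, str]]: ...

    def set(self, key: str, value: str, ex: Optional[int] = None) -> object: ...


def cache_key(model_id: str, prompt: str) -> str:
    """Hash the model ID plus the whitespace-normalized prompt into a cache key."""
    normalized = " ".join(prompt.split())
    digest = hashlib.sha256(f"{model_id}\n{normalized}".encode("utf-8")).hexdigest()
    return f"llm-cache:{digest}"


def cached_completion(client: KeyValueClient, model_id: str, prompt: str,
                      generate: Callable[[str], str], ttl_seconds: int = 3600) -> str:
    """Return a cached answer if present; otherwise call the model and cache it."""
    key = cache_key(model_id, prompt)
    hit = client.get(key)
    if hit is not None:
        # redis-py returns bytes unless the client uses decode_responses=True.
        return hit.decode("utf-8") if isinstance(hit, bytes) else hit
    answer = generate(prompt)
    client.set(key, answer, ex=ttl_seconds)  # let stale answers expire after the TTL
    return answer
```

With redis-py, `client` could be a `redis.cluster.RedisCluster` connected over TLS to your MemoryDB cluster endpoint, and `generate` any function that calls your model.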

Optimized, Cost-Efficient Small Language Models (SLMs)

Fine-tuned from large language models (LLMs), these models are well suited to targeted applications. More on this below.

Amazon EKS Hybrid Nodes Support

For teams running workloads both on-premises and in AWS, EKS Hybrid Nodes let on-premises servers join an Amazon EKS cluster as worker nodes, adding flexibility and scalability.

Talks That Sparked Our Interest

How Meta Tests Using AWS Device Farm

This session explored performance testing and how factors like CPU throttling and temperature can affect real-world app performance, offering insights we can adapt to WebRTC testing.
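One way we might adapt this to WebRTC testing is to compare frame rates from a cool-device run against a long, heat-soaked run of the same call. The sketch below assumes you have already collected per-second `framesPerSecond` values (for example from `getStats()` on the receiving side); the function names, the 15% threshold, and the choice of the 10th percentile are illustrative assumptions, not figures from the talk.

```python
from dataclasses import dataclass
from statistics import median, quantiles


@dataclass
class RunSummary:
    median_fps: float
    p10_fps: float  # 10th percentile: how bad the worst stretches of the call get


def summarize(fps_samples: list[float]) -> RunSummary:
    """Summarize a run; needs at least two samples."""
    # quantiles(n=10) returns the 9 decile cut points; index 0 is the 10th percentile.
    p10 = quantiles(fps_samples, n=10)[0]
    return RunSummary(median_fps=median(fps_samples), p10_fps=p10)


def throttling_regression(baseline: list[float], sustained: list[float],
                          max_drop: float = 0.15) -> bool:
    """True if the sustained run's p10 FPS fell more than max_drop below baseline."""
    base, hot = summarize(baseline), summarize(sustained)
    if base.p10_fps <= 0:
        raise ValueError("baseline run has no usable frame rate")
    drop = (base.p10_fps - hot.p10_fps) / base.p10_fps
    return drop > max_drop
```

Looking at the low percentile rather than the average is deliberate: thermal throttling tends to show up as stretches of dropped frames that an average can hide.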

Harnessing Gen AI in Telecom

This talk showcased an AI-powered adaptive education example tailored to student preferences, and emphasized AI gateways, such as AWS's LLM Gateway, as crucial for secure, flexible, and scalable access to AI models. These gateways support multi-model setups, enforce usage policies, and enable customizations to meet specific client needs. Explore more in the AWS LLM Gateway project.
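To make the gateway idea concrete, here is a minimal sketch of two policy checks a gateway might enforce: which models a client may use and how many tokens it may consume. It is not the AWS LLM Gateway project's API; the class names, the word-count token estimate, and the in-memory counters are hypothetical simplifications.

```python
from dataclasses import dataclass, field
from typing import Callable

Backend = Callable[[str], str]  # hypothetical: prompt in, completion out


@dataclass
class ClientPolicy:
    allowed_models: set[str]
    monthly_token_quota: int
    tokens_used: int = 0


@dataclass
class Gateway:
    backends: dict[str, Backend]  # model name -> function that calls that model
    policies: dict[str, ClientPolicy] = field(default_factory=dict)

    def invoke(self, client_id: str, model: str, prompt: str) -> str:
        policy = self.policies.get(client_id)
        if policy is None:
            raise PermissionError(f"unknown client {client_id!r}")
        if model not in policy.allowed_models:
            raise PermissionError(f"{client_id!r} may not use {model!r}")
        # Crude token estimate (word count); a real gateway would use the
        # model's tokenizer or the usage figures returned by the provider.
        cost = len(prompt.split())
        if policy.tokens_used + cost > policy.monthly_token_quota:
            raise RuntimeError(f"{client_id!r} exceeded its token quota")
        policy.tokens_used += cost
        return self.backends[model](prompt)
```

Because every request passes through `invoke`, the same choke point is where per-client customizations, logging, or model fallbacks could be added.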

Understanding the Security and Privacy Controls within Amazon Bedrock

Developing Telco SLMs and Gen AI Architecture Patterns for SMBs

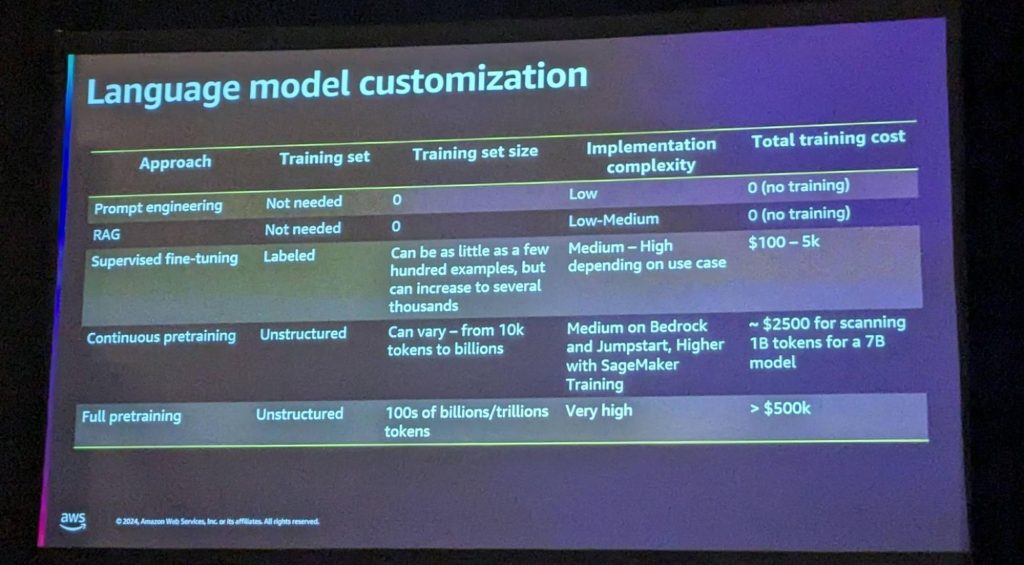

Both talks covered fine-tuning foundation models to produce small language models (SLMs), an approach that enables precise adaptation to specific use cases.

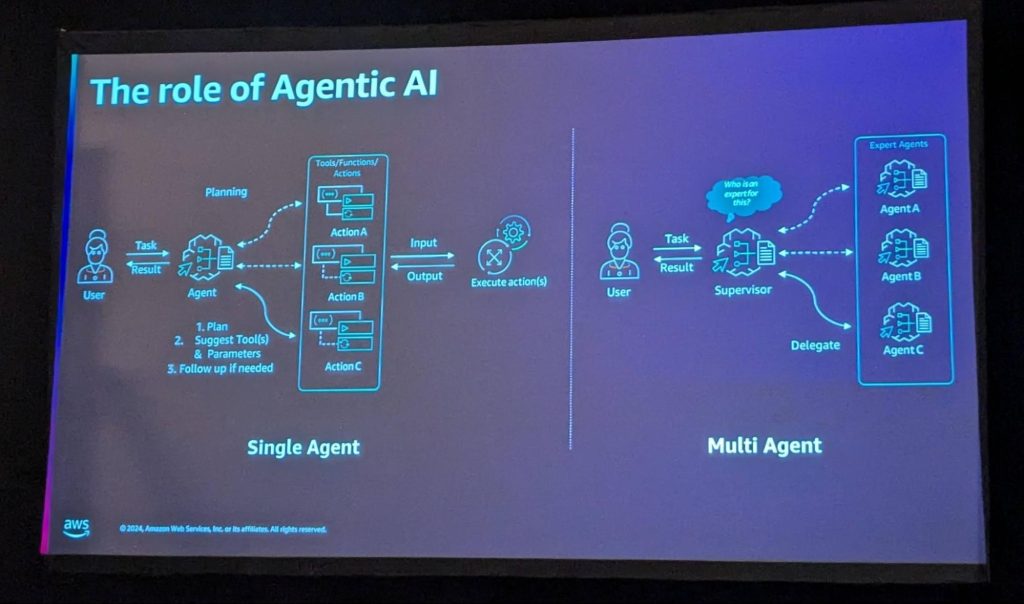

In addition, agentic AI leverages fine-tuned models through single or multi-agent systems. Single agents handle tasks by planning, suggesting tools, and executing actions, while multi-agent systems delegate tasks to specialized agents via a supervisor. This approach enhances scalability and task-specific expertise while potentially reducing cost by using SLMs when possible.
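The supervisor pattern can be sketched as a registry of specialists, each backed by its own model, with a general-purpose LLM as the fallback. In this hypothetical sketch the caller supplies the task type and the agent names are made up; a real supervisor would usually classify the request itself, often with an LLM.

```python
from dataclasses import dataclass
from typing import Callable

Model = Callable[[str], str]  # hypothetical: prompt in, completion out


@dataclass
class Agent:
    name: str
    handles: set[str]  # task types this specialist accepts
    model: Model       # e.g. a fine-tuned SLM for one narrow task


class Supervisor:
    def __init__(self, specialists: list[Agent], fallback: Model):
        self.specialists = specialists
        self.fallback = fallback  # general-purpose LLM for anything unmatched

    def route(self, task_type: str) -> tuple[str, Model]:
        """Prefer a cheaper specialist; fall back to the LLM otherwise."""
        for agent in self.specialists:
            if task_type in agent.handles:
                return agent.name, agent.model
        return "generalist-llm", self.fallback

    def run(self, task_type: str, prompt: str) -> tuple[str, str]:
        name, model = self.route(task_type)
        return name, model(prompt)
```

Returning the agent name alongside the answer makes it easy to measure how often requests are served by an SLM, which is where the cost savings come from.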

There are different degrees of language model customization, depending on your budget, the data available, and the stage of your AI project.
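As an illustration of how those three factors might drive the choice, here is a hypothetical heuristic. The ordering (prompt engineering, RAG, fine-tuning, continued pre-training) reflects a common progression, but the specific inputs, budget labels, and the 1,000-example threshold are assumptions made for the sake of the example.

```python
from enum import Enum


class Approach(Enum):
    PROMPT_ENGINEERING = "prompt engineering"
    RAG = "retrieval-augmented generation"
    FINE_TUNING = "fine-tuning"
    CONTINUED_PRETRAINING = "continued pre-training"


def suggest_approach(stage: str, has_documents: bool, labeled_examples: int,
                     large_unlabeled_corpus: bool, budget: str) -> Approach:
    """Illustrative heuristic only; thresholds and labels are assumptions."""
    if stage == "prototype":
        # Early on, iterate on prompts before investing in data or training.
        return Approach.PROMPT_ENGINEERING
    if budget == "high" and large_unlabeled_corpus:
        # Lots of raw domain text and budget: adapt the model's domain knowledge.
        return Approach.CONTINUED_PRETRAINING
    if budget in ("medium", "high") and labeled_examples >= 1000:
        # Enough labeled pairs to teach a specific task or style.
        return Approach.FINE_TUNING
    if has_documents:
        # Ground answers in existing documents without training.
        return Approach.RAG
    return Approach.PROMPT_ENGINEERING
```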

Standout AWS Partners and Expo Highlights

AWS’s partner ecosystem showcased cutting-edge solutions:

- Anthropic and Hugging Face: Demonstrated seamless integrations with Amazon Bedrock, making it easier to deploy powerful models with just a few clicks.

- AI21 Labs’ Jamba Model: This LLM supports larger context windows (up to 5x more text than GPT models), opening new possibilities for extended text analysis.

- Pinecone: Announced upcoming local development support, a game-changer for vector database use in RAG workflows.

- Ventra: Showcased SOC/HIPAA-compliant solutions optimized for security-sensitive industries.
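For readers newer to RAG, the retrieval step that a vector database handles can be sketched with an in-memory index and cosine similarity, the kind of lightweight stand-in that is handy during local development. This is not Pinecone's API; the class and method names are hypothetical, and in a real workflow the vectors would come from an embedding model.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Hypothetical stand-in for a vector database during local development."""

    def __init__(self) -> None:
        self.items: dict[str, tuple[list[float], str]] = {}

    def upsert(self, doc_id: str, vector: list[float], text: str) -> None:
        self.items[doc_id] = (vector, text)

    def query(self, vector: list[float], top_k: int = 3) -> list[tuple[str, float]]:
        """Return (doc_id, score) pairs for the top_k most similar documents."""
        scored = [(doc_id, cosine(vector, vec)) for doc_id, (vec, _) in self.items.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


def build_prompt(index: InMemoryIndex, question: str, question_vec: list[float]) -> str:
    """Put the best-matching documents into the prompt sent to the LLM."""
    context = "\n".join(index.items[doc_id][1] for doc_id, _ in index.query(question_vec))
    return f"Context:\n{context}\n\nQuestion: {question}"
```

A brute-force scan like this is fine for a few thousand documents on a laptop; a hosted vector database earns its keep with approximate search, filtering, and scale.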

We had the opportunity to engage with solution architecture specialists from the Bedrock, Connect, and database service teams, collaborating on solutions to our challenges in real-time communication and AI integration.

Networking and Partnerships

As CTO of WebRTC.ventures, attending AWS re:Invent 2024 was an incredible experience. I engaged in valuable discussions with partners from the US, EMEA, and LATAM regions, exploring potential collaborations and gaining insights into the latest industry trends. These interactions are crucial for our continuous growth and innovation in real-time communication solutions.

Transform your real-time communication solutions with WebRTC.ventures, an AWS Partner!