What’s behind the success of an AI-powered WebRTC application, or any product for that matter? To showcase the power of AI and WebRTC as well as the steps behind creating a great product, we put together an all-star internal team to create a proof of concept for an on-the-go, real-time language translation application.

Polybot.ai combines the power of Generative AI and Large Language Models with the real-time communication and media stream manipulation capabilities of WebRTC. Polly, as we like to call her, runs right within web browsers and is ready to integrate into the daily routines of individuals and businesses alike. She is aimed at business professionals, travelers, students, patients, and anyone else who needs quick, accurate translations on the go.

In later posts, we will guide you through Polly’s product development. We’ll start with brand strategy and the design of a polished, modern UX/UI, move on to how to build effective prompts that tell the LLM what to do, and finish with the technical details of integrating each component into a web application (still to come).

But first …

Meet Polly

Imagine that you walk into a hospital or tourism agency in a foreign country whose language you don’t speak. No problem! Take out your mobile phone (any phone will do; there is no need for the newest high-end, AI-enabled device), open your browser, speak to the screen, and let a GenAI-powered real-time translator speak to the representative on your behalf. Not only that: the representative can answer back into your device and have their reply translated for you as well.

Polly illustrates how WebRTC and GenAI can be combined to achieve this functionality. See it in action in the video below.

Behind Polly

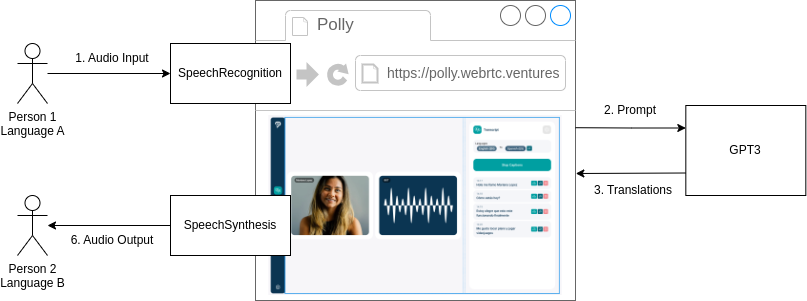

There are multiple components behind Polly.

First, we leverage the Web Speech API’s SpeechRecognition interface to transcribe the user’s audio. All of this happens right within the browser (but keep in mind that some browsers, like Chrome, rely on an external server-side process, so this functionality is not available offline). You can also use a third-party service for this, such as our partner, Symbl.ai.
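To make the transcription step more concrete, here is a minimal sketch of how SpeechRecognition could be wired up. It is not Polly’s actual code: the `startTranscription` helper, the `RecognitionLike` type and the `scope` parameter (which only exists so the function can be tried outside a browser) are names we made up for illustration.

```typescript
// Only the parts of SpeechRecognition this sketch touches, declared locally
// so the example does not depend on a particular version of the DOM typings.
interface RecognitionLike {
  lang: string;
  continuous: boolean;
  interimResults: boolean;
  onresult: ((event: any) => void) | null;
  onerror: ((event: any) => void) | null;
  start(): void;
}

// Hypothetical helper: starts continuous recognition and reports each
// finalized phrase. Returns null when the interface is not available.
function startTranscription(
  lang: string,
  onFinal: (text: string) => void,
  onError: (code: string) => void = () => {},
  scope: any = globalThis,
): RecognitionLike | null {
  // Chrome exposes the constructor under a webkit prefix.
  const Ctor = scope.SpeechRecognition ?? scope.webkitSpeechRecognition;
  if (!Ctor) return null;

  const recognition: RecognitionLike = new Ctor();
  recognition.lang = lang; // BCP 47 tag, for example "es-ES"
  recognition.continuous = true; // keep listening across pauses
  recognition.interimResults = false; // only deliver finalized phrases

  recognition.onresult = (event) => {
    // event.results accumulates; start at the first result that changed.
    for (let i = event.resultIndex; i < event.results.length; i++) {
      const result = event.results[i];
      if (result.isFinal) onFinal(result[0].transcript.trim());
    }
  };
  recognition.onerror = (event) => onError(event.error);

  recognition.start();
  return recognition;
}
```

In a page, you might call `startTranscription("es-ES", (text) => console.log(text))` from a button click, since browsers ask for microphone permission before recognition starts. A `null` return value is the cue to fall back to a third-party transcription service.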

As part of this example, we also use getUserMedia to show a video stream of the user. For now, this serves only as a visual reference, but stay tuned for a future post where we use the concepts explained here for a real-time conversation involving video and audio transcriptions. 😉
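The video preview can be sketched roughly as follows. This is an illustration under our own assumptions rather than the application’s code: `showLocalPreview` and `VideoLike` are hypothetical names, and we request video only on the assumption that speech recognition handles microphone access by itself.

```typescript
// Structural stand-in for a <video> element, so the sketch can be
// exercised with a stub as well as with a real HTMLVideoElement.
interface VideoLike {
  srcObject: unknown;
  muted: boolean;
  play(): Promise<void>;
}

// Hypothetical helper: asks for the camera and attaches the stream
// to the given video element as a local preview.
async function showLocalPreview(
  video: VideoLike,
  mediaDevices: any = (globalThis as any).navigator?.mediaDevices,
): Promise<unknown> {
  if (!mediaDevices?.getUserMedia) {
    throw new Error("getUserMedia is not available in this context");
  }
  // Assumption: video only, because the preview is just a visual reference.
  const stream = await mediaDevices.getUserMedia({ video: true, audio: false });
  video.srcObject = stream;
  video.muted = true; // a local preview should never play audio back
  await video.play();
  return stream;
}
```

Keep in mind that browsers only expose getUserMedia in secure contexts (HTTPS or localhost), and that the returned promise rejects if the user denies camera permission.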

Once transcriptions are available, they are sent to OpenAI’s GPT-3 LLM, which is instructed to identify the language of the input and translate it into the second language that the user entered beforehand.
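As a rough idea of what that request might look like, the sketch below builds a body for OpenAI’s Chat Completions endpoint and reads the translation from the response. The prompt wording, the `gpt-3.5-turbo` model name and the helper names `buildTranslationRequest` and `translate` are our assumptions for illustration only; the real prompt is the subject of a separate post in this series.

```typescript
interface ChatMessage {
  role: "system" | "user";
  content: string;
}

interface TranslationRequest {
  model: string;
  temperature: number;
  messages: ChatMessage[];
}

// Hypothetical helper: the prompt text and default model are assumptions.
function buildTranslationRequest(
  transcript: string,
  targetLanguage: string,
  model = "gpt-3.5-turbo",
): TranslationRequest {
  return {
    model,
    temperature: 0, // favor consistent translations over creative ones
    messages: [
      {
        role: "system",
        content:
          "You are a translator. Detect the language of the user's message " +
          `and translate it into ${targetLanguage}. Reply with the translation only.`,
      },
      { role: "user", content: transcript },
    ],
  };
}

// Sends the request and returns the translated text.
// fetchFn is injectable so the function can be tried without network access.
async function translate(
  transcript: string,
  targetLanguage: string,
  apiKey: string,
  fetchFn: any = (globalThis as any).fetch,
): Promise<string> {
  const response = await fetchFn("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify(buildTranslationRequest(transcript, targetLanguage)),
  });
  if (!response.ok) {
    throw new Error(`Translation request failed with status ${response.status}`);
  }
  const data = await response.json();
  return data.choices[0].message.content.trim();
}
```

Calling the API straight from the browser would expose the API key to every visitor, so in practice you would likely route this request through your own backend and keep the key on the server.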

Finally, the output from the LLM is displayed on the screen, and we once again rely on the Web Speech API, this time using its SpeechSynthesis interface to read the translation aloud.
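Reading the translation aloud could look something like the sketch below. Again, this is an illustration and not the application’s code: `speak` is a hypothetical helper, and the `scope` parameter only exists so that the function can be tried with stubs outside a browser.

```typescript
// Hypothetical helper: speaks the text in the given language.
// Returns false when speech synthesis is not available.
function speak(text: string, lang: string, scope: any = globalThis): boolean {
  const synth = scope.speechSynthesis;
  const Utterance = scope.SpeechSynthesisUtterance;
  if (!synth || !Utterance) return false;

  const utterance = new Utterance(text);
  utterance.lang = lang; // BCP 47 tag, for example "fr-FR"

  // Prefer an installed voice that matches the language exactly.
  // getVoices() may return an empty list until voices have loaded.
  const voice = synth.getVoices().find((v: any) => v.lang === lang);
  if (voice) utterance.voice = voice;

  // Design choice for this sketch: drop anything still queued,
  // so the newest translation is always the one being read.
  synth.cancel();
  synth.speak(utterance);
  return true;
}
```

For instance, `speak("Où est la pharmacie ?", "fr-FR")` would read the phrase with a French voice if the device has one installed, and with the browser’s default voice otherwise.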

All of this is presented in a beautifully designed, intuitive, and user-friendly interface that lets you focus on the conversation instead of figuring out how to use the tool.

More on Polly

Posts in this series:

- AI + WebRTC Product Development: A Blueprint for Success

- Developing a Brand Strategy and Identity for an AI-Powered WebRTC Application

- Prompt Engineering for an AI Translator Bot

- How to Build a Translator Bot Using Web Speech API and GPT (still to come)

When you are ready to add useful and thoughtful AI into your video or voice communications application, call on WebRTC.ventures. Our team has deep expertise in communications protocols like WebRTC, as well as experience integrating it with various AI and ML services. Would you like to learn more, and explore ways to build AI into your video or voice communications application? Contact us today!