WebRTC is an open framework for the web that enables Real-Time Communications (RTC) capabilities in apps and in the browser. In 2021, WebRTC was finally officially standardized. WebRTC is everywhere these days, and certainly proved itself incredibly useful during the pandemic. But still, it’s not easy to build with WebRTC. Let’s look at why.

Two APIs to rule them all

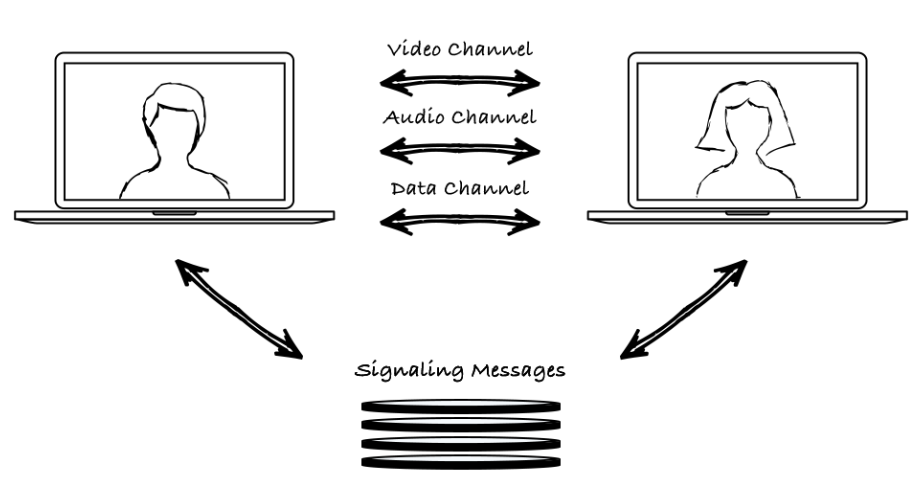

WebRTC is based on just two main APIs: the MediaStream API and the RTCPeerConnection API. Seems simple, right? And it is … at the beginning.

WebRTC in its simplest form

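1) Capture/Manage media (MediaStream API)

```javascript
// Ask the user for access to the microphone and camera
const localStream = await navigator.mediaDevices.getUserMedia({ audio: true, video: true });
```

2) Send/Receive media (RTCPeerConnection API)

```javascript
const configuration = { iceServers: [{ urls: 'stun:stun.example.org' }] }; // placeholder STUN server
const pc1 = new RTCPeerConnection(configuration);

// Receive incoming media: fires when the remote peer's tracks arrive
pc1.ontrack = event => {
  remoteVideo.srcObject = event.streams[0]; // remoteVideo: a <video> element on the page
};

// Add the local tracks before creating the offer so the offer describes them
localStream.getTracks().forEach(track => pc1.addTrack(track, localStream));

const offer = await pc1.createOffer();
await pc1.setLocalDescription(offer);

// Send the offer to the remote peer and receive its answer over your own
// signaling channel, then apply it with pc1.setRemoteDescription(answer)
```

Here’s where it gets complicated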

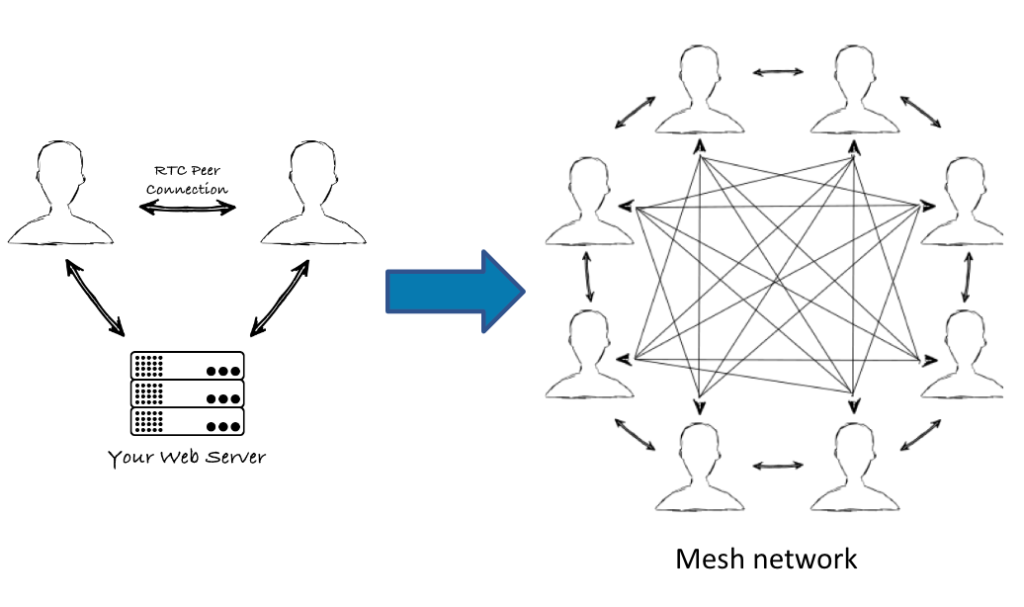

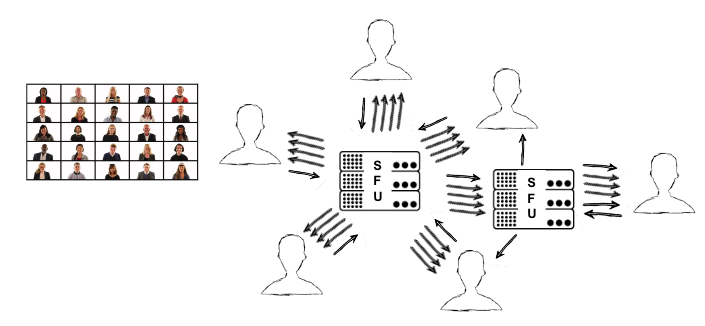

Complexity rears its head as soon as a call has more than two participants. When several users connect to each other peer to peer, things get messy.

As the diagram above shows, connecting eight users peer to peer requires many connections. The result is a mesh (and a mess!), known as a Mesh Architecture. Each user’s media stream must be uploaded to each of the other seven participants, and each of their streams must be downloaded in turn. You can imagine how hard it is for computers or mobile devices to keep up with that traffic.

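Per participant, this relationship is linear, as represented in the chart below: in a mesh of n users, each user uploads n - 1 streams and downloads n - 1. Across the whole call, that adds up to n(n - 1) streams.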

The blue line represents the number of streams/connections needed with a mesh architecture, the yellow line the number needed with an SFU architecture, and the red line the number needed with an MCU architecture. More about these architectures below.

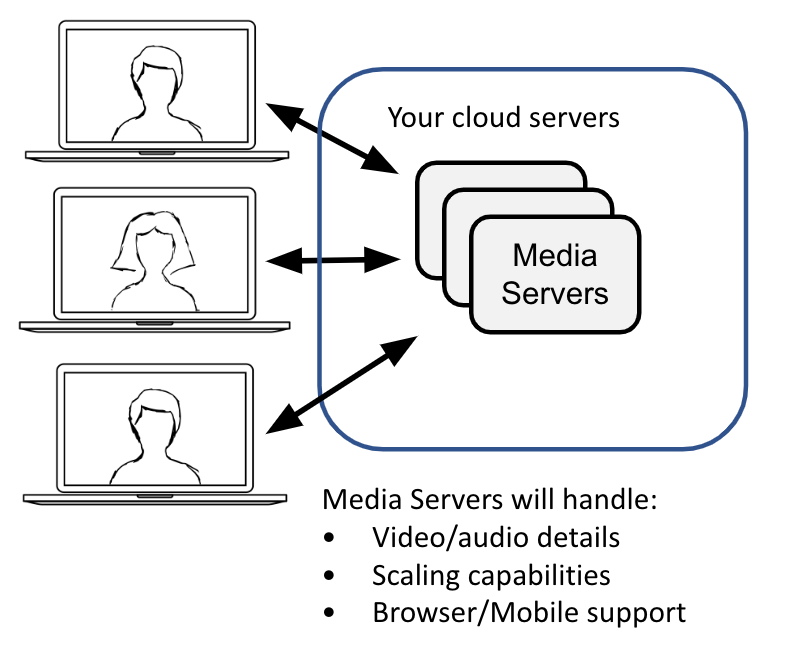
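To make the mesh numbers concrete, here is a small JavaScript sketch that counts streams in a full mesh. It assumes every participant sends exactly one stream to, and receives one stream from, every other participant; the function name `meshStreams` is hypothetical, chosen only for illustration.

```javascript
// Count media streams in a full-mesh call with n participants.
// Assumption: each participant sends one stream to every other participant
// and receives one stream from each of them.
function meshStreams(n) {
  const uploadsPerUser = n - 1;   // one outgoing stream per remote peer
  const downloadsPerUser = n - 1; // one incoming stream per remote peer
  // Every upload is some other user's download, so the call carries n * (n - 1) streams.
  const totalStreams = n * uploadsPerUser;
  return { uploadsPerUser, downloadsPerUser, totalStreams };
}

// The eight-user mesh from the diagram above
console.log(meshStreams(8)); // { uploadsPerUser: 7, downloadsPerUser: 7, totalStreams: 56 }
```

Doubling the call to 16 users roughly doubles each device's load (15 streams up, 15 down) but roughly quadruples the total, to 240 streams.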

Rise of the media server

The complexity inherent in mesh networks gave rise to new architectures built around media servers: intermediate servers that sit between participants and help distribute traffic. Media servers handle audio/video processing, scaling, and browser and mobile support.

The most common media server architectures used in WebRTC are SFUs (Selective Forwarding Units) and MCUs (Multipoint Control Units). These are described in detail in this somewhat old, but still very informative, blog post: WebRTC Media Servers – SFUs vs MCUs
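As a rough comparison, the sketch below counts the streams each client and the media server handle in an SFU call and in an MCU call. It assumes one stream per participant, an SFU that forwards every other participant's stream to each client unchanged, and an MCU that mixes everything into one composite stream per client. The function names are hypothetical, and real deployments (simulcast, audio-only participants, forwarding only the active speakers) will differ.

```javascript
// Stream counts for a call with n participants.
// Assumptions: one stream per participant; the SFU forwards each stream
// unchanged to every other participant; the MCU decodes, mixes, and
// re-encodes one composite stream per participant.
function sfuStreams(n) {
  return {
    clientUp: 1,            // each client publishes once, to the SFU
    clientDown: n - 1,      // and receives every other participant's stream
    serverIn: n,
    serverOut: n * (n - 1), // the SFU fans each stream out to n - 1 clients
  };
}

function mcuStreams(n) {
  return {
    clientUp: 1,   // each client publishes once, to the MCU
    clientDown: 1, // and receives a single mixed stream
    serverIn: n,
    serverOut: n,  // one composite per client (the mixing is CPU-heavy)
  };
}

for (const n of [2, 8, 50]) {
  console.log(n, sfuStreams(n), mcuStreams(n));
}
```

The trade-off shows up in the numbers: the SFU keeps each client's upload at a single stream, but its outgoing traffic grows as n × (n - 1), while the MCU keeps both client and server stream counts linear at the cost of decoding and re-encoding every stream on the server.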

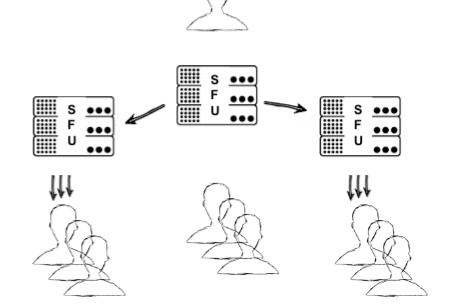

Scaling beyond a single media server

A large server can handle hundreds of media streams, but vertical scaling has its limits, and at some point one server won’t be enough. Horizontal scaling, with servers placed close to users in different regions, is a common approach for production apps that need to handle more than a few hundred concurrent participants.

At that point, it is time to scale horizontally by adding more servers in a cascading architecture. A typical example is a large broadcasting application with one publisher and thousands of subscribers.
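For the broadcasting case, here is a back-of-the-envelope sketch of a two-tier cascade: the publisher sends to an origin SFU, which relays one copy of the stream to each edge SFU, and subscribers connect to the edges. The function name and the per-server limit `maxStreamsPerServer` are hypothetical; a real capacity figure depends on hardware, codecs, and bitrates, and is best found by load testing.

```javascript
// Plan a two-tier broadcast cascade: one origin SFU feeding several edge SFUs.
// Assumption (hypothetical): every server can send at most maxStreamsPerServer
// outgoing streams, and the origin only relays to edges (no direct subscribers).
function planBroadcastCascade(subscribers, maxStreamsPerServer) {
  // Each edge SFU receives one relayed copy and serves up to the limit.
  const edgeServers = Math.ceil(subscribers / maxStreamsPerServer);
  // The origin sends one relayed stream per edge server.
  if (edgeServers > maxStreamsPerServer) {
    throw new Error("Origin would be overloaded; add another relay tier");
  }
  return { originServers: 1, edgeServers, originOutgoingStreams: edgeServers };
}

// 5,000 subscribers with a hypothetical limit of 500 outgoing streams per server
console.log(planBroadcastCascade(5000, 500));
// { originServers: 1, edgeServers: 10, originOutgoingStreams: 10 }
```

Because the origin only sends one stream per edge, its load stays small even as the audience grows; the error branch marks the point where a third tier of relays would be needed.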

Another is a large multiparty video conference. Again, a single server may be enough at first, but as you approach a hundred or more people in the same call, you need to add more servers. You can use one SFU that relays traffic to other SFUs, with each user connecting to multiple SFUs.
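For the multiparty case, the sketch below estimates the load on each SFU when participants are split across several cascaded SFUs. It models a simpler variant than the one described above: each participant connects to a single SFU, and every SFU relays its local participants' streams to every other SFU. The function name and the example split are hypothetical.

```javascript
// Estimate per-SFU stream counts in a cascaded conference.
// Assumptions (hypothetical): each participant publishes one stream to the one
// SFU it is connected to, SFUs are fully meshed with each other, and every
// participant receives every other participant's stream.
function cascadedSfuLoad(participantsPerSfu) {
  const total = participantsPerSfu.reduce((sum, k) => sum + k, 0);
  const sfuCount = participantsPerSfu.length;
  return participantsPerSfu.map((local) => ({
    local,
    fromClients: local,                  // streams published by local users
    fromOtherSfus: total - local,        // remote streams relayed in
    toClients: local * (total - 1),      // each local user gets everyone else
    toOtherSfus: local * (sfuCount - 1), // local streams relayed once per peer SFU
  }));
}

// A 120-person call split across three regional SFUs (hypothetical split)
console.table(cascadedSfuLoad([60, 40, 20]));
```

A single SFU serving all 120 people would send 120 × 119 = 14,280 streams; here the busiest server sends 7,140 to its own clients plus 120 relayed to its peer SFUs, which is why spreading users across servers helps, at the cost of extra relay links between them.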

Scaling WebRTC media servers and distributing user traffic requires orchestration and containers to achieve true horizontal scalability. This will be the topic of my next blog post.

Do you need to manage this complexity yourself?

Of course not! That is why you bring in the experts, like our team at WebRTC.ventures. We can build a custom application directly against the WebRTC standard (aka open source), help you customize an application built on a CPaaS, assess your current architecture for scaling plans, load test your application, and much more. Contact us today!