In part one of this series, I explored the state of Conversational AI and Voice by attending the Voice22 summit in Arlington, Virginia. In this follow-up post, I’ll build on that by exploring how Voice and Video can work together in this multi-modal future.

I’ll use some terms again in this post and briefly define them below. I do recommend reading the previous post for a more complete understanding.

- Agent – A live “real human” customer service representative.

- Customer – The real-life human user of our company’s product or application, who is seeking help on that same product or application.

- Bot – A computer program that can take on some or all of the duties of an Agent, and help the Customer with their question. Bots can have varying levels of sophistication, from a glorified “FAQ” to something that may be hard to distinguish from a human in a virtual context. Like an Agent, Bots can communicate with Customers over different channels, such as text messaging (SMS), text chat, telephone calls, or any other type of real-time or asynchronous chat.

- Voice – A voice-based interface, such as allowing a customer to interact with a Bot over a telephone call or audio chat. For our purposes, we are speaking frequently of customer service, but the Voice industry uses the term more widely to indicate any kind of voice interface. In the case of devices like Amazon’s Alexa, the voice interface is sophisticated enough that no keyboard or other input device is needed.

- Conversational AI – The suite of technologies that facilitate a “natural language” conversation between a customer service agent and a customer. The better the Conversational AI, the more the conversation should flow as if they were speaking in person, or in a traditional phone call.

- Video – Video can take on many forms, such as asynchronous recorded videos, or synchronous live conversations over video chat. Our work at WebRTC.ventures is mainly focused on live video chat applications, and so for our purposes you can assume that all uses of the term Video in this blog post refer to a live video conversation, like a video chat application.

- WebRTC – Web Real Time Communications (WebRTC) is a W3C and IETF standard for allowing peer-to-peer video, audio, and data connections over the internet. Originally created by Google, it is now a widely accepted open source standard that is usable in all major browsers and on mobile devices, and it is the primary way that we build live video applications at WebRTC.ventures.

- Multi-modal – In the Voice industry, this refers to allowing a Bot to explain things to a Customer via different forms of communication, such as displaying a recorded video in addition to a spoken or written explanation of how to perform a task. In this blog post, I’ll expand the term a bit to include the combination of live Video Agents with Bots, although terms like Unified Communications and Omnichannel might also be used in this context.

With those definitions complete, let’s get into discussing the intersection of Voice and Video in a world that may soon be dominated by Conversational AI.

Do humans still matter?

When you see demos of applications that have implemented a very sophisticated Conversational AI, the results can be truly stunning. They make you wonder if soon we will need humans involved in customer service processes at all.

In the last post, I talked about seeing a demo of a funeral home’s Telephone Voice Bot. The Bot was able to understand a thick regional accent and use a Synthetic Voice not only to respond in a localized accent, but even to respond with empathy. While incredibly impressive, it would still be apparent to most Customers that they are speaking with a Bot, not a live human Agent.

You can certainly imagine that with additional improvements in Conversational AI over time, it will be nearly impossible to distinguish between a Conversational AI Bot and a human Agent.

When we reach that point, will humans still matter in customer service?

My answer is an emphatic “Yes!”

Humans will still matter in many customer service interactions, no matter how intelligent a Bot becomes and how much it can learn to interpret human emotions and respond with appropriate empathy.

I make this claim for three reasons:

- Conversational AI will always have some limits. Call me an optimist or a Luddite if you wish, but I think there will always be some limits to how well a Bot can mimic a human, and I’m fine with that. I believe that in high-touch situations, we will always place a value on interaction with a human Agent. In the example of a Voice Bot on a funeral home telephone line, this is a fine way to get some answers to questions, especially off-hours, since a bereaved family member’s emotions can be important to address at any time of day, even if the Bot is limited in what it can do. When I am planning the details of a funeral, however, I will still want the extra comfort of a human who can sympathize with what is ultimately a very human situation.

- Conversational AI will not be accessible to all companies. Even if Conversational AI allows a Bot to be indistinguishable from a human Agent, that doesn’t mean all companies will have equal access to that technology. Conversational AI and the resulting conversational models are not cheap to acquire, train, or maintain. Perhaps someday there will be SaaS-based Conversational AI companies specific to different industries, so that every corner store can afford a Bot subscription tied to their phone number. But it is still unlikely that small businesses can afford a model which is finely tuned to their situation, at least not until self-learning General AI is available in the distant future. This is not what we are talking about with current Conversational AI technology.

- Humans will add differentiating value for a company. For luxury brands or high touch situations, maintaining human Agents will provide a differentiating value to their Customers. It’s true that Conversational AI and Bots will be more efficient than Agents in many cases. They can access a depth of information that a human mind cannot retain and recall as quickly as a computer. But if every Customer interaction was driven purely by concerns about efficiency, we would not have more niche markets like organic local farms, community radio stations, or fancy coffee shops that make pour-over drinks.

Hyper Automation and Human Augmentation

When futurists paint their vision of the utopian (or dystopian) end state of some technology, they often speak in black and white. That’s part of their job description, I suppose. You likely won’t consider me a futurist if I give you nuanced answers like, “it depends.” It sounds a lot more visionary to say that Voice interfaces will dominate the future and no one will ever touch a keyboard again.

That’s fine. Sometimes we need to be nudged by grand visions in order to see past the way we’ve always done things. I’m just personally more pragmatic and see many shades of gray in the future.

In his book Age of Invisible Machines, Robb Wilson talks about the Hyper Automation that Voice interfaces can bring. His description is geared towards how a very knowledgeable Bot with excellent Conversational AI can provide much more value than a human Agent.

Hyper Automation does not have to mean that no human is involved. We can get tremendous benefits from having AI help a human, which is a form of Hyper Augmentation.

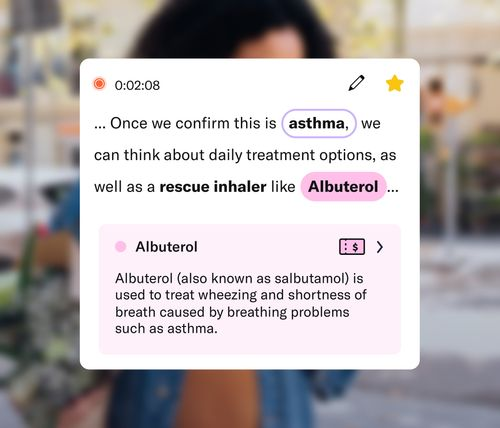

One of my favorite demos that I saw at the Voice conference provides a good example of this. Abridge provides a tool for doctors to “summarize and structure health conversations.”

Dr. Shiv Rao is the CEO and Co-founder. He opened the Abridge app during an onstage conversation with Pete Erickson, the organizer of the Voice conference and founder of MoDev. The app on his phone listened as they had a pretend medical conversation.

Abridge provided a remarkably accurate set of structured notes from their conversation within a few minutes of it concluding, plus a tagged recording which could be shared with both doctor and patient.

The patient’s medical history, medications they discussed, action items they agreed upon, and more were all accurately transcribed by the AI. They were put under the right categories in a summary of the medical visit. This summary was available to the doctor so they would not have to type as many notes – a huge time saver for overworked medical professionals.

In addition, the doctor could share the summary with the patient automatically through Abridge. The app also provides follow-up reminders to the patient based on the notes (e.g., did you get your new prescription filled?).

To me, this is an excellent example of the power of Voice and Conversational AI to augment humans, instead of replacing them.

Four ways Video and Conversational AI can overlap

The Voice conference definitely showed me the advances that have been made in Conversational AI in the last few years. It also got me excited about Voice as an interface in general.

There is a lot of potential overlap between Voice and Video technologies. Here are four ideas to consider:

- Bots and Agents working smoothly together in Customer Service

- Multimodal in Video Chat

- Video Chat with a Bot

- Hyper Augmentation of Video

Let’s explore each in turn.

#1 – Bots and Agents working smoothly together in Customer Service

You are already used to interacting with Bots for Customer Service. Most of the time when you call a customer support telephone number, or you start a text chat on a website, your initial interactions are with a Bot. That Bot may not be very sophisticated. It is still most likely a set of FAQ answers. You must say “Agent” or press “0” to get past the Bot if you need to speak with a human Agent.

With the advances in Conversational AI, this transition from Bot to Agent should be much smoother. You might not even realize it’s happening. In a text chat situation, the Bot may gather initial information and send that contextual information to the Agent, who then joins the text chat to complete the support conversation.

Or, the Bot may detect that you are getting frustrated. It could ask if you would like to speak with an Agent. Or, it might send you there automatically when it realizes it does not know how to handle your situation. Either option will be a more satisfying interaction than repeatedly asking if the Customer would like to hear their main menu options again.

This may be the most obvious way that Voice and Video can overlap right now. The depth and quality of these interactions will continue to improve due to the advances in Conversational AI.
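To make the handoff more concrete, here is a minimal sketch of how a Bot might decide when to bring in an Agent and what context to pass along. Everything in it is an assumption for illustration: the `Conversation` structure, the cue phrases, and the failure threshold are hypothetical, and a real Conversational AI would likely use sentiment or intent models rather than simple keyword matching.

```python
from dataclasses import dataclass, field

# Hypothetical phrases suggesting the Customer is frustrated or wants a human.
FRUSTRATION_CUES = ("agent", "human", "representative", "this is not helping")


@dataclass
class Conversation:
    customer_id: str
    messages: list[str] = field(default_factory=list)  # Customer messages so far
    failed_answers: int = 0  # times the Bot could not answer


def should_escalate(convo: Conversation, max_failures: int = 2) -> bool:
    """Return True when the Bot should hand the Customer to an Agent."""
    # Escalate automatically once the Bot has failed too many times.
    if convo.failed_answers >= max_failures:
        return True
    # Otherwise, look for a cue phrase in the most recent message (naive substring match).
    last = convo.messages[-1].lower() if convo.messages else ""
    return any(cue in last for cue in FRUSTRATION_CUES)


def build_handoff(convo: Conversation) -> dict:
    """Package the context so the Customer does not have to repeat it to the Agent."""
    return {
        "customer_id": convo.customer_id,
        "transcript": list(convo.messages),
        "failed_answers": convo.failed_answers,
    }


convo = Conversation("c-1", ["Where is my order?", "I want to talk to a human"])
if should_escalate(convo):
    handoff = build_handoff(convo)  # would be sent to the Agent's chat console
```

The point of `build_handoff` is that the Agent joins the conversation already knowing what was asked, which is what makes the transition feel smooth to the Customer.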

#2 – Multimodal in Video Chat

Imagine a video chat conversation between two normal humans. Perhaps it’s a business meeting. What would “multi-modal” mean in this context? Common collaboration features like screen sharing, whiteboarding, and file sharing may count, but let’s think a bit more creatively about how a Voice interface could further add to that conversation. Recording and transcription for note taking would certainly reduce the need for loud typing during a meeting.

Let’s take it a bit further.

How about using a Voice interface during the Video Chat? Imagine being able to say a co-worker’s name and asking the meeting tool to invite them to join you in this call. Or, to text them to remind them that the meeting has started.

Perhaps during the call you can use a keyword like “Alexa” and bring up other content into the meeting automatically. “Alexa, search Google Drive for Quarterly Projections and share the document here.”

I haven’t seen a Voice interface built directly into a Video chat before. It could be very interesting!
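As a rough sketch of the idea, the snippet below takes a line of already-transcribed speech, checks for the wake word, and maps it to a meeting action. The command patterns, action names, and the `parse_command` function are all hypothetical; this assumes a separate speech-to-text step has produced the text, and a production system would use a proper intent model rather than regular expressions.

```python
import re
from typing import Optional

WAKE_WORD = "alexa"  # the keyword from the example above; any wake word would do

# Hypothetical command patterns for a meeting tool, each mapped to an action name.
PATTERNS = [
    ("invite", re.compile(
        r"^invite (?P<name>.+?) to (?:join )?(?:this|the) (?:call|meeting)$",
        re.IGNORECASE)),
    ("share_document", re.compile(
        r"^search (?P<source>.+?) for (?P<query>.+?) and share the document here$",
        re.IGNORECASE)),
]


def parse_command(utterance: str) -> Optional[dict]:
    """Turn a transcribed utterance into an action, or None if it is not a command."""
    text = utterance.strip().rstrip(".!?")
    # Expect "<wake word>, <command>"; anything else is ordinary conversation.
    head, sep, rest = text.partition(",")
    if not sep or head.strip().lower() != WAKE_WORD:
        return None
    rest = rest.strip()
    for action, pattern in PATTERNS:
        match = pattern.match(rest)
        if match:
            return {"action": action, **match.groupdict()}
    return None  # wake word heard, but the command was not recognized


command = parse_command(
    "Alexa, search Google Drive for Quarterly Projections and share the document here."
)
```

Requiring the wake word first keeps the tool from acting on ordinary meeting conversation, which matters when several people are speaking.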

#3 – Video Chat with a Bot

Would you ever video chat with a Bot? This is an intriguing question. Conversational AI is on a track where this should be possible. There are a number of initiatives already. I previously mentioned the Ericsson digital human project which is creating photorealistic video avatars. In a business or customer service context, the idea is that even if you know you’re speaking with a bot, you are more likely to consider it a positive experience if the bot is as human as possible. Their digital humans could be paired with your Conversational AI engine to add a human element to the interaction.

There are also a number of AI bots already being demo’d for gaming and the metaverse. Kuki is “an award-winning AI brain designed to entertain humans.” Kuki is a proprietary AI brain which has an API so that it can be embedded in your applications for chat apps and metaverse applications. The website also says Kuki can be white-labeled with different personas to use for your company brand.

Although visually impressive, Kuki is most definitely an avatar. A user won’t be fooled into thinking it’s a real human. That’s a good thing ethically, since it’s important that we always have some idea who we are speaking with. The current state of Kuki is not likely to pass a Turing test and convince you it’s a human, but it certainly is entertaining, as this interview between Kuki and YouTuber Kaden shows.

Other Conversational AIs are already progressing into other areas of human life, such as Replika. Replika can be “your perfect companion – a friend, a partner, or a mentor.” Testimonials talk about how long users have been “together” with their Replika, and say how the robots have been such empathetic friends and nonjudgmental listeners.

What is the point of video chatting with a Bot? If your camera is turned on, machine learning algorithms can analyze your facial features and determine if you are frustrated, happy, or sad. This allows the Bot to better respond to you empathetically. Even in audio-only situations, much of this can be detected from your voice alone. AIs like Replika can be tuned to use these cues to establish a deeper emotional connection with you. Although that makes me a bit uncomfortable, it certainly shows the potential these technologies have.

Whether it’s for the metaverse, social companionship, or just ensuring a better customer service experience, there is a lot of possibility in video chatting with bots. I’m definitely intrigued to see where this goes.

#4 – Hyper Augmentation of Video

In the Abridge demo described above, we saw how hyper augmentation of a Voice conversation could add significant value to a doctor’s visit. The AI takes very good notes and can even organize them with a high degree of accuracy. This reduces the burden on the medical professionals, a big benefit in an age where we have high degrees of burnout in this area.

For the patient and their family members, it also provides a lot of value, including automated reminders related to the visit and the ability to jump back into specific parts of the recording to hear snippets from the real conversation. The recording also provides reassurance that the AI correctly captured the conversation.

Many of the same exact points could apply to a Video Chat since we have the same scenario of human voices being used. You could feed the audio tracks out to an API like Abridge in order to provide the same capabilities.

While Abridge is focused on the medical use case, other APIs exist which could be plugged into a more generic business video chat and provide similar capabilities. One such service that I met at the Voice conference is Sembly.ai, which “records, transcribes and generates smart meeting summaries with meeting minutes.” Sembly already integrates with major video conferencing tools like Zoom and MS Teams. They have partnered with Philips to build it into smart speakers and conference room tooling.

Sembly is a good example of the type of service that our team at WebRTC.ventures could integrate into your video chat tool to provide hyper augmentation. Hyper Augmentation in Video Chat could take on many forms besides note taking. This is an area we will definitely be exploring more with our clients!

Building the future

I’ve listed a number of APIs in this blog post series. There is no doubt that most applications in this space will be a combination of commercial APIs, open-source libraries, and AI engines combined with proprietary algorithms and training data sets. It won’t be easy to put it all together, but the pieces are almost all there for anyone to build the future that you can currently only see on the keynote stage at tech conferences.

Major platforms like Amazon are providing all of the pieces through cloud-based APIs that make the future more accessible. These include the Amazon Chime SDK for communication APIs, Amazon Rekognition for image and video analysis, Amazon SageMaker for building machine learning models, and Amazon Polly for deploying natural sounding human voices in dozens of languages.

The future is Video and Voice – together

At the Voice conference, it was clear that Voice-based interfaces and Conversational AI will play a very large role in the future, whether it is through the metaverse, smart devices in your home or car, or even in websites and mobile applications.

Video will also play a key role in the future. The dramatic growth of live video chat during the pandemic has made an impact on how people think about conducting their work. While we may all be excited to return to more travel and in-person interactions, video chat and remote work are here to stay.

Combine those trends with the next big wave of social interaction – the metaverse – and you can easily imagine a world where Conversational AI becomes a daily part of our lives over both Voice and Video modalities.

Building these things will not be easy. This is where experts like our team at WebRTC.ventures can help. We had years of live video application development experience even before the pandemic. Are you interested in exploring the powers of Voice and Video interfaces? Contact us today!