Interactive, personalized video commerce is shaping the future of online retail. At WebRTC.ventures, we’ve harnessed the power of Agora’s real-time communication platform, OpenAI’s Realtime API, and Simli’s AI-driven avatars to create LiveCart—a next-generation live selling platform that turns watchers into buyers.

Online retailers face several hurdles when trying to replicate the in-store shopping experience:

- Lack of personal interaction leads to lower engagement.

- Product questions often go unanswered, causing cart abandonment.

- Sellers struggle to scale personalized customer experiences.

LiveCart was designed to overcome these obstacles by combining real-time communication, interactive features, and AI-driven selling.

Interested in UX? Check out two related posts on this project: Rethinking UX: Emerging Interfaces for the AI Age and When Humans and AI Share the Interface: A Case Study in Multimodal, Adaptive UX.

A new, smarter way to shop online

LiveCart is built around an engaging, interactive live selling experience:

- Live chat enables real-time conversations with viewers.

- Automated product information is delivered with clear labeling to distinguish AI-driven insights from human interaction.

- Personalized recommendations are generated based on customer behavior.

- Custom product overlays on video streams highlight key details.

- Embedded QR codes make it easier to find more information and check out.

LiveCart provides a seamless experience for both sellers and buyers:

- Buyers join via browser with no downloads required.

- Integrated text chat allows for questions and feedback.

- One-click purchasing is available directly within the stream.

- Voice commands enable hands-free shopping.

- Real-time captions improve accessibility.

Let’s take a look at a quick demo before we move on to the tech:

Integrating Agora, OpenAI Realtime API and Simli to add support for Live Avatars

This post focuses on the technical details of integrating Agora, the OpenAI Realtime API, and Simli to add support for Live Avatars. Stay tuned for further posts on other aspects of the concept, including the emerging principles of AI-interface design.

The application uses Next.js for the frontend and Express for the backend. For live streaming capabilities, it leverages Agora, which not only provides real-time communication features but also offers useful AI integrations.

Let’s walk through the steps to configure these capabilities and enable support for AI Avatars.

Enabling Live Streaming Using Agora

To enable live streaming capabilities using Agora, start by creating an Agora account. Once your account is set up, create a new project and obtain the App ID. Next, install the agora-rtc-react NPM module in your project to proceed.

Note: Agora just announced a fully managed Conversational AI Engine that simplifies adding conversational AI functionality without the need to manage the underlying infrastructure.

Next, initialize the Agora client by setting the mode and the codec. For live streaming, set the mode to live. For the codec, choose a well-supported codec such as vp8. Then, in the Next.js application, pass the client to an AgoraRTCProvider component so it is available to the rest of the application.

    import AgoraRTC, { AgoraRTCProvider } from 'agora-rtc-react'

    const Client = function ({ children }: Props) {
      // create a client in live mode using the vp8 codec
      const client = AgoraRTC.createClient({
        mode: 'live',
        codec: 'vp8',
      })

      return <AgoraRTCProvider client={client}>{children}</AgoraRTCProvider>
    }

To join an Agora channel, you need a valid token. Get one from the Agora console or generate it dynamically using something like the AgoraDynamicKey tool.

After obtaining a valid token, use a calling flag to manage the status of the stream. Then, utilize the useJoin hook to join the channel based on the flag value. To get the streams from other users in the channel, use the useRemoteUsers hook:

    // set a flag for managing the state of the live stream
    const [calling, setCalling] = useState(false)

    // join the channel based on the value of the flag
    useJoin(
      {
        appid: <your appid>,
        channel: <your channel>,
        token: <your token>,
      },
      calling
    )

    // get streams from remote users
    const remoteUsers = useRemoteUsers()

Now it’s time to push some media to the channel. Pushing audio is straightforward using the useLocalMicrophoneTrack hook. Video takes a little more work: LiveCart leverages Insertable Streams to let sellers embed images and QR codes into the video stream (which we won’t cover in this post, but you can follow the provided link for details), so you’ll need to create a custom processed video track for Agora to push. One effective approach is to build a useCustomVideoTrack hook.

The hook gets a video stream using getUserMedia, processes it using the createProcessedTrack function described in the link above, and finally uses AgoraRTC.createCustomVideoTrack to create the video track that will be pushed to the channel.

    ...
    // get video stream from camera
    const cameraStream = await navigator.mediaDevices.getUserMedia({
      video: device?.deviceId ? { deviceId: device.deviceId } : true,
      audio: false,
    })

    // transform the video track using the provided transform function
    const pTrack = createProcessedTrack({
      track: cameraStream.getVideoTracks()[0],
      transform,
      signal,
    })

    // create a new Agora custom video track based on the processed video track
    const customVideoTrack = await AgoraRTC.createCustomVideoTrack({
      mediaStreamTrack: pTrack,
    })
    ...

Once you have both tracks, the usePublish hook sends them to the channel. Remember that in order to publish media to the channel in live mode, you need to explicitly set the role of the Agora client to host.

    // get audio track
    const { localMicrophoneTrack } = useLocalMicrophoneTrack(!micMuted, {
      microphoneId: activeAudioDevice.current?.deviceId,
    })

    // get video track using the custom hook
    const { localVideoTrack } = useCustomVideoTrack(!camMuted, {
      device: activeVideoDevice.current,
      transform: transformFn, // pass a transform function for processing the track
      signal: abortController.current.signal,
    })

    // set the Agora client to host
    const client = useRTCClient()
    client.setClientRole('host')

    // publish media to the channel
    usePublish([localMicrophoneTrack, localVideoTrack])

Finally, render the media streams in the page using the LocalUser and RemoteUser components from the library.

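    <Videos>
      {/* local stream, using the processed track created by the custom hook */}
      <LocalUser
        cameraOn={!camMuted}
        micOn={!micMuted}
        videoTrack={localVideoTrack}
      >
        You
      </LocalUser>
      {/* one RemoteUser per remote participant in the channel */}
      {remoteUsers?.map((user) => (
        <RemoteUser user={user} key={user.uid}>
          {user.uid}
        </RemoteUser>
      ))}
    </Videos>

Let’s Make Live Selling Intelligent!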

Live selling has already transformed online shopping by making it interactive and real-time. But what if it could also be smart? AI enhances the experience in ways that make live shopping more intuitive, engaging, and personalized for the customer.

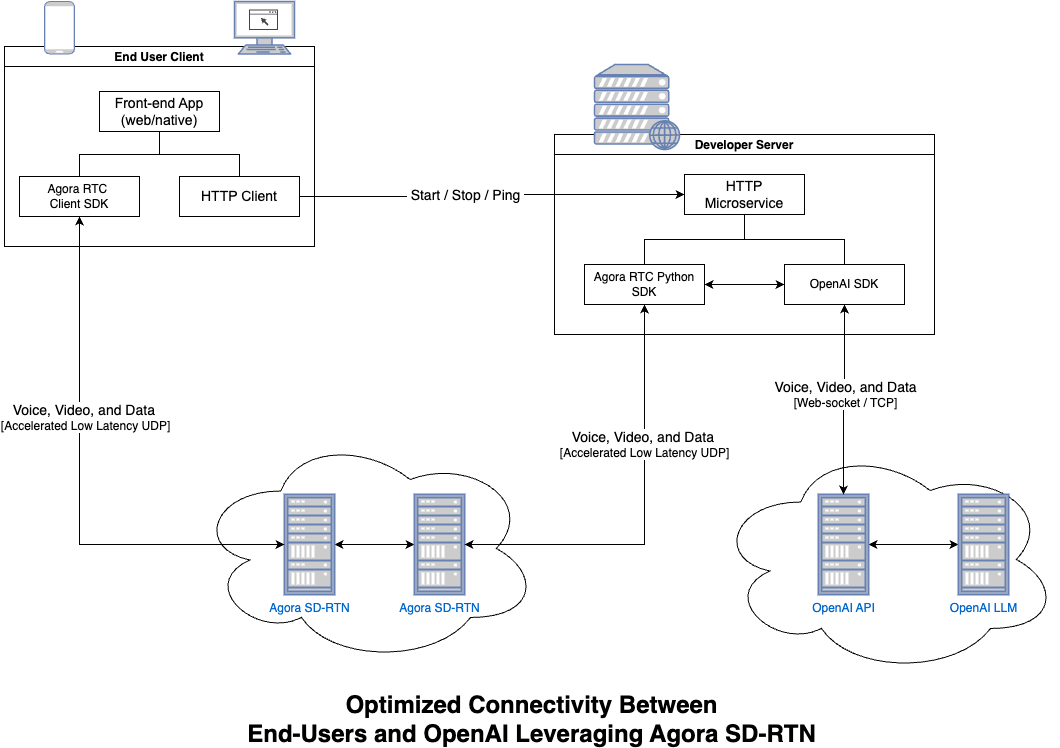

Agora offers a powerful AI agent service that integrates with the OpenAI Realtime API, enabling AI agents to join Agora channels and engage in voice-to-voice interactions with users. What’s great about this integration is that it streamlines the process with simple HTTP endpoints like /start_agent and /stop_agent.

The image below, from the Agora documentation, describes the flow of the integration.

In the application, all you need to do is to call the right endpoint to start and stop the agent when joining or leaving the channel. Recall there is a calling flag that manages this, so you can leverage that for making the requests and keep the state of the agent in a separate agentRunning flag.
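The same two endpoints can also be exercised outside the browser, which is handy for checking that the agent service is reachable before wiring it into the UI. The following is a minimal Python sketch using only the standard library. The endpoint paths and the channelName field are taken from the frontend code in this post; the base URL and the build_agent_request helper are hypothetical and would need to match your own deployment.

```python
import json
from urllib.request import Request

# Hypothetical base URL of the agent microservice; replace with your deployment.
AGENT_SERVICE_URL = "http://localhost:8080"


def build_agent_request(
    action: str,
    channel_name: str,
    base_url: str = AGENT_SERVICE_URL,
) -> Request:
    """Build (but do not send) the POST request that starts or stops the agent."""
    if action not in ("start_agent", "stop_agent"):
        raise ValueError(f"unsupported action: {action}")
    # Same JSON body the frontend sends: the channel the agent should join or leave.
    body = json.dumps({"channelName": channel_name}).encode("utf-8")
    return Request(
        url=f"{base_url}/{action}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_agent_request("start_agent", "demo-channel")
    print(request.get_method(), request.full_url)
    # To actually send it: urllib.request.urlopen(request, timeout=10)
```

The helper only builds the request, so it can be inspected or logged before anything is sent. In the React application, the same requests are tied to the calling flag: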

    const [agentRunning, setAgentRunning] = useState(false)

    useEffect(() => {
      // start the agent when joining a channel
      if (calling) {
        fetch(`<http-microservice>/start_agent`, {
          method: 'POST',
          headers: {
            'Content-Type': 'application/json',
          },
          body: JSON.stringify({
            channelName: <your-channel>,
          }),
        }).then(() => setAgentRunning(true))
      // when calling is false and there is an agent running, stop it
      } else if (agentRunning) {
        fetch(`<http-microservice>/stop_agent`, {
          method: 'POST',
          headers: {
            'Content-Type': 'application/json',
          },
          body: JSON.stringify({
            channelName: <your-channel>,
          }),
        }).then(() => setAgentRunning(false))
      }
    }, [calling])

Putting a Face on the AI Companion

At this point in our build, sellers are able to interact with an AI companion that supports them on their selling mission. This companion is just a talking black box, so let’s also give it a face leveraging Simli. Simli is an API that “…allows adding faces to AI agents, creating low latency streaming avatars.”

Given our project architecture, the optimal location to integrate Simli’s SDK is within the AI agent service provided by Agora. To achieve this, we have created a dedicated fork of the service and added some changes. Let’s take a quick look at what we did.

First, we created a Simli account and grabbed an API key from the Simli Studio.

Next, we created a separate class for managing the connection between the agent service and Simli. This class creates the Simli client and provides methods to initialize and close the connection.

    class SimliConnection:
        def __init__(
            self,
            api_key: str | None = None,
            face_id: str = "tmp9i8bbq7c",
        ):
            # get Simli API key from constructor param or environment variable
            self.api_key = api_key or os.environ.get("SIMLI_API_KEY")
            # create a simli client (use the resolved key, not the raw parameter)
            self.client = SimliClient(
                SimliConfig(
                    apiKey=self.api_key,
                    faceId=face_id,
                    syncAudio=True,
                    maxSessionLength=60,
                    maxIdleTime=30,
                )
            )

        # a method for initializing the connection
        async def connect(self):
            await self.client.Initialize()

        # a method for closing the connection
        async def close(self):
            await self.client.stop()

Simli requires audio sampled at 16 kHz as input for the avatar’s speech, and outputs individual video and audio frames of the avatar speaking. This required implementing methods to send the audio received from the OpenAI Realtime API to Simli, and to process the resulting video and audio frames from Simli. Since the OpenAI Realtime API provides audio sampled at 24 kHz, it must be resampled before being sent to Simli.
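To make the rate conversion concrete, here is a small standard-library sketch of what resampling 24 kHz audio to 16 kHz involves. It assumes 16-bit mono PCM on a little-endian machine and uses plain linear interpolation with no low-pass filter, so it illustrates the arithmetic and is not production-quality audio code. The resample_pcm16_mono function is a hypothetical helper and is not part of the agent service.

```python
from array import array


def resample_pcm16_mono(pcm: bytes, src_rate: int = 24000, dst_rate: int = 16000) -> bytes:
    """Resample 16-bit mono PCM using linear interpolation (illustrative only)."""
    # array("h") reads native-endian signed 16-bit samples; this matches
    # little-endian PCM on typical x86 and ARM machines.
    samples = array("h")
    samples.frombytes(pcm)
    if not samples or src_rate == dst_rate:
        return pcm

    out_len = len(samples) * dst_rate // src_rate  # 3 input samples -> 2 output samples
    step = src_rate / dst_rate                     # 1.5 input samples per output sample
    last = len(samples) - 1
    out = array("h")
    for i in range(out_len):
        pos = i * step                  # fractional position in the input
        left = int(pos)
        right = min(left + 1, last)
        frac = pos - left
        # weighted average of the two neighbouring input samples
        out.append(round(samples[left] * (1 - frac) + samples[right] * frac))
    return out.tobytes()


if __name__ == "__main__":
    # 20 ms of audio at 24 kHz is 480 samples, or 960 bytes
    chunk = array("h", range(480)).tobytes()
    print(len(chunk), "->", len(resample_pcm16_mono(chunk)), "bytes")
```

Every chunk shrinks to two thirds of its original size, so a 20 ms chunk goes from 480 samples to 320. In the agent service, the conversion is delegated to AudioSegment inside the connection class: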

    # a method for sending audio to simli
    async def send_audio(self, audio: bytes):
        # Downsample from 24 kHz to 16 kHz
        current_audio = AudioSegment.from_raw(
            io.BytesIO(audio),
            frame_rate=24000,
            sample_width=2,
            channels=1
        )
        resampled_audio = current_audio.set_frame_rate(16000)
        # send resampled audio to simli
        await self.client.send(resampled_audio.raw_data)

    # obtain resulting audio and video frames
    async def get_video_frames(self):
        async for frame in self.client.getVideoStreamIterator(targetFormat='yuva420p'):
            try:
                yield frame
            # catch Exception rather than everything, so that closing
            # the generator is not swallowed by the handler
            except Exception:
                continue

    async def get_audio_frames(self):
        async for frame in self.client.getAudioStreamIterator():
            yield frame

To send the avatar to the channel, some changes also needed to be made to the original code from Agora. We created our own implementations of the Channel and RtcEngine classes from the agora-realtime-ai-api package, which we named AvatarChannel and AvatarRtcEngine, respectively. This enabled us to configure a video sender and a video track, as well as to create custom push_audio_frame and push_video_frame methods.

With these changes in place, edit the RealtimeKitAgent class to establish a connection with Simli and create asynchronous tasks that consume audio and video frames from it.
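Before looking at the agent code, it may help to see the pattern in isolation: two independent asyncio tasks, each draining an async generator and pushing every frame to the channel. The sketch below is self-contained and uses stand-ins only. FakeAvatarSource, FakeChannel, forward, and run_pipeline are hypothetical names invented for this illustration, and are not the Simli or Agora classes.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable, List, Tuple


class FakeAvatarSource:
    """Stand-in for the Simli connection: yields a fixed number of frames."""

    def __init__(self, frame_count: int) -> None:
        self.frame_count = frame_count

    async def get_audio_frames(self) -> AsyncIterator[bytes]:
        for i in range(self.frame_count):
            await asyncio.sleep(0)  # hand control back to the event loop
            yield f"audio-{i}".encode()

    async def get_video_frames(self) -> AsyncIterator[bytes]:
        for i in range(self.frame_count):
            await asyncio.sleep(0)
            yield f"video-{i}".encode()


class FakeChannel:
    """Stand-in for the channel: records every frame that is pushed."""

    def __init__(self) -> None:
        self.pushed: List[Tuple[str, bytes]] = []

    async def push_audio_frame(self, frame: bytes) -> None:
        self.pushed.append(("audio", frame))

    async def push_video_frame(self, frame: bytes) -> None:
        self.pushed.append(("video", frame))


async def forward(
    frames: AsyncIterator[bytes],
    push: Callable[[bytes], Awaitable[None]],
) -> None:
    """Consume frames as they arrive and push each one to the channel."""
    async for frame in frames:
        await push(frame)


async def run_pipeline(source: FakeAvatarSource, channel: FakeChannel) -> None:
    # Keep references to the tasks so they can be awaited and cleaned up.
    tasks = [
        asyncio.create_task(forward(source.get_audio_frames(), channel.push_audio_frame)),
        asyncio.create_task(forward(source.get_video_frames(), channel.push_video_frame)),
    ]
    try:
        await asyncio.gather(*tasks)
    finally:
        for task in tasks:
            task.cancel()  # no effect on tasks that have already finished


if __name__ == "__main__":
    demo_channel = FakeChannel()
    asyncio.run(run_pipeline(FakeAvatarSource(3), demo_channel))
    print(len(demo_channel.pushed), "frames pushed")
```

Audio and video run as separate tasks so that a slow video frame does not hold back audio delivery. In the agent itself, the equivalent wiring looks like this: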

    ...
    class RealtimeKitAgent:
        ...
        simli_connection: SimliConnection  # add simli_connection property
        ...
        async def setup_and_run_agent(
            ...
        ) -> None:
            ...
            # create a simli connection when setting up the agent
            simli_connection = SimliConnection(api_key=os.getenv("SIMLI_API_KEY"))
            await simli_connection.connect()
            ...
            try:
                async with RealtimeApiConnection(
                    ...
                ) as connection:
                    ...
                    # pass the simli connection to the RealtimeKitAgent instance object
                    agent = cls(
                        ...
                        simli_connection=simli_connection
                    )
                    ...
            finally:
                ...
                # close the simli connection when closing the agent
                await simli_connection.close()

        # add simli_connection property when instantiating a new object
        def __init__(
            ...
            simli_connection: SimliConnection
        ) -> None:
            ...
            self.simli_connection = simli_connection

        async def run(self) -> None:
            try:
                ...
                # add asynchronous tasks for consuming audio and video frames from simli
                asyncio.create_task(self.avatar_to_rtc_audio())
                asyncio.create_task(self.avatar_to_rtc_video())
                ...

Finally, create the avatar_to_rtc_audio and avatar_to_rtc_video functions. First, make sure to send the audio from the OpenAI Realtime API to Simli instead of the channel by making a small change to the existing model_to_rtc function:

    async def model_to_rtc(self) -> None:
        ...
        try:
            while True:
                ...
                # Send audio to simli
                await self.simli_connection.send_audio(frame)
                # Write PCM data if enabled
                await pcm_writer.write(frame)
                ...

    # send audio frames from simli to the channel
    async def avatar_to_rtc_audio(self) -> None:
        # consume audio frames as they come
        async for audio_frame in self.simli_connection.get_audio_frames():
            # push audio frames to the channel
            await self.channel.push_audio_frame(audio_frame)

    # send video frames from simli to the channel
    async def avatar_to_rtc_video(self) -> None:
        # consume video frames as they come
        async for video_frame in self.simli_connection.get_video_frames():
            # push video frames to the channel
            await self.channel.push_video_frame(video_frame)

The Future of Online Retail is Interactive … and Intelligent

In this exploration of LiveCart, we’ve seen the exciting potential of merging WebRTC’s real-time video capabilities with the intelligence of AI. By integrating technologies like Agora, OpenAI’s Realtime API, and Simli’s AI avatars, we’ve moved beyond a simple live selling app. We’ve created a dynamic, interactive experience that is the future of online engagement. This is just a glimpse of what’s possible when we combine the power of real-time communication with the ever-evolving world of artificial intelligence.

Ready to bring the power of WebRTC and AI to your project? Contact WebRTC.ventures today. Let’s make it live!