In the previous posts in this series, I explained the basic networking concepts used in WebRTC. I introduced you to protocols and ports, the different types of networks, and the ways data can be transported. I also provided a high-level overview of signaling, ICE candidate exchange, and media transmission. If you haven’t read these, I recommend doing so first, as this post builds on the concepts explained there.

While we could write a complete book that goes deep into the inner workings of all of the above, nothing beats seeing the protocols, bytes, and dark magic of WebRTC in action.

In this post, we will take a look at a running video conference application. We will go under the hood to understand all the networking that happens in order to establish a call using WebRTC.

Running the Demo Application

As this is just for demo purposes, the focus is on the WebRTC-related parts. This means the demo video conference application won’t have features such as authentication and scaling, which are required in a production-like environment.

Also keep in mind that the purpose of this demo is to look at the networking that happens when a connection is established, not to teach how to build one. Some basic knowledge of building WebRTC applications is therefore required.

Requirements

The demo application will run on Amazon Web Services. You’ll need both an AWS account and an admin IAM user with its own access key pair (access key ID and secret access key). Refer to the AWS documentation for instructions on how to get these.

You also need to install and configure the AWS command line interface (AWS CLI) on your computer.

Next, you need to download the demo code from GitHub. You can install Git on your computer and run the following commands to clone it:

```bash
git clone https://github.com/WebRTCventures/webrtc-networking-demo.git
cd webrtc-networking-demo
```

If you don’t want to install Git, you can get the code in a zip file here.

With that in place you’re ready to provision the servers and run the application.

Provisioning the Infrastructure

Our demo application consists of a web client written in JavaScript using React and a pair of servers running the Janus WebRTC Server and Coturn.

To make things easier, the repo includes a CloudFormation template that provisions all the required services in AWS. In addition to the servers, the template creates an S3 bucket for hosting the web application and a CloudFront distribution that acts as the entry point for both the app and the Janus server’s REST API.

To get started, log in to your AWS account, go to the CloudFormation dashboard, and make sure your region is set to us-east-1.

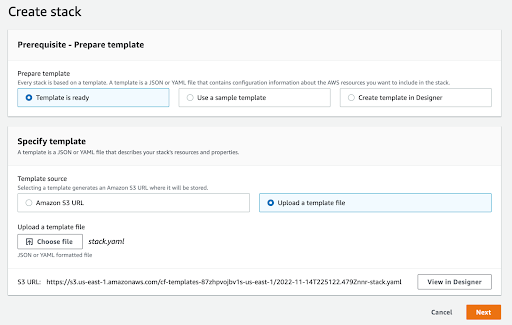

Under Stacks, click on “Create Stack” and you will be presented with a form. For this exercise, check the “Template is ready” option and then click on “Upload a template file”. Be sure to upload the stack.yml file from the repo.

Your screen should look similar to the one below:

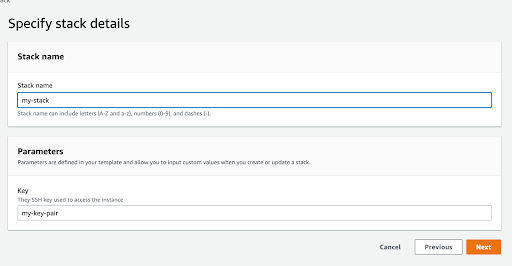

Click on “Next” and then provide a name for the stack and also the name of an SSH key pair to use in case you want to SSH into the servers. Then click “Next” once more.

On the next screen, leave everything as it is and click “Next” again. Review the values you entered to make sure everything is correct, and finally click “Submit”.

You will be directed to the dashboard of the new stack where you can see the progress of the creation. Wait until the stack is fully created. Then go to “Outputs” and take note of the IP address of the Coturn server, the S3 bucket name and the CloudFront Distribution URL. You’ll use these later.

Deploying the Application

The next step is to deploy the application code to the newly created infrastructure. Before doing so, let’s add our Coturn server’s address and credentials to it. Open the Videocall.js file under src/components and change the JanusComponent attributes like this:

```jsx
// src/component/Videocall.js
…
return (
  <>
    <JanusComponent
      server="/janus"
      iceUrls="stun:stun.l.google.com:19302"
      turnServer="turn:<your-coturn-server-ip>:3478"
      turnUser="turnuser"
      turnPassword="turnpassword">
      …
```

Then, build the application by running the following command from inside the repo’s root folder in a terminal:

```bash
cd path/to/application
npm run build
```

Now, in the same terminal, use the AWS CLI to upload the built assets to the S3 bucket created by CloudFormation, as shown below:

```bash
aws s3 sync build s3://<your-s3-bucket-name-here>/
```

With all the pieces in place, let’s take a moment to see the car running before looking under the hood.

Running the Application

Using a web browser, navigate to the CloudFront distribution’s URL. You’ll see a form where you can enter a room number and a username. Enter 100 as the room number and a unique identifier as the username; this identifier is used internally to identify the video streams in the media server. Then click “Join Room”.

Now, join from a different browser tab or device and make sure you enter the same room number.

Congratulations! We’ve just built a simple video conference application. Now, let’s take a look at what is going on behind the scenes to see all the concepts covered in past posts out in the wild.

For this, let’s identify three key moments for establishing communication:

- Connecting with the media server

- Exchanging information with the media server

- Sending and receiving media streams

Connecting to the Media Server

WebRTC is peer-to-peer by nature. This means that it enables two or more web browsers to establish a peer connection and exchange media streams directly without any intermediaries. In a previous post, you learned that this is thanks to a process called Signaling and the exchange of ICE candidates.

However, that peer-to-peer approach is difficult to scale: in a full mesh, each participant has to send a separate copy of its stream to every other participant. Sometimes you will also need to add intermediate processing for media streams. In these cases, it makes sense to have a media server in the middle.

In practice, the media server is simply another peer that we connect to. The difference is that instead of consuming the media streams itself, it forwards them to all the other peers. In essence, the same process used for peer-to-peer connections works here.
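The scaling argument can be made concrete with a little arithmetic. The sketch below uses a hypothetical helper (not part of the demo) and assumes each participant publishes exactly one video stream with no simulcast. It counts how many copies of its own stream each browser has to upload in a full mesh versus when a media server forwards the streams.

```javascript
// Hypothetical helper: how many copies of its own stream each participant uploads,
// assuming every participant publishes exactly one stream and no simulcast.
function uplinkStreamsPerParticipant(participants, topology) {
  if (!Number.isInteger(participants) || participants < 2) {
    throw new RangeError("a call needs at least two participants");
  }
  if (topology === "mesh") {
    // Each peer sends a separate copy to every other peer.
    return participants - 1;
  }
  if (topology === "media-server") {
    // Each peer sends one copy to the server, which forwards it to everyone else.
    return 1;
  }
  throw new Error(`unknown topology: ${topology}`);
}

// Total number of streams uploaded by all browsers in the call.
function totalUplinkStreams(participants, topology) {
  return participants * uplinkStreamsPerParticipant(participants, topology);
}

console.log(uplinkStreamsPerParticipant(6, "mesh"));         // 5
console.log(uplinkStreamsPerParticipant(6, "media-server")); // 1
console.log(totalUplinkStreams(6, "mesh"));                  // 30
```

The forwarding work does not disappear; it moves from the participants’ uplinks to the media server, which is usually much better provisioned for it.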

With that said, the first step is to connect to such a server. Media servers expose one or more interfaces that clients can connect to, and each interface can use one of many available protocols. HTTP is a popular one.

A (Brief) Word on HTTP and HTTPS Protocols

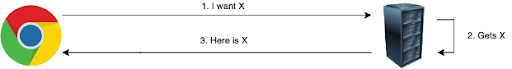

In a previous post, we introduced the concept of protocols and mentioned that HTTP is a protocol used for the web. HTTP is not limited to browsers, though: programs can send HTTP requests too, which is why HTTP also serves as a backend interface for mobile applications.

HTTP works in a request/response fashion: a client (either a browser or a program) creates a request with information for a server and sends it to an address that is exposed by such a server.

The server receives the request, processes the data and creates a response that either contains data required by the client or simply a confirmation that the request was processed successfully. The response is then sent back to the client.

HTTPS is the same as HTTP, but it adds encryption (TLS) into the mix.

The address is composed of the following elements:

```
protocol://hostname:port/optional-path
```

- The protocol states whether the request goes through HTTP or HTTPS

- The hostname is the unique domain name or IP address of the server

- The port is where the server is listening for requests

- The optional path refers to the specific resource of the server that is being requested. The optional path is also called the endpoint.

The address for a typical HTTPS request to a users resource on a server named example.com would look like this:

```
https://example.com/users
```

You’re probably saying, “I see, but where’s the port?” By default, servers listen for HTTP and HTTPS requests on ports 80 and 443, respectively. If you omit the port, these defaults are used implicitly.

However, this will depend on server configuration. If another server is configured to listen for HTTPS on port 5000, you will need to use https://anotherserver.com:5000 instead.
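The same breakdown can be done programmatically. This sketch uses the standard URL class (available in browsers and in Node.js); the describeAddress function is hypothetical and only meant to show where the implicit default port comes into play.

```javascript
// Splits an address into the parts described above, filling in the implicit
// default port when the address omits it.
const DEFAULT_PORTS = { "http:": 80, "https:": 443 };

function describeAddress(address) {
  const url = new URL(address); // WHATWG URL parser, built into browsers and Node.js
  // url.port is "" when the address has no port or uses the scheme's default one.
  const port = url.port !== "" ? Number(url.port) : DEFAULT_PORTS[url.protocol];
  return {
    protocol: url.protocol.slice(0, -1), // "https:" -> "https"
    hostname: url.hostname,
    port,
    path: url.pathname,
  };
}

const usersAddress = describeAddress("https://example.com/users");
console.log(usersAddress.port, usersAddress.path); // 443 /users

const customAddress = describeAddress("https://anotherserver.com:5000");
console.log(customAddress.port, customAddress.path); // 5000 /
```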

This is by no means a complete reference on HTTP and HTTPS. But it’s enough to have a high-level understanding of the interaction between our client application and the Janus server.

Connecting to Janus using HTTPS

In our demo application, we use the Janus media server and connect to it over HTTP/HTTPS. Besides HTTP, Janus also supports other interfaces and protocols, such as WebSockets, as explained in its documentation.

First, let’s take a moment to watch this connection live. Using a web browser, open the URL of the CloudFront distribution you’ve just created. Before doing anything else, open the browser’s developer console.

If you’re using Google Chrome, you can do this by clicking on the three dots button at the top right corner of the window and then going to More Tools > Developer Tools.

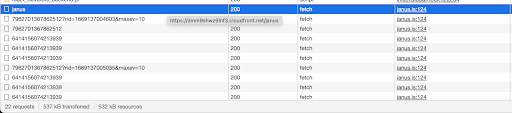

Next, locate the Network tab and try to join a room. You should start seeing a bunch of HTTP requests to a ‘/janus’ endpoint. That’s our very own server!

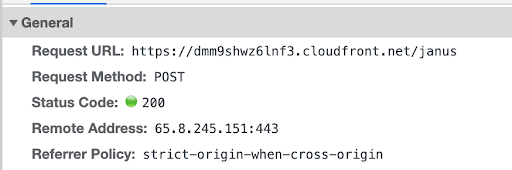

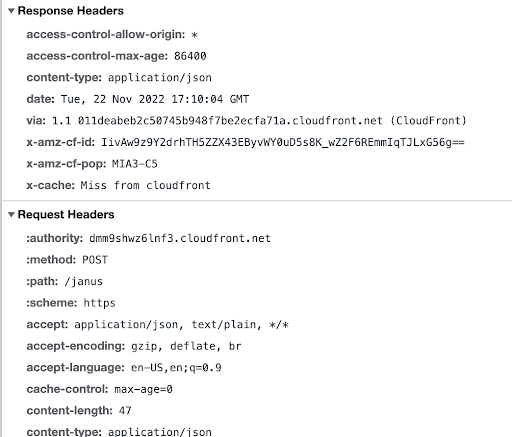

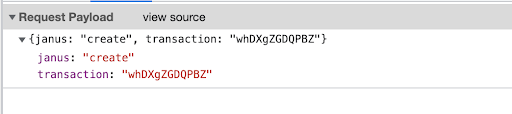

Let’s focus on the first one, which represents our very first request to the server. Click the request to see its information. If you’re using Google Chrome, you should see something like this:

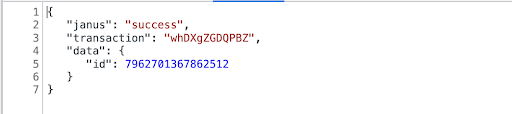

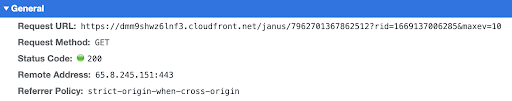

This tells you that the request was made to the CloudFront distribution (remember, it acts as the entry point) at the ‘/janus’ endpoint, which is where the Janus server’s REST API is available.

It also shows other pieces of information such as the method used and the result of the request.

In the same place you should be able to see more information such as the request headers, the data sent to the server and the response. In Google Chrome, you can see such information by scrolling down, while the actual request and response data live in their own sub-tab.

If you look at the Janus documentation, you will see that a request like the one shown above creates a session with the Janus server.

If we were including authentication in this example, the authentication data would be in the headers of the request or as part of the body as described in the Janus documentation.
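For reference, the body of that session-creation request is small. The sketch below builds it and reads the session ID from the reply, following the message format in the Janus REST API documentation. The helper names and the response values are made up for illustration; in a browser you would send the body with something like fetch("/janus", { method: "POST", body: JSON.stringify(request) }).

```javascript
// Hypothetical helpers (not from the demo) for the Janus "create" request.
function randomTransactionId(length = 12) {
  const chars = "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789";
  let id = "";
  for (let i = 0; i < length; i++) {
    id += chars[Math.floor(Math.random() * chars.length)];
  }
  return id;
}

// Body to POST to the root endpoint, e.g. /janus.
function buildCreateSessionRequest() {
  return { janus: "create", transaction: randomTransactionId() };
}

// Reads the session ID from the reply, checking it matches the request's transaction.
function parseCreateSessionResponse(request, response) {
  if (response.transaction !== request.transaction) {
    throw new Error("response belongs to a different transaction");
  }
  if (response.janus !== "success") {
    throw new Error(`session creation failed: ${JSON.stringify(response.error)}`);
  }
  return response.data.id;
}

// Example with a made-up reply:
const createReq = buildCreateSessionRequest();
const sessionId = parseCreateSessionResponse(createReq, {
  janus: "success",
  transaction: createReq.transaction,
  data: { id: 1234567890 },
});
console.log(sessionId); // 1234567890
```

The transaction string is what lets the client match replies to requests, which matters once several requests are in flight at the same time.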

Once you have created a session with Janus, you can perform further requests that allow you to negotiate the peer connection, exchange ICE candidates, and finally create the actual video room and join the call. Each of these actions requires its own HTTP request.
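To give an idea of what those requests look like, here is a sketch of two of them, based on the Janus REST API and VideoRoom plugin documentation: attaching the session to the VideoRoom plugin, then joining room 100 as a publisher through the resulting handle. The function names, IDs, and transaction strings are hypothetical, and the exact sequence the demo’s client library sends may differ, so compare it with what you see in the Network tab.

```javascript
// Hypothetical helpers (not from the demo). They only describe the requests;
// actually sending them, e.g. with fetch, is left out.
function buildAttachRequest(basePath, sessionId, transaction) {
  return {
    method: "POST",
    path: `${basePath}/${sessionId}`,
    // The reply's data.id is the handle ID used to talk to the plugin.
    body: { janus: "attach", plugin: "janus.plugin.videoroom", transaction },
  };
}

function buildJoinRequest(basePath, sessionId, handleId, { room, display }, transaction) {
  return {
    method: "POST",
    path: `${basePath}/${sessionId}/${handleId}`,
    body: {
      janus: "message",
      // Plugin-specific payload understood by the VideoRoom plugin.
      body: { request: "join", ptype: "publisher", room, display },
      transaction,
    },
  };
}

const attach = buildAttachRequest("/janus", 1234567890, "tx-attach");
const join = buildJoinRequest("/janus", 1234567890, 987654321, { room: 100, display: "alice" }, "tx-join");
console.log(attach.path); // /janus/1234567890
console.log(join.path);   // /janus/1234567890/987654321
```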

Let’s look at a couple more of these requests in the Network tab.

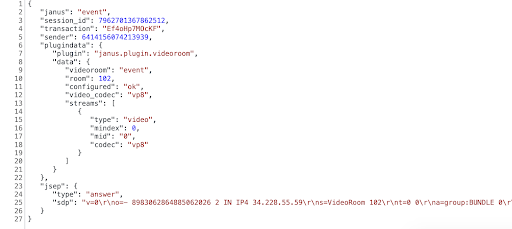

Below you’ll see a GET request where the client application asks the server whether there is a new event. When using Janus, some requests are processed asynchronously, so the client needs to periodically ask whether there is any new data related to them. In this case, the server is responding with an SDP Answer:

Let’s see another example. This time the client is sending an ICE candidate to the server, and the server is simply acknowledging that it was received.
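That trickle exchange can be sketched as follows. The body shape follows the trickle message in the Janus REST API documentation and is sent to the plugin handle endpoint (/janus/&lt;session_id&gt;/&lt;handle_id&gt;); the helper names and the sample candidate (which uses documentation IP ranges) are hypothetical.

```javascript
// Hypothetical helper: body of a Janus "trickle" request carrying one local ICE candidate.
function buildTrickleRequest(candidate, transaction) {
  return {
    janus: "trickle",
    // When gathering is finished, Janus expects { completed: true } instead of a candidate.
    candidate: candidate ?? { completed: true },
    transaction,
  };
}

// The server answers a trickle request with a plain acknowledgment for the same transaction.
function isAckFor(request, response) {
  return response.janus === "ack" && response.transaction === request.transaction;
}

const trickle = buildTrickleRequest(
  {
    candidate: "candidate:842163049 1 udp 1677729535 203.0.113.5 54321 typ srflx raddr 192.168.1.10 rport 50000",
    sdpMid: "0",
    sdpMLineIndex: 0,
  },
  "tx-trickle-1"
);
console.log(isAckFor(trickle, { janus: "ack", session_id: 1234567890, transaction: "tx-trickle-1" })); // true
```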

Take some time to review the requests and pay attention to the general information, the body and the response. This will give you an idea of the information that the client exchanges with the server.

You can learn what each specific request means in the Janus documentation.
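When polling for events, the client has to tell the different kinds of replies apart. The sketch below is a hypothetical, simplified classifier based on the message shapes in the Janus REST API documentation: plugin events arrive with janus set to "event" and the handle ID in sender, and an SDP Answer, when present, comes in a jsep field next to the plugin data. The sample event values are made up.

```javascript
// Hypothetical, simplified classifier for replies to GET /janus/<session_id>.
function classifyJanusEvent(msg) {
  if (msg.janus === "keepalive") {
    return { kind: "keepalive" }; // nothing happened before the poll timed out
  }
  if (msg.janus === "event") {
    const jsep = msg.jsep ?? null;
    return {
      kind: jsep ? `sdp-${jsep.type}` : "plugin-event", // e.g. "sdp-answer"
      handleId: msg.sender,
      data: msg.plugindata ? msg.plugindata.data : undefined,
      jsep,
    };
  }
  // Other notifications such as "webrtcup", "media" or "hangup" are passed through.
  return { kind: msg.janus, handleId: msg.sender };
}

const answerEvent = classifyJanusEvent({
  janus: "event",
  session_id: 1234567890,
  sender: 987654321,
  plugindata: { plugin: "janus.plugin.videoroom", data: { videoroom: "event", configured: "ok" } },
  jsep: { type: "answer", sdp: "v=0\r\n..." },
});
console.log(answerEvent.kind); // sdp-answer
```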

Now let’s look at the code that makes this connection possible. If you look at src/components/Videocall.js, you will see that the server property of the JanusComponent component is set to ‘/janus’. This tells the underlying Janus client library to create requests and send them to the /janus endpoint.

```jsx
// src/component/Videocall.js
…
return (
  <>
    <JanusComponent
      server="/janus"
      …>
    …
```

Remember that both the application and Janus are served through a CloudFront distribution. This lets them share the same URL while giving Janus its own endpoint (/janus). If this weren’t the case, you’d need to use the server’s complete address (including the port, if applicable), as shown below:

```jsx
// src/component/Videocall.js
…
return (
  <>
    <JanusComponent
      server="http://anotherjanus.com:8088"
      …>
    …
```

By default, the Janus server listens for HTTP traffic on port 8088. If you want to reach it directly, you have to specify that port, as shown above.
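Why does a relative value like /janus work at all? A browser resolves a relative request URL against the address of the page making the request, so /janus ends up on the CloudFront host that served the app. The sketch below reproduces that resolution with the standard URL class. The CloudFront hostname is a made-up placeholder, and the assumption is that the client library hands the server value straight to the browser’s HTTP machinery.

```javascript
// Resolves the `server` setting the way a browser resolves a relative request URL:
// against the address of the page that makes the request.
function resolveJanusServer(server, pageUrl) {
  return new URL(server, pageUrl);
}

const pageUrl = "https://d1234example.cloudfront.net/"; // hypothetical distribution URL

const viaCloudFront = resolveJanusServer("/janus", pageUrl);
console.log(viaCloudFront.href); // https://d1234example.cloudfront.net/janus

// An absolute address ignores the page URL entirely, including its scheme and port.
const direct = resolveJanusServer("http://anotherjanus.com:8088", pageUrl);
console.log(direct.host); // anotherjanus.com:8088
```

Keep in mind that browsers block requests from a page served over HTTPS to a plain http:// endpoint (mixed content), which is another reason to route Janus through the same CloudFront distribution as the app.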

We’re connected to the server now. The next step is to start negotiating the peer connection and exchanging ICE candidates.

Signaling and ICE Candidates

As we described in a previous post, establishing a peer connection requires a process called Signaling. In our demo, Signaling is performed through Janus’s REST API. This means that all SDP Offers and Answers, as well as ICE candidates, are exchanged through HTTPS requests.

SDP Offers and Answers

In order to establish a peer connection, both peers need to exchange two special types of messages known as the Offer and the Answer.

Offers and Answers are written using the Session Description Protocol (SDP). In the demo application, the SDP Offer is created transparently and sent to the server behind the scenes.

Take a look at the publishOwnFeed function in src/vendor/react-janus/utils/publisher.js. It calls a createOffer method that generates a JSEP (JavaScript Session Establishment Protocol) object containing the Offer, which is then sent to the server.

```javascript
// src/vendor/react-janus/utils/publisher.js
…
export function publishOwnFeed({publisherHandle, audio, video}) {
  …
  // Publish our stream
  publisherHandle.createOffer({
    // Add data:true here if you want to publish datachannels as well
    media,
    simulcast: false,
    success: function (jsep) {
      Janus.debug('Got publisher SDP!');
      Janus.debug(jsep);
      var publish = { request: 'configure', audio: !!audio, video: !!video };
      publisherHandle.send({ message: publish, jsep: jsep });
    },
    …
  });
}
```

The publishOwnFeed function is called when the JanusPublisher component mounts.

```javascript
// src/vendor/react-janus/JanusPublisher.js
…
function JanusPublisher() {
  …
  useEffect(() => {
    if (publisherHandle) {
      console.log('publishing...');
      publishOwnFeed({
        publisherHandle,
        audio: false, // no audio for this demo
        video: true
      });
    }
  }, [publisherHandle]);
  …
}
```

Notice the use of a publisherHandle object. This object contains information about the connection with the Janus server, and it is the one that creates the HTTP requests under the hood.

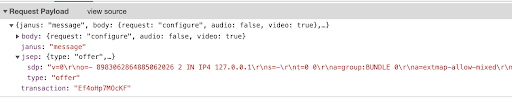

If you go through the requests in your Network tab again, you’ll find one with a body similar to the following:

That’s our client application sending its Offer to the server!
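Under the hood, a call such as publisherHandle.send({ message: publish, jsep }) turns into an HTTP POST to the handle’s endpoint. The sketch below shows the shape of that body as described in the Janus REST API documentation. The helper is hypothetical, not how the client library is implemented, and the SDP string is truncated.

```javascript
// Hypothetical helper: the REST body that carries the VideoRoom "configure" request
// together with the SDP Offer, POSTed to /janus/<session_id>/<handle_id>.
function buildConfigureRequest({ audio, video, jsep, transaction }) {
  return {
    janus: "message",
    body: { request: "configure", audio: !!audio, video: !!video }, // same object as `publish`
    jsep, // { type: "offer", sdp: "..." } produced by createOffer
    transaction,
  };
}

const configure = buildConfigureRequest({
  audio: false, // the demo publishes video only
  video: true,
  jsep: { type: "offer", sdp: "v=0\r\no=- 1078774245226888588 2 IN IP4 127.0.0.1\r\n..." },
  transaction: "tx-configure",
});
console.log(JSON.stringify(configure.body)); // {"request":"configure","audio":false,"video":true}
```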

On the server side, Janus already implements the logic for dealing with the Offer and generating an Answer. This logic lives in a plugin called VideoRoom.

Sending the Offer is an asynchronous operation in Janus. To get the Answer, the client needs to periodically ask whether there are new events. When an event is received, we just need to check whether it contains a JSEP object. The Answer will be inside that object.

```javascript
// src/vendor/react-janus/utils/publisher
…
if (jsep !== undefined && jsep !== null) {
  Janus.debug('Handling SDP as well...');
  Janus.debug(jsep);
  publisherHandle.handleRemoteJsep({ jsep: jsep });
  …
}
```

Now let’s look at what our Offer and Answer look like. To do so, we will use a feature of the Chrome browser called WebRTC Internals.

Open Google Chrome and navigate to chrome://webrtc-internals. In a different tab, open the demo application and join a room. Now head to the WebRTC internals tab and locate a sub tab which contains the information about this connection. You’ll be able to identify it because it has the URL of the application.

In the subtab you’ll be able to see information such as the state of the connection, the selected ICE candidates, and a list of all the events that happened prior to the connection. Within that list, look for the one labeled setLocalDescription and expand it. You’ll be able to see the SDP Offer that your browser generated.

It will look like this:

```
v=0
o=- 1078774245226888588 2 IN IP4 127.0.0.1
s=-
t=0 0
a=group:BUNDLE 0
a=extmap-allow-mixed
a=msid-semantic: WMS d543a4e7-549d-4f77-ab7d-d80b7ec3c6b6
m=video 9 UDP/TLS/RTP/SAVPF 96 97 102 122 127 121 125 107 108 109 124 120 39 40 45 46 98 99 100 101 123 119 114 115 116 mid=0
c=IN IP4 0.0.0.0
a=rtcp:9 IN IP4 0.0.0.0
a=ice-ufrag:E/Gp
a=ice-pwd:kzcZjfCOqODS6mxqcemOlCNH
a=ice-options:trickle
a=fingerprint:sha-256 FD:A8:CB:AC:0E:4C:5A:81:96:46:5C:23:F1:BD:DE:D9:0B:C8:8D:86:38:31:93:9D:76:CC:27:94:79:02:30:7E
a=setup:actpass
a=mid:0
a=extmap:1 urn:ietf:params:rtp-hdrext:toffset
… more lines
```

The list of events also shows the Answer we received from the server. Look for setRemoteDescription and expand it to see it.

```
v=0
o=- 8691049433307334517 2 IN IP4 54.83.119.48
s=VideoRoom 104
t=0 0
a=group:BUNDLE 0
a=ice-options:trickle
a=fingerprint:sha-256 CE:99:CD:94:99:6F:6F:05:B9:D8:7E:8B:B1:05:31:E0:5E:2B:CF:DF:01:35:F3:ED:57:06:D4:54:11:3E:DF:3D
a=extmap-allow-mixed
a=msid-semantic: WMS *
m=video 9 UDP/TLS/RTP/SAVPF 96 97 mid=0
c=IN IP4 34.228.55.59
a=recvonly
a=mid:0
a=rtcp-mux
… more lines
```

At first sight, both the Offer and the Answer might look intimidating because of all the information they include. The good news is that in most cases you won’t need to deal with them directly: the WebRTC APIs manage them transparently.

However, there are some cases where you do need to modify them. This process is known as SDP Munging. SDP Munging is easily a topic of its own and is out of the scope of this post.
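Even without munging, it helps to know that SDP is plain, line-oriented text: every line has the form &lt;type&gt;=&lt;value&gt;, and a= lines carry attributes. The sketch below is a deliberately minimal, hypothetical reader, not a full SDP parser: it keeps only the last value of repeated attributes and does not separate session-level from media-level attributes. Its input is a shortened excerpt based on the Answer above.

```javascript
// Minimal SDP reader for illustration only.
function summarizeSdp(sdp) {
  const summary = { media: [], attributes: {} };
  for (const line of sdp.split(/\r?\n/)) {
    if (line.length < 2 || line[1] !== "=") continue; // skip blank or malformed lines
    const type = line[0];
    const value = line.slice(2);
    if (type === "m") {
      // m=<media> <port> <proto> <formats...>
      const [kind, port, proto, ...formats] = value.split(" ");
      summary.media.push({ kind, port: Number(port), proto, formats });
    } else if (type === "c") {
      summary.connection = value; // e.g. "IN IP4 34.228.55.59"
    } else if (type === "a") {
      const sep = value.indexOf(":");
      const name = sep === -1 ? value : value.slice(0, sep);
      // Flag attributes such as a=recvonly have no value; store true for them.
      summary.attributes[name] = sep === -1 ? true : value.slice(sep + 1);
    }
  }
  return summary;
}

const answerExcerpt = [
  "v=0",
  "s=VideoRoom 104",
  "t=0 0",
  "a=group:BUNDLE 0",
  "m=video 9 UDP/TLS/RTP/SAVPF 96 97",
  "c=IN IP4 34.228.55.59",
  "a=recvonly",
  "a=mid:0",
].join("\r\n");

const parsed = summarizeSdp(answerExcerpt);
console.log(parsed.media[0].formats);    // [ '96', '97' ]
console.log(parsed.attributes.recvonly); // true
```

Reading the Answer this way shows, for example, that the server only receives video on this connection (recvonly), which fits a publisher sending its own stream to Janus.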

That’s all for the Offer/Answer mechanism. Now let’s look at the other half of Signaling: ICE candidates exchange.

ICE Candidate Exchange

In addition to exchanging Offers and Answers, peers also need to exchange ICE Candidates. As we have learned before, the Interactive Connectivity Establishment (ICE) protocol is what allows a WebRTC connection to traverse NAT. In our demo, both peers will likely be behind some type of NAT.

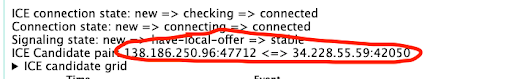

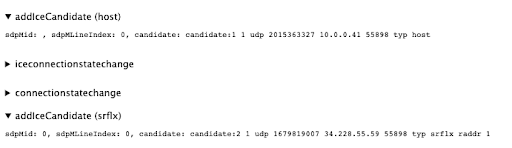

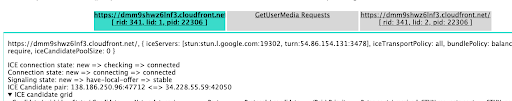

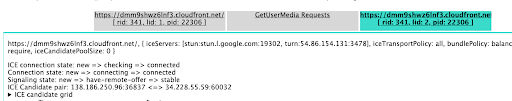

Once again, let’s look at this live. Taking advantage of the connection we already have in place, head to the WebRTC internals tab again. This time, note the ICE candidate pair at the top of the screen:

At this point, both peers have finished exchanging information and a pair of ICE candidates has been selected. Media traffic is starting to flow!

As you can see, an ICE candidate is composed of an IP address and a port. An ICE candidate pair is composed of a local candidate (the one on the left) and a remote candidate (the one on the right).

Using a service like whatismyip, take note of your own public IP address. Is it the same as the local candidate shown in the WebRTC Internals tab? What about the remote candidate? Is it the IP address of the Janus server? If you see both your public IP and the Janus server’s IP, you were able to establish a direct connection, and NAT didn’t represent an issue whatsoever!

If not, a direct connection was not possible. In this case, a TURN server was required, so you should see the Coturn server’s IP instead.

The ICE candidate pair highlighted above is where the media traffic is actually being transmitted, but we’ll get back to this later.

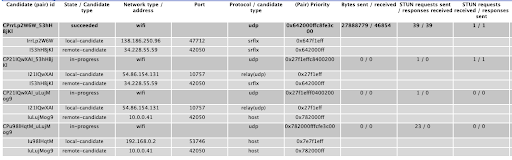

For now, let’s be more curious and check what other ICE candidates were considered. To do so, expand the ICE candidate grid, which shows all the tested pairs along with their ports, types, and even protocols.
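Each candidate also carries a priority, and ICE uses it to order the pairs it tests. The sketch below implements the formulas from RFC 8445 with the RFC’s recommended type preferences (host 126, peer reflexive 110, server reflexive 100, relay 0). Browsers are free to pick other preference values, so treat the numbers as illustrative; the function names are hypothetical.

```javascript
// Recommended type preferences from RFC 8445.
const TYPE_PREFERENCE = { host: 126, prflx: 110, srflx: 100, relay: 0 };

// Candidate priority = 2^24 * typePref + 2^8 * localPref + (256 - componentId)
function candidatePriority(type, localPreference, componentId = 1) {
  const typePref = TYPE_PREFERENCE[type];
  if (typePref === undefined) throw new Error(`unknown candidate type: ${type}`);
  return typePref * 2 ** 24 + localPreference * 2 ** 8 + (256 - componentId);
}

// Pair priority = 2^32 * MIN(G, D) + 2 * MAX(G, D) + (G > D ? 1 : 0), where G is the
// controlling agent's candidate priority and D the controlled agent's. BigInt is used
// because the result can exceed Number.MAX_SAFE_INTEGER.
function pairPriority(g, d) {
  const G = BigInt(g);
  const D = BigInt(d);
  const min = G < D ? G : D;
  const max = G > D ? G : D;
  return (1n << 32n) * min + 2n * max + (G > D ? 1n : 0n);
}

const hostPrio = candidatePriority("host", 65535);
const relayPrio = candidatePriority("relay", 65535);
console.log(hostPrio);  // 2130706431
console.log(relayPrio); // 16777215
```

Because the type preference dominates the formula, direct (host or srflx) pairs are tried and preferred before relayed ones, which is why a TURN server only ends up carrying media when nothing better works.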

Now let’s see how all this information is gathered. When establishing a peer connection, each peer starts gathering ICE candidates, which it then sends to the other peer. This is done transparently in the background; all you need to do is make sure you provide your STUN/TURN servers to the application code.

In our example this is done in the Videocall component where you added your Coturn server credentials before, in addition to Google’s free STUN server.
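The servers passed to the component end up in the standard RTCPeerConnection configuration, whose iceServers entries take urls plus, for TURN, a username and credential. The sketch below shows a hypothetical mapping from this demo’s JanusComponent props to that configuration; how the demo’s wrapper does it internally is an assumption, and the TURN address is a documentation placeholder for your Coturn IP.

```javascript
// Hypothetical mapping from the demo's props to an RTCPeerConnection configuration.
function buildRtcConfiguration({ iceUrls, turnServer, turnUser, turnPassword }) {
  const iceServers = [];
  if (iceUrls) {
    iceServers.push({ urls: iceUrls }); // STUN: no credentials needed
  }
  if (turnServer) {
    // TURN allocations are authenticated, so username/credential are required.
    iceServers.push({ urls: turnServer, username: turnUser, credential: turnPassword });
  }
  return { iceServers };
}

const config = buildRtcConfiguration({
  iceUrls: "stun:stun.l.google.com:19302",
  turnServer: "turn:203.0.113.10:3478", // placeholder for your Coturn server IP
  turnUser: "turnuser",
  turnPassword: "turnpassword",
});
// In a browser: const pc = new RTCPeerConnection(config);
console.log(config.iceServers.length); // 2
```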

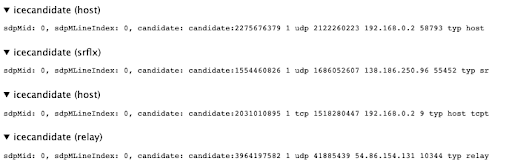

Provided that everything is set up correctly, the ICE candidates start appearing in the webrtc-internals tab, and when Trickle ICE is enabled (as is the case in our demo), they are sent to the other peer as soon as they are gathered.

The most relevant ICE candidates in the image above are the server reflexive (srflx) and the relay ones. They correspond to the candidates obtained from the STUN and TURN servers, respectively.

The srflx candidate is the public IP address and port that the NAT device of your LAN assigned to your request to the STUN server, as seen by that server. In other words, it is the IP address and port your device appears to use on the internet, or at least the ones used to reach the STUN server.

The relay candidate comes from a negotiation (an allocation) between the client and the TURN server. If the other peer can’t send media traffic through the server reflexive candidate, it can send it to a port allocated on the TURN server instead. Hence, the relay candidate is the IP address of the TURN server plus the allocated port.

In other words, a relay candidate is a way of telling the other peer: “If you can’t send data directly to me, send it through here”.

The same applies on the server side, which also sends its ICE candidates to be processed by the client. These can also be found in the event list on webrtc-internals.

Notice that the server didn’t send any relay candidates. This makes sense, as we haven’t configured a TURN server for Janus in this exercise. That’s fine for our purposes, but in production-like scenarios you’ll want to add one.
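The candidates you see in webrtc-internals are text lines with a fixed layout defined for ICE in SDP (RFC 8839): foundation, component, transport, priority, address, port, and typ followed by the candidate type, plus optional extensions such as raddr and rport. The parser below is a hypothetical, simplified sketch of that layout; the sample candidates use documentation IP ranges, not values from the demo.

```javascript
// Hypothetical, simplified parser for an ICE candidate line, e.g. the value of
// RTCIceCandidate.candidate or an a=candidate: line from SDP. Layout:
// candidate:<foundation> <component> <transport> <priority> <address> <port> typ <type> [name value]...
function parseCandidate(line) {
  const text = line.trim().replace(/^a=/, "").replace(/^candidate:/, "");
  const parts = text.split(/\s+/);
  if (parts.length < 8 || parts[6] !== "typ") {
    throw new Error(`not an ICE candidate: ${line}`);
  }
  const [foundation, component, transport, priority, address, port, , type] = parts;
  const candidate = {
    foundation,
    component: Number(component), // 1 = RTP, 2 = RTCP
    transport: transport.toLowerCase(),
    priority: Number(priority),
    address,
    port: Number(port),
    type, // "host", "srflx", "prflx" or "relay"
  };
  // Extensions follow as name/value pairs. raddr/rport hold the related address,
  // e.g. the local address that a srflx candidate was derived from.
  for (let i = 8; i + 1 < parts.length; i += 2) {
    if (parts[i] === "raddr") candidate.relatedAddress = parts[i + 1];
    if (parts[i] === "rport") candidate.relatedPort = Number(parts[i + 1]);
  }
  return candidate;
}

const srflxCandidate = parseCandidate(
  "candidate:842163049 1 udp 1677729535 203.0.113.5 54321 typ srflx raddr 192.168.1.10 rport 50000 generation 0"
);
console.log(srflxCandidate.type, srflxCandidate.address, srflxCandidate.port); // srflx 203.0.113.5 54321
console.log(srflxCandidate.relatedAddress); // 192.168.1.10
```

For the srflx candidate, the related address is the private host address behind the NAT, while the candidate address itself is the public mapping the STUN server observed.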

Sending and Receiving Media Data

If you’re following our instructions closely, there’s only one peer connection established: the one between you and the Janus server, over which you’re sending your own video stream. That’s useful for understanding what’s going on, but nothing is really happening in the application yet. Let’s make it more interesting!

Using another device, open the demo application and join the same room. If you can, also invite someone on a different network to join. What happens on the WebRTC internals page? You should see additional subtabs appearing (that is, additional peer connections), each with different information.

How different are the ICE candidates of each peer connection? This will of course vary from scenario to scenario, but here you can see how it behaved in our tests.

You can see how we were able to connect directly with the Janus server in both peer connections, only using a different port. Oh, the wonders of ICE!

Now let’s focus on the actual media that is being sent and received in these connections. To do so let’s briefly get back to the highlighted ICE candidate pair we saw before.

Media flows through the selected candidate pair of a peer connection. One candidate, the local candidate, refers to your browser or device. The other one, the remote candidate, refers to the other peer (in this case, the server).

As you may recall from a previous post, ICE candidates tell each peer “where they can meet”, or more precisely, where to send media packets. The packets are sent using SRTP (the Secure Real-time Transport Protocol, the secure version of RTP, whose encryption keys are negotiated through DTLS) to the IP address and port of the remote candidate. They are received at the IP address and port of the local candidate.

This is different from the HTTP connection we saw at the beginning. In this case, we’re transmitting media packets, which need low latency and can tolerate some loss, so a different protocol (SRTP, typically over UDP) is used instead of HTTP.

In case a relay candidate is used on any end, there is an intermediate server that is receiving the media traffic and it is relaying (hence the name) such traffic to the other peer.
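You can also find the selected pair from code instead of webrtc-internals. The standard getStats() API exposes transport, candidate-pair, and local/remote candidate statistics. The sketch below (a hypothetical helper, exercised here against a mock report with made-up values) follows the transport’s selectedCandidatePairId to the pair and reports whether a relay candidate is involved. Field availability varies across browsers and versions, hence the fallbacks.

```javascript
// Returns the candidate pair currently carrying media, read from a WebRTC stats
// report (the Map-like RTCStatsReport that pc.getStats() resolves to).
function selectedCandidatePair(report) {
  const stats = [...report.values()];
  const transport = stats.find((s) => s.type === "transport" && s.selectedCandidatePairId);
  // Fallback when the transport stats don't name the selected pair:
  // pick a nominated pair whose connectivity checks succeeded.
  const pair = transport
    ? report.get(transport.selectedCandidatePairId)
    : stats.find((s) => s.type === "candidate-pair" && s.nominated && s.state === "succeeded");
  if (!pair) return null;

  const describe = (c) => ({
    address: c.address ?? c.ip, // older implementations report `ip` instead of `address`
    port: c.port,
    type: c.candidateType, // "host", "srflx", "prflx" or "relay"
    protocol: c.protocol,
  });
  const local = report.get(pair.localCandidateId);
  const remote = report.get(pair.remoteCandidateId);
  return {
    local: describe(local),
    remote: describe(remote),
    // If either side is a relay candidate, media goes through a TURN server.
    usesRelay: local.candidateType === "relay" || remote.candidateType === "relay",
  };
}

// Mock report with made-up values, shaped like the real stats objects.
const mockReport = new Map([
  ["T1", { id: "T1", type: "transport", selectedCandidatePairId: "CP1" }],
  ["CP1", { id: "CP1", type: "candidate-pair", localCandidateId: "L1", remoteCandidateId: "R1", nominated: true, state: "succeeded" }],
  ["L1", { id: "L1", type: "local-candidate", address: "203.0.113.5", port: 54321, candidateType: "srflx", protocol: "udp" }],
  ["R1", { id: "R1", type: "remote-candidate", address: "198.51.100.20", port: 20000, candidateType: "host", protocol: "udp" }],
]);
console.log(selectedCandidatePair(mockReport).usesRelay); // false
```

In the browser, you would pass it the report of an existing peer connection: selectedCandidatePair(await pc.getStats()).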

Cleanup and Final Thoughts

Before anything else, let’s clean up the infrastructure to prevent AWS from charging you more than it should.

In the AWS web console, head to Services > CloudFormation and select the stack you created in this post. At the top, click “Delete”. CloudFormation will start deleting all the resources.

When that is done, head to Services > S3 to delete the S3 buckets. This is a two-step process. First, click the bucket you want to delete, then click Empty and complete the confirmation step.

After the bucket is empty, you can click on Delete. Again there is a confirmation step to make sure you’re not deleting it by accident.

With that settled, it’s been a long journey to go from connecting to a server and sending messages to starting to exchange media streams. However, we were able to see what happens behind the scenes from the point of view of networking. This includes using the right protocol and port to connect with the server, setting up STUN/TURN servers correctly, and overall, understanding the type of messages that peers exchange during a connection.

This understanding is a powerful tool not only to build high quality real-time applications, but also for troubleshooting when things are not working properly.

Whether you’re looking to build a WebRTC application from scratch or are trying to solve problems with the one you have, contact us to know how we can help. Let’s make it live!

Posts in this series: