Building an application with a CPaaS such as Vonage’s Video API (formerly known as TokBox) enables developers to quickly create functional video conferencing applications with many features, including screen sharing and broadcasting with custom layouts. As the standard features become easier to implement, developers have more time to explore and build richer experiences.

An Alternative to Screen Sharing

Other forms of media or dynamic content can be streamed into a video call, providing applications with the possibility of more features. One example is to stream a controllable slide deck as a video stream into a Vonage media session. The stream can be published by a remote host that runs as a Vonage Video API client.

Having a remote service stream the content has the great benefit of not using the resources of a user’s machine to send it to the session. Screen sharing a slide show presentation, by contrast, adds another media stream that the user’s machine must capture and process.

This alternative also avoids a few drawbacks that come with screen sharing:

- Each browser has its own UI and flow for screen sharing, which can confuse users

- Permission issues are common and tend to change when operating systems and browsers are updated

- Having to manage a window separate from the video call often results in mistakes, such as sharing something that shouldn’t be shared or dropping off the conference by accident

Working a slide share service into an application avoids these issues and is both user-friendly and secure.

Implementation

The implementation of a remote streaming service uses a server to stream media into the Vonage media session. Since browsers (such as Google Chrome) tend to be the most compatible clients with WebRTC, the streaming service will use a browser as the client to stream the content.

Note that Vonage also has a Linux C++ SDK, which could be used to build the client instead.

Hosting the Service

Puppeteer is a Node.js library that provides APIs to control a Chrome browser. The server can use this library to load a custom webpage. The webpage can be built with the same JavaScript libraries and frameworks that integrate with the SFU, just as the main web conferencing application is.

The server, along with the webpage, can be made controllable using a signaling server to perform specific actions (start/stop stream, download new media to be streamed, etc).
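As a rough illustration of that control channel, the sketch below parses incoming messages into typed commands and dispatches them. It assumes the signaling server delivers JSON strings; the command names (`start`, `stop`, `loadDeck`), the `deckUrl` field, and the `StreamController` interface are all hypothetical and not part of any Vonage or Puppeteer API.

```typescript
// Hypothetical control commands; names and fields are illustrative only.
type ControlCommand =
  | { type: 'start' }
  | { type: 'stop' }
  | { type: 'loadDeck'; deckUrl: string }

// Parse a raw signaling payload into a typed command, or return null if malformed.
function parseControlCommand(raw: string): ControlCommand | null {
  let msg: unknown
  try {
    msg = JSON.parse(raw)
  } catch {
    return null
  }
  if (typeof msg !== 'object' || msg === null) return null
  const { type, deckUrl } = msg as { type?: unknown; deckUrl?: unknown }
  if (type === 'start' || type === 'stop') return { type }
  if (type === 'loadDeck' && typeof deckUrl === 'string' && deckUrl.length > 0) {
    return { type, deckUrl }
  }
  return null
}

// Hypothetical hooks that the streaming service would implement.
interface StreamController {
  start(): void
  stop(): void
  loadDeck(deckUrl: string): void
}

// Dispatch a message to the service; returns false when the payload is rejected.
function handleControlMessage(raw: string, controller: StreamController): boolean {
  const command = parseControlCommand(raw)
  if (command === null) return false
  switch (command.type) {
    case 'start':
      controller.start()
      break
    case 'stop':
      controller.stop()
      break
    case 'loadDeck':
      controller.loadDeck(command.deckUrl)
      break
  }
  return true
}
```

Validating the payload before acting on it keeps a malformed or unexpected message from putting the streaming service into an undefined state.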

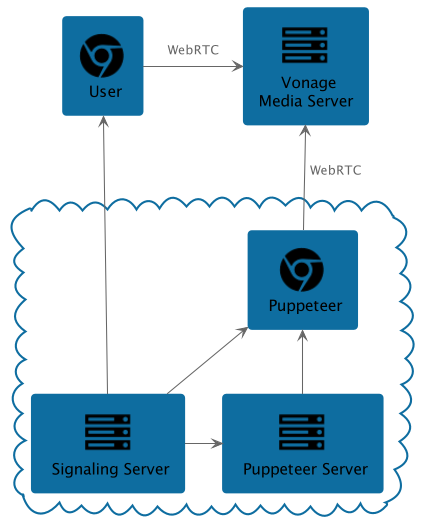

The following component diagram shows how Puppeteer can be hosted and launched from a server to run the web application that will render the slide deck stream:

The Puppeteer server can spin up an instance of Google Chrome and load the web page for the web application to stream the slide deck. The Puppeteer server may also host a simple server application to serve HTML and JavaScript for this integration. This solution makes it secure and easy to integrate with other service components of the application (e.g. signaling server, or API server).
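A minimal sketch of that launch step is shown here. It declares small structural types covering only the Puppeteer calls it uses (`launch`, `newPage`, `goto`, `close`) so that it stands on its own. The page URL, the `sessionId` and `deckId` query parameters, and the helper names are assumptions made for illustration.

```typescript
// Structural types for the few Puppeteer calls used in this sketch.
interface PageLike { goto(url: string): Promise<unknown> }
interface BrowserLike { newPage(): Promise<PageLike>; close(): Promise<void> }
interface LaunchOptionsLike { headless: boolean; args: string[] }
interface LauncherLike { launch(options: LaunchOptionsLike): Promise<BrowserLike> }

// Chrome flags that skip the media permission prompt (in case the page
// requests a microphone or camera) and allow media to autoplay.
function buildLaunchOptions(): LaunchOptionsLike {
  return {
    headless: true,
    args: [
      '--use-fake-ui-for-media-stream',
      '--autoplay-policy=no-user-gesture-required'
    ]
  }
}

// Build the URL of the (hypothetical) publisher page, passing the session
// and deck identifiers as query parameters.
function buildPublisherUrl(baseUrl: string, sessionId: string, deckId: string): string {
  const query = `sessionId=${encodeURIComponent(sessionId)}&deckId=${encodeURIComponent(deckId)}`
  return `${baseUrl}?${query}`
}

// Launch Chrome and load the publisher page; close the browser if loading fails.
async function startPublisherPage(launcher: LauncherLike, url: string): Promise<BrowserLike> {
  const browser = await launcher.launch(buildLaunchOptions())
  try {
    const page = await browser.newPage()
    await page.goto(url)
    return browser
  } catch (err) {
    await browser.close()
    throw err
  }
}
```

With the real library installed, this might be called as `startPublisherPage(puppeteer, buildPublisherUrl('http://localhost:3000/publisher', sessionId, deckId))`. Whether Puppeteer's exported types line up exactly with these interfaces depends on the installed version.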

Rendering Media for WebRTC on a Browser

The WebRTC samples project has a short guide on streaming from a canvas to a peer connection. With this in place, all that remains is to render the desired content onto the canvas, and it will be streamed to the media session.

HTML Canvas is flexible and powerful. It gives us fine-grained control over how each frame is rendered, which makes it easy to add things like name badges and other dynamic content.

The following image shows this workflow at a high level:

The application code can use this and integrate with a signaling server to control what images are being drawn onto the canvas.
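To make the drawing step concrete, here is a small sketch that scales a slide image to fit the canvas while preserving its aspect ratio, then overlays a name badge. The `Context2DLike` interface lists only the `CanvasRenderingContext2D` members the sketch uses. The helper names, badge position, and styling values are assumptions.

```typescript
interface Rect { x: number; y: number; width: number; height: number }

// Scale a srcW x srcH source to fit inside dstW x dstH, preserving the
// aspect ratio and centering the result.
function fitContain(srcW: number, srcH: number, dstW: number, dstH: number): Rect {
  const scale = Math.min(dstW / srcW, dstH / srcH)
  const width = srcW * scale
  const height = srcH * scale
  return { x: (dstW - width) / 2, y: (dstH - height) / 2, width, height }
}

// Subset of CanvasRenderingContext2D used by drawSlide.
interface Context2DLike<Img> {
  fillStyle: string | object
  font: string
  fillRect(x: number, y: number, w: number, h: number): void
  drawImage(image: Img, dx: number, dy: number, dw: number, dh: number): void
  fillText(text: string, x: number, y: number): void
}

// Draw one slide, letterboxed on a black background, with an optional badge.
function drawSlide<Img>(
  ctx: Context2DLike<Img>,
  canvasW: number,
  canvasH: number,
  slide: { image: Img; width: number; height: number },
  badge?: string
): void {
  ctx.fillStyle = '#000'
  ctx.fillRect(0, 0, canvasW, canvasH)
  const r = fitContain(slide.width, slide.height, canvasW, canvasH)
  ctx.drawImage(slide.image, r.x, r.y, r.width, r.height)
  if (badge) {
    // Semi-transparent strip in the lower-left corner with the presenter's name.
    ctx.fillStyle = 'rgba(0, 0, 0, 0.6)'
    ctx.fillRect(16, canvasH - 56, 320, 40)
    ctx.fillStyle = '#fff'
    ctx.font = '24px sans-serif'
    ctx.fillText(badge, 28, canvasH - 28)
  }
}
```

In the browser, `ctx` would typically come from `canvas.getContext('2d')` and `slide.image` would be a loaded image element. For example, a 1024x768 slide on a 1920x1080 canvas is drawn at 1440x1080 with a 240-pixel margin on each side.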

The following code snippet shows how this can be integrated with a Vonage Video API Publisher:

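```typescript
// 'apiKey', 'sessionId', and 'token' are placeholders for real credentials.
const session = OT.initSession('apiKey', 'sessionId')
const publisherWrapper = document.getElementById('publisherWrapper')
const canvas = document.getElementById('canvas') as HTMLCanvasElement

// Capture the canvas as a MediaStream and take its first video track.
const canvasVideoTrack = () => canvas.captureStream().getVideoTracks()[0]

const publisher = OT.initPublisher(publisherWrapper, {
  insertMode: 'append',
  width: '100%',
  height: '100%',
  name: 'Slide Deck Publisher',
  videoSource: canvasVideoTrack(),
  publishAudio: false
}, (err) => {
  if (err) {
    console.error('Unable to initialize slide deck publisher', err)
    return
  }
  // The session must be connected before a stream can be published to it.
  session.connect('token', (connectErr) => {
    if (connectErr) {
      console.error('Unable to connect to the session', connectErr)
      return
    }
    session.publish(publisher)
  })
})
```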

The power here comes from canvas.captureStream().getVideoTracks(), which returns the video tracks of a media stream captured from the HTML canvas element. From this point on, anything the application code renders onto the canvas is published as video into the Vonage session.

The application can then listen to signals from the signaling server to update what is being rendered onto the canvas.
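One way to structure that update logic is sketched below: a small controller keeps track of the current slide and redraws only when the slide actually changes. The signal shapes (`next`, `previous`, `goto`) and the function names are hypothetical. The returned handler would be wired to whatever signaling client the application uses.

```typescript
// Hypothetical navigation signals; the shapes are illustrative only.
type SlideSignal =
  | { type: 'next' }
  | { type: 'previous' }
  | { type: 'goto'; index: number }

// Compute the next slide index, clamped to the valid range of the deck.
function nextSlideIndex(current: number, slideCount: number, signal: SlideSignal): number {
  const target =
    signal.type === 'next' ? current + 1
    : signal.type === 'previous' ? current - 1
    : signal.index
  if (!Number.isFinite(target)) return current
  return Math.min(Math.max(Math.trunc(target), 0), slideCount - 1)
}

// Create a handler that tracks the current slide and calls render(index)
// once for the first slide and again whenever the slide changes.
function createDeckController(slideCount: number, render: (index: number) => void) {
  if (slideCount < 1) throw new Error('A deck needs at least one slide')
  let index = 0
  render(index)
  return (signal: SlideSignal): number => {
    const updated = nextSlideIndex(index, slideCount, signal)
    if (updated !== index) {
      index = updated
      render(index)
    }
    return index
  }
}
```

Here `render` stands in for the function that draws a given slide onto the canvas. Because the canvas is the publisher's video source, each redraw reaches subscribers as new video frames.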

Other Types of Media

Other types of media can also be streamed in this setup. The Puppeteer server makes it possible to publish almost any kind of content into the Vonage session. For example, an MP4 video can be streamed by loading it in an HTML <video> element, capturing it with HTMLMediaElement.captureStream(), and passing the resulting video track to the Vonage Publisher as its videoSource.
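A small helper for pulling a video track out of such an element might look like the sketch below. It assumes the browser exposes `captureStream()` on media elements, as Chrome does. TypeScript's DOM typings may not declare that method for `<video>`, so the helper accepts any object with a matching shape. The names `CapturableSource` and `firstVideoTrack` are hypothetical.

```typescript
// Anything that can be captured as a stream with video tracks,
// such as a <canvas> or (in Chrome) a <video> element.
interface CapturableSource<Track> {
  captureStream(): { getVideoTracks(): Track[] }
}

// Return the first video track of the captured stream, or throw if there is none.
function firstVideoTrack<Track>(source: CapturableSource<Track>): Track {
  const [track] = source.captureStream().getVideoTracks()
  if (track === undefined) {
    throw new Error('Source produced no video track')
  }
  return track
}
```

For a `<video>` element, a track may only become available once the media has loaded, so calling the helper after playback has started is the safer choice. The returned track can then be passed as the `videoSource` option of `OT.initPublisher`.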

As application development capabilities improve, new and innovative features like these can go a long way. They mark the difference between a video conferencing application with just the basic features and one that provides a rich and innovative user experience.

Are you interested in building live video into your application, complete with rich experiences like these? Our expert team can help! Contact us today.