In today’s remote world where communication technologies are becoming more and more critical, it is no surprise that WebRTC usage has hit an all-time high. The fact that WebRTC is open source allows developers to build technologies that make it easier to stream data. One of those technologies is MediaSoup. MediaSoup is a media server that enables developers to build group chats, one-to-many broadcasts, and real-time streaming.

Today, we are going to build a basic voice and chat application with MediaSoup and explain how it is done. The full app, including instructions on how to run it, can be found on GitHub. In this blog post, we will touch on some of the highlights of how it works.

What is MediaSoup?

MediaSoup is an open source SFU WebRTC server. (Check out this blog post for more information about SFUs and MCUs!) It can relay audio and video, and it supports SCTP data channels. It includes a Node.js module that handles only the low-level media layer, as well as several client libraries.

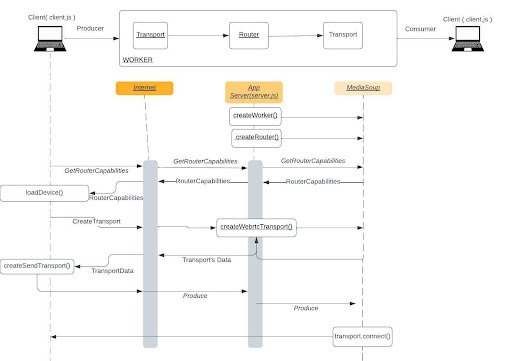

MediaSoup Architecture

If you take a look at the architecture, you will see that MediaSoup is organized into several layers: workers, routers, transports, producers, and consumers.

- A worker is a C++ subprocess that runs on a single CPU thread and is managed by MediaSoup. A worker can host multiple routers. Each router behaves as an SFU and is responsible for the media transfer between producers and consumers.

- WebRTC transports contain the consumers or producers. Simply put, producers are created to send data to the server and consumers are created to receive data from the server. In today’s sample application, suppose N participants are on the audio call: there will be N producers in total, one per participant. Each participant will also have N-1 consumers, because every participant has to consume everyone else’s stream.
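
To make the arithmetic concrete, here is a small sketch that counts the producers and consumers an audio-only call would need. The `countStreams` helper is made up for illustration, and it assumes each participant publishes exactly one audio track.

```javascript
// Hypothetical helper: counts the producers and consumers needed for an
// audio-only call, assuming one audio track per participant.
function countStreams(participants) {
  if (!Number.isInteger(participants) || participants < 0) {
    throw new RangeError('participants must be a non-negative integer');
  }
  const producers = participants; // one producer per participant
  const consumersPerParticipant = Math.max(participants - 1, 0); // everyone else's audio
  const totalConsumers = participants * consumersPerParticipant;
  return { producers, consumersPerParticipant, totalConsumers };
}

console.log(countStreams(4));
// { producers: 4, consumersPerParticipant: 3, totalConsumers: 12 }
```

The total number of consumers is N × (N-1), so it grows much faster than the number of participants.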

Let’s dive into the example for more details.

Implementing Our Sample MediaSoup App

The application will be a tutorial app with one worker, one router, and several transports for both consuming and producing. There will also be data channel producers and consumers. Overall, it will look like this for publishing:

Initializing the application

The application server will be an Express app whose socket layer is implemented with Socket.IO, an event-driven JavaScript library for real-time web applications. The server is initialized as follows:

    (async () => {
      try {
        await runExpressApp();
        await runWebServer();
        await runSocketServer();
        await runMediasoupWorker();
      } catch (err) {
        console.error(err);
      }
    })();

Here, we are creating the server and initializing the mediasoup worker thread. While we are creating the worker, we are creating the router since we don’t require more than one router for the tutorial application.
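
The worker and router setup reads its settings from a `config` object that this post does not show. The sketch below is one plausible shape for it. The port range, log settings, and codec list are assumptions chosen for an audio-only demo, not the values used in the sample repository.

```javascript
// Assumed shape of the config object read by the server code.
// All values are illustrative.
const config = {
  mediasoup: {
    worker: {
      logLevel: 'warn', // one of 'debug', 'warn', 'error', 'none'
      logTags: ['info', 'ice', 'dtls', 'rtp'],
      rtcMinPort: 10000, // lower bound of the port range used for media
      rtcMaxPort: 10100, // upper bound of the port range used for media
    },
    router: {
      // Audio-only call: Opus at 48 kHz with two channels.
      mediaCodecs: [
        { kind: 'audio', mimeType: 'audio/opus', clockRate: 48000, channels: 2 },
      ],
    },
  },
};
```

The `runMediasoupWorker()` function passes the `worker` values to `mediasoup.createWorker()` and the `mediaCodecs` list to `worker.createRouter()`.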

    async function runMediasoupWorker() {
      // One worker is enough for the tutorial app.
      worker = await mediasoup.createWorker({
        logLevel: config.mediasoup.worker.logLevel,
        logTags: config.mediasoup.worker.logTags,
        rtcMinPort: config.mediasoup.worker.rtcMinPort,
        rtcMaxPort: config.mediasoup.worker.rtcMaxPort,
      });

      // If the worker process dies, log it and shut the server down.
      worker.on('died', () => {
        console.error('mediasoup worker died, exiting in 2 seconds... [pid:%d]', worker.pid);
        setTimeout(() => process.exit(1), 2000);
      });

      // Create the single router and register it under the room name.
      const mediaCodecs = config.mediasoup.router.mediaCodecs;
      mediasoupRouter = await worker.createRouter({ mediaCodecs });
      rooms[roomName] = {
        router: mediasoupRouter,
      };
    }

The client-side code is a basic Socket.IO client that connects to the server and sends the required commands. It implements the publish() and subscribe() methods, which are available in the source code.
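
The client snippets in this post call `socket.request()`, which is not a built-in Socket.IO method. One way to get it is to wrap `socket.emit()` in a promise. The sketch below assumes the server answers every message through the Socket.IO acknowledgement callback. The `withRequest` name is hypothetical.

```javascript
// Hypothetical helper: adds a promise-based request() to a Socket.IO client.
// Assumes the server replies through the acknowledgement callback, as in
// socket.on('someEvent', (data, callback) => callback(result)).
function withRequest(socket) {
  socket.request = (type, data = {}) =>
    new Promise((resolve) => {
      // A function passed as the last emit() argument is called with the
      // server's acknowledgement payload.
      socket.emit(type, data, resolve);
    });
  return socket;
}

// Usage inside an async function, with a connected Socket.IO client `socket`:
//   withRequest(socket);
//   const caps = await socket.request('getRouterRtpCapabilities');
//   await loadDevice(caps);
```

This version never rejects, so callers check for an `error` field in the response, which matches how the server handlers in this post report failures.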

While connecting to the WebSocket, the client also initializes the device for client-side MediaSoup operations. It requests the routerRtpCapabilities from the application server with the getRouterRtpCapabilities message, and the server returns them from its router.rtpCapabilities. The server-side implementation looks like this:

    socket.on('getRouterRtpCapabilities', (data, callback) => {
      callback(mediasoupRouter.rtpCapabilities);
    });

The client-side method that loads the device looks like the following:

    async function loadDevice(routerRtpCapabilities) {
      try {
        device = new mediasoup.Device();
      } catch (error) {
        if (error.name === 'UnsupportedError') {
          console.error('browser not supported');
        }
        // Without a device there is nothing to load, so propagate the error.
        throw error;
      }
      await device.load({ routerRtpCapabilities });
    }

Connecting to the producer

After the initial connection, the client starts the producer. The process includes these steps:

- Client.js sends a request to server.js to create the transport.

    const data = await socket.request('createProducerTransport', {
      forceTcp: false,
      rtpCapabilities: device.rtpCapabilities,
      sctpCapabilities: device.sctpCapabilities,
    });
    if (data.error) {
      console.error(data.error);
      return;
    }

- A WebRtcTransport is created on server.js by router.createWebRtcTransport() (https://mediasoup.org/documentation/v3/mediasoup/api/#router-createWebRtcTransport)

    socket.on('createProducerTransport', async (data, callback) => {
      try {
        const { transport, params } = await createWebRtcTransport();
        // Register the transport for this room and socket.
        addTransport(transport, roomName, false, socket.id, false);
        callback(params);
      } catch (err) {
        console.error(err);
        callback({ error: err.message });
      }
    });

- The transport is replicated on client.js from the response data with device.createSendTransport() (https://mediasoup.org/documentation/v3/mediasoup-client/api/#device-createSendTransport)

    const transport = device.createSendTransport({
      ...data,
      iceServers: [{ urls: 'stun:stun1.l.google.com:19302' }],
    });

- Client.js subscribes to the transport's connect and produce events.

- Client.js uses getUserMedia to obtain the necessary tracks and calls transport.produce() (https://mediasoup.org/documentation/v3/mediasoup/api/#transport-produce)

- The transport emits the connect and produce events, and transport.produce() returns a producer instance on the client side, which transfers the data.
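
The connect and produce subscriptions from the steps above are not shown in this post, so here is a minimal sketch. It assumes a promise-returning `request(type, data)` function such as the `socket.request()` used earlier. It also assumes the server listens for messages named `connectProducerTransport` and `produce` and answers the latter with `{ id }`. Those message names and the `wireSendTransport` helper are illustrative. The event arguments follow the mediasoup-client transport events.

```javascript
// Hypothetical helper: forwards send-transport events to the signaling server.
// `transport` is the object returned by device.createSendTransport(...);
// `request` is any function (type, data) => Promise.
function wireSendTransport(transport, request) {
  // Emitted when the transport needs to connect: the server must receive the
  // client's DTLS parameters before media can flow.
  transport.on('connect', ({ dtlsParameters }, callback, errback) => {
    request('connectProducerTransport', { dtlsParameters })
      .then(() => callback())
      .catch(errback);
  });

  // Emitted for each transport.produce() call: the server creates its own
  // producer and reports the producer id back to the client library.
  transport.on('produce', ({ kind, rtpParameters }, callback, errback) => {
    request('produce', { transportId: transport.id, kind, rtpParameters })
      .then(({ id }) => callback({ id }))
      .catch(errback);
  });
}
```

Both handlers must call either `callback` or `errback`; otherwise the pending `transport.produce()` call on the client never settles.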

    navigator.mediaDevices.getUserMedia(mediaConstraints).then(async (stream) => {
      // Wrap the <audio> element in a container. The two ids must differ so
      // that getElementById() returns the <audio> element, not the wrapper.
      const newElem = document.createElement('div');
      newElem.setAttribute('id', 'localAudioContainer');
      newElem.innerHTML = '<audio id="localAudio" autoplay></audio>';
      videoContainer.appendChild(newElem);
      document.getElementById('localAudio').srcObject = stream;

      const track = stream.getAudioTracks()[0];
      const params = {
        track,
        codecOptions: {
          opusStereo: true,
          opusDtx: true,
        },
      };
      // produce() returns a promise that resolves to the producer.
      producer = await transport.produce(params);
    });

Connecting to the consumer

Very similar steps will be taken to connect to the consumer. The process is:

- Client.js sends a createTransport request to server.js.

- router.createWebRtcTransport() is called and the transport data is sent to client.js.

- The receive transport is created on client.js with device.createRecvTransport().

- A key difference from producing is that device.rtpCapabilities must be sent to server.js, and the server calls the router.canConsume() method to check whether the client can receive the media stream.

- This time, the server calls transport.consume(), and the resulting consumer is replicated on the client side.
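
The server side of the last two steps can be sketched as follows. The `createConsumer` helper and its return shape are assumptions made for illustration. `router.canConsume()` and `transport.consume()` are the mediasoup calls named in the steps above.

```javascript
// Hypothetical server-side helper for the consume step.
// `router` and `transport` are the mediasoup router and WebRTC transport;
// `rtpCapabilities` is the device.rtpCapabilities value sent by the client.
async function createConsumer(router, transport, producerId, rtpCapabilities) {
  // Refuse early if the client cannot receive this producer's media.
  if (!router.canConsume({ producerId, rtpCapabilities })) {
    return { error: 'client cannot consume this producer' };
  }

  const consumer = await transport.consume({ producerId, rtpCapabilities });

  // These fields are what the client needs to create its own consumer.
  return {
    id: consumer.id,
    producerId,
    kind: consumer.kind,
    rtpParameters: consumer.rtpParameters,
  };
}
```

On the client, the returned fields would be passed to `transport.consume({ id, producerId, kind, rtpParameters })` on the receive transport, and the resulting consumer's `track` can then be attached to an audio element.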

Producing and consuming over data channels works the same way as the methods described above. The only differences are the method names, and that sctpStreamParameters must be sent in addition to the rtpCapabilities. Check out the source code for the full implementation.
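
As a rough illustration of the data channel variant, the sketch below wires the client-side `producedata` event of a send transport. It assumes a promise-returning `request(type, data)` function such as the `socket.request()` used earlier, and a server message named `produceData` that answers with `{ id }`. The message name and the `wireDataProducer` helper are hypothetical, and the sample app may structure this differently.

```javascript
// Hypothetical helper: forwards the 'producedata' event of a send transport
// to the signaling server. `request` is any function (type, data) => Promise.
function wireDataProducer(transport, request) {
  transport.on('producedata', ({ sctpStreamParameters, label, protocol }, callback, errback) => {
    request('produceData', {
      transportId: transport.id,
      sctpStreamParameters, // takes the place of rtpParameters for data
      label,
      protocol,
    })
      .then(({ id }) => callback({ id }))
      .catch(errback);
  });
}

// Usage inside an async function, after wiring the transport:
//   const dataProducer = await transport.produceData({ label: 'chat' });
//   dataProducer.on('open', () => dataProducer.send('hello'));
```

For this to work, the server-side transport has to be created with SCTP enabled (`enableSctp: true` in the `createWebRtcTransport()` options).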

Our application in action

Conclusion

MediaSoup is a great SFU that you can easily integrate into your application by using its Node.js module. It is open source, so it can greatly reduce the expenses for your project. However, it does have a steep learning curve.

Here at WebRTC.ventures, we are experienced in using a wide range of technologies to meet your real-time communication needs. Let us know how we can help you build your WebRTC application!