With APIs such as the Amazon Machine Learning API, the Google Cloud Vision API, and the IBM Watson Discovery API, it’s becoming easier to derive insights from your images and videos. Features you can build relatively quickly on these platforms include object detection, product search, text extraction from images, and explicit content detection.

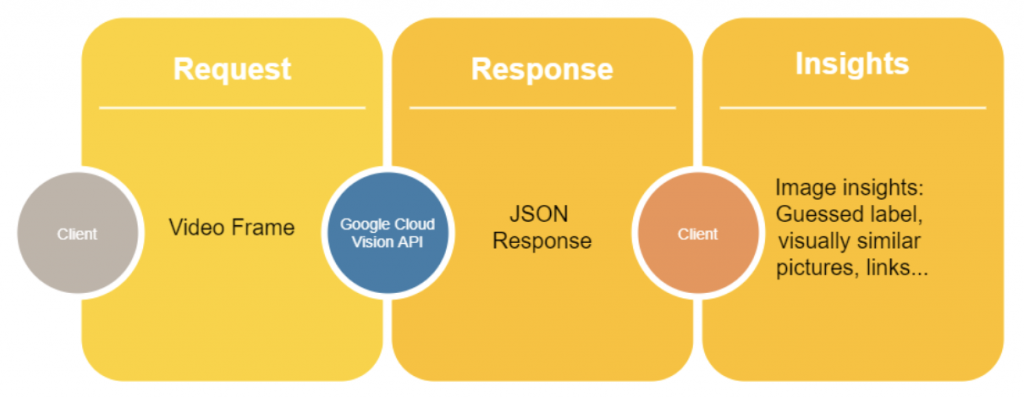

In this demo, we’ll show how to start using the Google Cloud Vision API with live video. We’ll assume that we want to learn more about what we are watching and, ideally, find a matching object or emotion that appears in the video.

To get familiar with the basics, we’ll start by experimenting with the Google Cloud Vision API. You can try it without writing any code just by pasting an image here. It’s a powerful tool with many image detection options. For this example, we will use web detection, which finds web references to an image.

Testing Google Cloud Vision

We will send the following request, which points to our stored test image, shown in Figure 2.

Request body:

    {
      "requests": [
        {
          "features": [
            {
              "type": "WEB_DETECTION"
            }
          ],
          "image": {
            "source": {
              "imageUri": "gs://tests-xxxx/test_picture.jpg"
            }
          }
        }
      ]
    }

The response body will look like this:

{

"responses": [

{

"webDetection": {

"webEntities": [

{

"entityId": "/m/02bccw",

"score": 0.52201724,

"description": "Inca rope bridge"

},

{...}

],

"visuallySimilarImages": [

{

"url": "http://staceyrobinsmith.com/wp-content/uploads/2010/09/Lynn-Canyon-003.jpg"

},

{...}

],

"bestGuessLabels": [

{

"label": "lynn canyon suspension bridge"

}

]

}

}

]

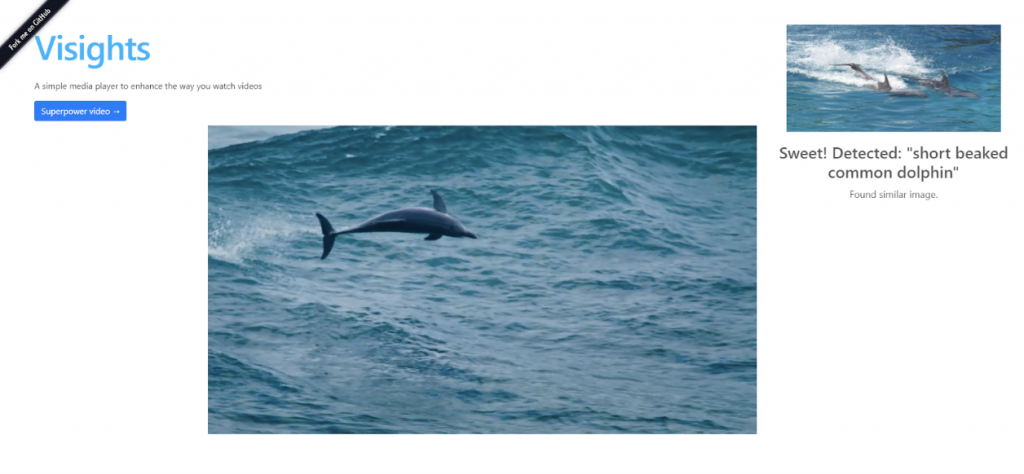

}As you can see, Google Cloud Vision guessed the location where the picture was taken and even got a similar picture!
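Here is a rough sketch of how a request like this might be built, sent, and summarized in JavaScript. The helper names (`buildWebDetectionRequest`, `summarizeWebDetection`, `annotate`) are made up for illustration, and authenticating with an API key in the `key` query parameter is an assumption about how your project is set up.

```javascript
// Sketch only: helper names are illustrative, not part of any Google SDK.
const VISION_ENDPOINT = 'https://vision.googleapis.com/v1/images:annotate';

// Build a WEB_DETECTION request for an image stored in Cloud Storage.
function buildWebDetectionRequest(imageUri) {
  return {
    requests: [
      {
        features: [{ type: 'WEB_DETECTION' }],
        image: { source: { imageUri } },
      },
    ],
  };
}

// Pull the best-guess label, the highest-scoring web entity, and the
// similar-image URLs out of a response body.
function summarizeWebDetection(responseBody) {
  const first = (responseBody.responses || [])[0] || {};
  const web = first.webDetection || {};
  const entities = (web.webEntities || [])
    .filter((e) => typeof e.score === 'number')
    .sort((a, b) => b.score - a.score);
  return {
    bestGuess: (web.bestGuessLabels || []).map((l) => l.label)[0] || null,
    topEntity: entities.length > 0 ? entities[0].description || null : null,
    similarImageUrls: (web.visuallySimilarImages || []).map((i) => i.url),
  };
}

// POST the request. API-key auth via the `key` query parameter is assumed.
async function annotate(body, apiKey, fetchImpl = fetch) {
  const url = `${VISION_ENDPOINT}?key=${encodeURIComponent(apiKey)}`;
  const res = await fetchImpl(url, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(body),
  });
  if (!res.ok) throw new Error(`Vision API request failed: ${res.status}`);
  return res.json();
}
```

You could then call `annotate(buildWebDetectionRequest('gs://tests-xxxx/test_picture.jpg'), apiKey)` and pass the result to `summarizeWebDetection` to get just the fields you want to display.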

Building a demo web app

Now it’s time to code. The API is relatively standard and easy to use. The main challenge comes from grabbing the video frames and sending them in the right format.

Here I built a basic web app that does this with videos. When you want to get insights from an image in the video, all you need to do is capture the frame to get the information. I also used plyr.js here to make the video player nicer.

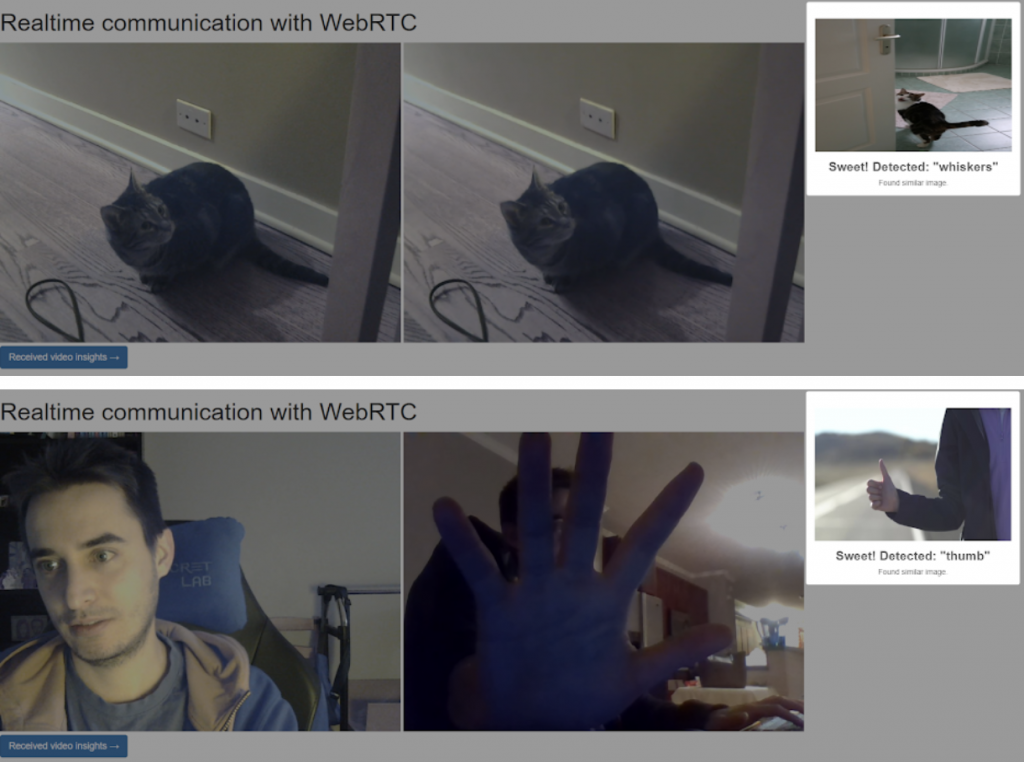
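To give an idea of what the frame-grabbing step can look like, here is a minimal sketch rather than the exact code from the demo app. It draws the current frame of a `<video>` element onto a canvas, converts it to base64 JPEG, and places it in the request's `image.content` field instead of `image.source`. The function names and the JPEG quality of 0.8 are my own choices for illustration.

```javascript
// Sketch only: function names are illustrative.

// Draw the current frame onto a canvas and return it as base64-encoded JPEG.
// A canvas can be passed in for reuse; by default a new one is created.
function captureFrameAsBase64(video, canvas = document.createElement('canvas')) {
  canvas.width = video.videoWidth;
  canvas.height = video.videoHeight;
  canvas.getContext('2d').drawImage(video, 0, 0, canvas.width, canvas.height);
  const dataUrl = canvas.toDataURL('image/jpeg', 0.8);
  // toDataURL returns "data:image/jpeg;base64,<payload>";
  // the API expects only the payload.
  return dataUrl.slice(dataUrl.indexOf(',') + 1);
}

// Wrap the inline image bytes in a WEB_DETECTION request.
function buildFrameRequest(base64Jpeg) {
  return {
    requests: [
      {
        features: [{ type: 'WEB_DETECTION' }],
        image: { content: base64Jpeg },
      },
    ],
  };
}
```

One thing to watch for: if the video comes from another origin without CORS headers, the canvas becomes tainted and `toDataURL` throws a `SecurityError`.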

What if I want to do it with a live stream?

Well, that’s fairly trivial, since we were already using a <video> element in the previous application. The same code applies to WebRTC video apps: grab the video frame you want and send it while watching the live stream. In this repository, I integrated the previous example into a WebRTC application. Try it out!
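For a sense of how the live case might be wired up, here is a small sketch. It is not the code from the repository. It attaches the remote stream to the `<video>` element when a track arrives and only captures once the element actually has a frame to draw. The function names are hypothetical, and `captureFrame` and `sendFrame` stand in for whatever capture and upload functions your app already has.

```javascript
// Sketch only: names are illustrative, not taken from the demo repository.

// Show the remote stream in a <video> element when a track arrives.
function attachRemoteStream(peerConnection, video) {
  peerConnection.addEventListener('track', (event) => {
    const stream = event.streams[0];
    if (stream && video.srcObject !== stream) {
      video.srcObject = stream;
    }
  });
}

// A live <video> only has pixels to capture once it holds current frame data.
// readyState 2 is HAVE_CURRENT_DATA on HTMLMediaElement.
function canCaptureFrame(video) {
  return video.readyState >= 2 && video.videoWidth > 0 && video.videoHeight > 0;
}

// Capture a frame on demand, for example from a button click.
// `captureFrame` and `sendFrame` are supplied by the caller.
function analyzeCurrentFrame(video, captureFrame, sendFrame) {
  if (!canCaptureFrame(video)) return null;
  return sendFrame(captureFrame(video));
}
```

Capturing on demand keeps the number of API calls low; if you sample frames on a timer instead, you will probably want to throttle the requests.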

Let’s get started

At WebRTC.ventures, we love to tinker with new technologies. We build interactive, customizable live streaming video applications, call centers, and many other real-time data apps. There are many great opportunities in the field of video and audio communications. Contact us to build the next generation of video applications!