In 2015, we shared an article about applying effects to WebRTC in real time. A few things have changed since then. Today we bring you a new post about effects in WebRTC — this time discussing one of the most popular features of today’s social media apps: filters.

Requirements

To follow along, you’ll need Git, Node.js, and npm installed. Installation steps vary by operating system, so refer to the official documentation when necessary.

Once you have everything installed, we’ll build on top of a working WebRTC application: the webrtc branch of our webrtc-video-conference-tutorial GitHub repo. (You might want to check out our eCourse about this!)

The finished code for this tutorial is available in the webrtc-filters-tutorial repo.

The What, When, and How

The goal of this tutorial is to establish a WebRTC call and apply a filter to the video stream we send to the other user. To do this, we need to manipulate the stream we get from the getUserMedia API before adding it to the RTCPeerConnection object.

To achieve this, we’ll draw the camera video and our filters onto an HTML canvas element, then get a new stream from that canvas with the HTMLCanvasElement.captureStream() method.

We also need face detection so the filters are drawn in the right place on the user’s face. For this, we will use the face-api.js library.

Steps

The first step is to clone the base repo and switch to the webrtc branch. From the terminal, navigate to an appropriate folder and run the following commands:

git clone git@github.com:agilityfeat/webrtc-video-conference-tutorial.git
cd webrtc-video-conference-tutorial
git checkout webrtc

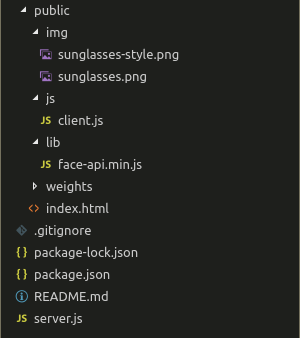

Now we have to make a few changes to the file structure. First, move the client.js file into its own js folder. Then add the face-api.js library: download it here and place it under the lib folder. Next, download the weights folder (https://github.com/agilityfeat/webrtc-filters-tutorial/tree/master/public/weights) and put it under public. You can also download these files from the official face-api.js repo.
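The models in the weights folder have to be loaded before any face detection can run. The snippet below is a minimal sketch, not the tutorial’s own code: it assumes the app uses face-api.js’s tiny face detector together with the tiny 68-point landmark model, and that public/weights is served at the `/weights` URL. `loadFaceModels` is a hypothetical helper name, and the `faceapi` global is passed in explicitly so the function has no hidden dependencies. Check that your weights folder actually contains the files for whichever models you load.

```javascript
// Hypothetical helper: loads the face-api.js models this sketch relies on.
// Assumption: the weights folder is served at `weightsUrl` (public/weights -> /weights).
async function loadFaceModels(faceapi, weightsUrl = '/weights') {
  // Load the face detector and the landmark model in parallel.
  await Promise.all([
    faceapi.nets.tinyFaceDetector.loadFromUri(weightsUrl),
    faceapi.nets.faceLandmark68TinyNet.loadFromUri(weightsUrl),
  ]);
}

// In the browser, the face-api.js script tag exposes a global `faceapi` object:
// loadFaceModels(window.faceapi).then(() => console.log('Face models ready'));
```

Passing `faceapi` as a parameter is a design choice for the sketch; calling the global directly inside the function works just as well in a plain script.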

We also need to add the images that we will use as filters. You can use any images you’d like; just note that you’ll need to adjust the drawing coordinates to fit them into the video. The images used in this tutorial can be downloaded here.
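Adjusting the drawing coordinates usually comes down to scaling and positioning each image relative to the eyes. The function below is one possible approach, not the tutorial’s own code: `glassesRect` is a hypothetical helper that takes the centers of the two eyes (in canvas pixels) and the overlay image’s natural size, makes the overlay a fixed multiple of the eye distance wide (`widthFactor`, an assumed default of 2), keeps its aspect ratio, and centers it between the eyes. It ignores head tilt.

```javascript
// Hypothetical helper: computes where to draw a glasses image on the canvas.
// leftEye / rightEye: {x, y} eye centers in canvas pixels.
// imgWidth / imgHeight: natural size of the overlay image.
// widthFactor: overlay width as a multiple of the eye distance (assumed value; tune per image).
function glassesRect(leftEye, rightEye, imgWidth, imgHeight, widthFactor = 2) {
  const eyeDistance = Math.hypot(rightEye.x - leftEye.x, rightEye.y - leftEye.y);
  const width = eyeDistance * widthFactor;
  // Preserve the image's aspect ratio.
  const height = width * (imgHeight / imgWidth);
  // Center the overlay on the midpoint between the eyes.
  const centerX = (leftEye.x + rightEye.x) / 2;
  const centerY = (leftEye.y + rightEye.y) / 2;
  return { x: centerX - width / 2, y: centerY - height / 2, width, height };
}

// Eyes 100 px apart and a 400x150 image give a 200x75 rectangle centered at (150, 100).
console.log(glassesRect({ x: 100, y: 100 }, { x: 200, y: 100 }, 400, 150));
// { x: 50, y: 62.5, width: 200, height: 75 }
```

The returned object maps directly onto the four position and size arguments of `CanvasRenderingContext2D.drawImage`. Different images may need a different `widthFactor` or a vertical offset, which is exactly the per-image adjustment mentioned above.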

At this point, you should have a file structure similar to the image below. Note our filters under the img folder.

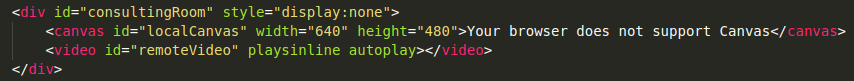

Next, open index.html and replace the localVideo tag with a canvas, as shown below.

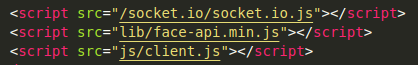

Update the script tag for client.js so it points to the file’s new location in the js folder, and add a new script tag for face-api.js.

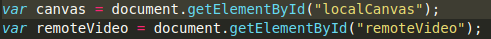

Now open js/client.js. We’ll begin by removing the reference to the deleted video tag and adding a reference to the canvas.

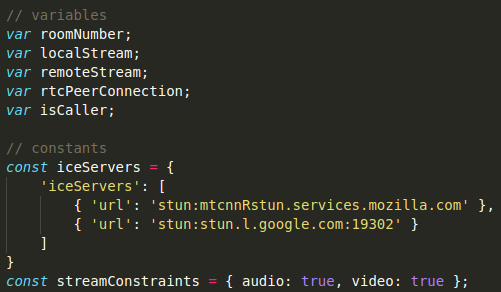

After that, we will reorganize the code a little bit. First, rearrange the variables and constants as shown below.

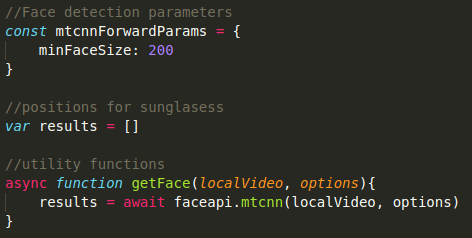

Next, we’ll set the parameters for face detection and add an array to store the detected face coordinates. We’re also adding a function that retrieves those coordinates.

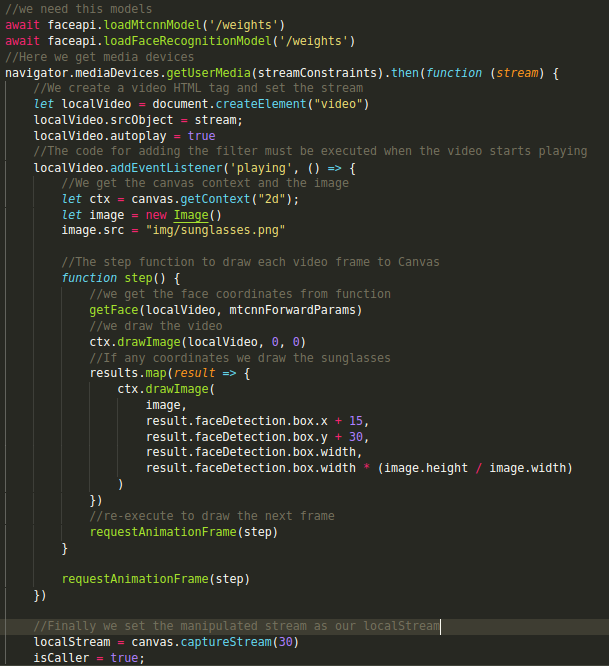

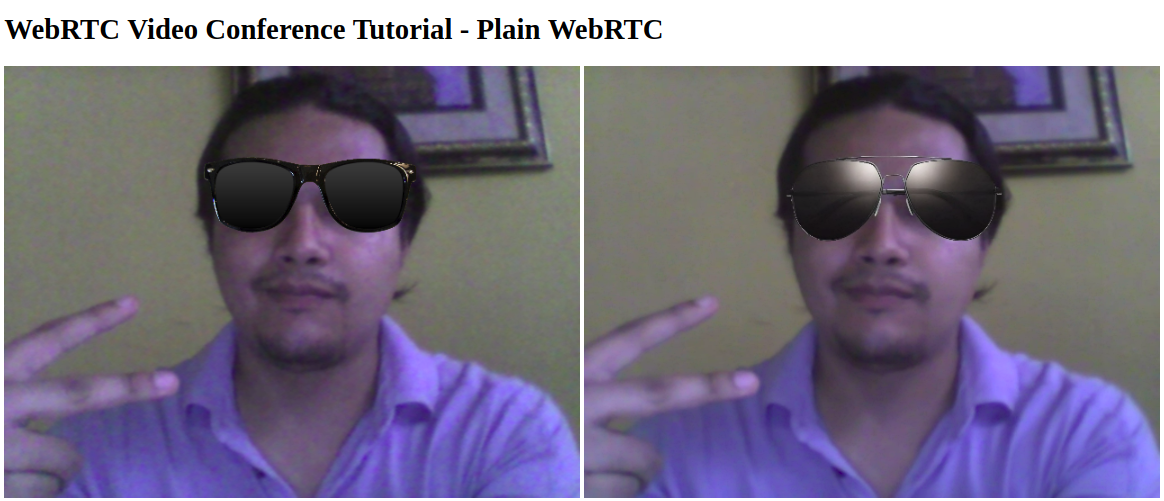
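The screenshots above show the tutorial’s actual code. As a rough sketch of the same idea, assuming the tiny face detector and tiny landmark model are already loaded, the snippet below runs one detection on an input element and stores the two eye centers in an array. `DETECTOR_PARAMS`, `eyeCoords`, `centerOf`, and `updateEyeCoords` are hypothetical names, and the `inputSize` and `scoreThreshold` values are assumptions to tune for your camera and CPU. The landmark coordinates are relative to the input’s own dimensions, so they line up with the canvas only if the canvas has the same size as the video.

```javascript
// Assumed detector parameters (inputSize must be divisible by 32).
const DETECTOR_PARAMS = { inputSize: 224, scoreThreshold: 0.5 };

// Hypothetical store for the latest eye positions: [leftEyeCenter, rightEyeCenter].
const eyeCoords = [];

// Averages a list of {x, y} points into a single center point.
function centerOf(points) {
  const sum = points.reduce((acc, p) => ({ x: acc.x + p.x, y: acc.y + p.y }), { x: 0, y: 0 });
  return { x: sum.x / points.length, y: sum.y / points.length };
}

// Runs one detection on `input` (e.g. the hidden <video> element) and updates eyeCoords.
// Returns true if a face was found; otherwise returns false and keeps the previous coordinates.
async function updateEyeCoords(faceapi, input) {
  const options = new faceapi.TinyFaceDetectorOptions(DETECTOR_PARAMS);
  // withFaceLandmarks(true) selects the tiny 68-point landmark model.
  const result = await faceapi.detectSingleFace(input, options).withFaceLandmarks(true);
  if (!result) return false;
  const { landmarks } = result;
  eyeCoords[0] = centerOf(landmarks.getLeftEye());
  eyeCoords[1] = centerOf(landmarks.getRightEye());
  return true;
}
```

Keeping the last known coordinates when detection misses a frame helps stop the filter from flickering in and out.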

Now we’re ready to apply the filter to our stream. For this tutorial, we’ll assign a fixed filter to each user: User 1 gets normal sunglasses and User 2 gets a more stylish pair.

The idea is this: after calling getUserMedia, we attach the resulting stream to a video element. We then draw that element’s frames onto a canvas, where we can draw the filter on top.

Finally, we get a stream from the canvas using the captureStream() method and add it to our RTCPeerConnection, so the other user receives the stream with the filter applied.

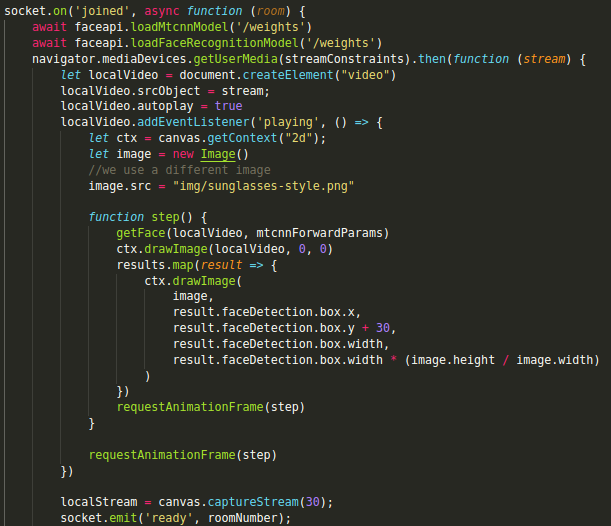
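The pipeline described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the tutorial’s exact code: `drawFilteredFrame` and `startFilteredStream` are hypothetical names, `getOverlayRect` is a hypothetical callback that returns `{x, y, width, height}` (for example, computed from detected eye positions) or `null` when no face is known, and the stream is attached with `RTCPeerConnection.addTrack`. If your base code still uses the older `addStream`, pass the filtered stream there instead.

```javascript
// Draws one output frame: the camera image, then the filter image on top if a position is known.
function drawFilteredFrame(ctx, video, overlay, rect) {
  ctx.drawImage(video, 0, 0, ctx.canvas.width, ctx.canvas.height);
  if (rect) {
    ctx.drawImage(overlay, rect.x, rect.y, rect.width, rect.height);
  }
}

// Browser-only sketch: camera stream -> hidden <video> -> canvas -> captured stream -> peer connection.
async function startFilteredStream(cameraStream, canvas, overlay, getOverlayRect, peerConnection, fps = 30) {
  const video = document.createElement('video');
  video.srcObject = cameraStream;
  video.muted = true;       // muted playback may start without a user gesture
  video.playsInline = true;
  await video.play();

  // Match the canvas to the camera resolution so face coordinates line up.
  canvas.width = video.videoWidth;
  canvas.height = video.videoHeight;
  const ctx = canvas.getContext('2d');

  // Redraw on every animation frame.
  const loop = () => {
    drawFilteredFrame(ctx, video, overlay, getOverlayRect());
    requestAnimationFrame(loop);
  };
  loop();

  // The canvas stream carries video only, so forward the camera's audio tracks too.
  const filtered = canvas.captureStream(fps);
  cameraStream.getAudioTracks().forEach(track => filtered.addTrack(track));
  filtered.getTracks().forEach(track => peerConnection.addTrack(track, filtered));
  return filtered;
}
```

Browsers pause `requestAnimationFrame` in background tabs, so the outgoing video freezes while the tab is hidden. That is acceptable for a demo but worth knowing before building on this approach.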

In the base application, getUserMedia is executed both when User 1 creates a new room and when User 2 joins it. We’ll use each of these to set a different filter for each user.

Modify the getUserMedia code for the created event as follows.

Add similar code to the joined event, this time specifying a different image.
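One way to wire a different image to each event is sketched below. The image paths are hypothetical (use the file names you placed under img), and `startCall(filterSrc, room)` is a hypothetical function standing in for the getUserMedia and canvas code of each handler. The sketch also assumes the base app’s socket emits `created` and `joined` with the room as the handler argument.

```javascript
// Hypothetical image paths; replace them with your own files under img/.
const FILTER_BY_EVENT = {
  created: 'img/glasses1.png', // User 1: normal sunglasses
  joined: 'img/glasses2.png',  // User 2: a more stylish pair
};

// Registers one handler per room event. Each handler starts the call with its own filter.
// startCall(filterSrc, room) is hypothetical: it should run getUserMedia and the canvas pipeline.
function registerFilterHandlers(socket, startCall) {
  for (const eventName of Object.keys(FILTER_BY_EVENT)) {
    socket.on(eventName, room => startCall(FILTER_BY_EVENT[eventName], room));
  }
}

// Usage in the browser, assuming `socket` is the client's socket.io connection:
// registerFilterHandlers(socket, (filterSrc, room) => { /* getUserMedia + draw with filterSrc */ });
```

Keeping the event-to-image mapping in one object makes it easy to add more filters later without duplicating handler code.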

Now we’re ready to see our filters in action! Using the terminal, navigate to the project folder and run the application as follows.

npm install
node server.js

Then open two different Chrome windows and go to http://localhost:3000. Enter the same room number in both of them and click “Go.” You should see something like this:

Conclusion

As you can see, it’s fairly simple to add fancy effects like filters to a WebRTC application. Be sure to check out our other tutorials if you want to learn more about WebRTC!