Graphics Processing Units (GPUs) were originally designed to accelerate gaming, enabling complex graphics computations to run in parallel. Unlike Central Processing Units (CPUs), which excel at executing a few instruction streams at a time very quickly, GPUs are built for massive parallelism and handle thousands of operations simultaneously.

This fundamental difference makes GPUs ideal not just for rendering graphics but also for the large-scale data processing that has been driving advancements in WebRTC video streaming. This capability has also become essential for AI-driven applications, dramatically improving training and inference speed for deep learning models.

Let’s take a look at how GPUs enhance WebRTC applications across two critical areas: the fundamental advantages of parallel processing for real-time communication, including GPU-accelerated video streaming capabilities, and the revolutionary impact on AI features like speech processing and language models.

The GPU Advantage: Understanding Parallel Processing

The difference between CPU and GPU processing is quite dramatic. One of my favorite demonstrations of this comes from a classic Mythbusters episode, where they show how a GPU processes complex calculations orders of magnitude faster than a CPU.

NVIDIA was the pioneer in parallel processing breakthroughs. Later, companies like AMD, Intel, and more recently Apple and Google, have developed their own innovations in GPU technology, each bringing unique strengths to different aspects of performance.

Disclaimer: The author has shares in NVIDIA.

The Foundation of Real-Time Communication: Where CPUs Still Rule

Before we dive too deep into GPU capabilities, it’s important to understand that not everything in WebRTC needs GPU acceleration. In fact, CPUs remain essential for many real-time communication processes.

In WebRTC media servers, for example, streaming is inherently sequential: packets are encoded, sometimes transcoded, and forwarded. This process is CPU-intensive, and throwing GPU power at it wouldn’t help much. Basic audio streaming typically runs efficiently on CPUs alone. Even some AI-powered voice applications, such as the popular open-source Voice Activity Detector (VAD), perform well without GPU acceleration.
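To give a sense of how lightweight this kind of audio work can be, here is a minimal sketch of an energy-based voice activity check. It is not the open-source VAD mentioned above, which relies on a trained model; it is a simpler stand-in technique, and the frame size, sample rate, and threshold are assumed values chosen for illustration.

```python
import math

FRAME_SIZE = 160          # assumed: 10 ms of audio at 16 kHz
ENERGY_THRESHOLD = 0.01   # assumed: tune for the actual microphone and noise floor


def frame_energy(frame):
    """Mean squared amplitude of one frame of samples in [-1.0, 1.0]."""
    return sum(s * s for s in frame) / len(frame)


def detect_voice(samples, frame_size=FRAME_SIZE, threshold=ENERGY_THRESHOLD):
    """Return one boolean per full frame: True where energy exceeds the threshold."""
    flags = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        flags.append(frame_energy(frame) > threshold)
    return flags


# Synthetic input: one silent frame followed by one frame of a 440 Hz tone.
silence = [0.0] * FRAME_SIZE
tone = [0.5 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(FRAME_SIZE)]
voice_flags = detect_voice(silence + tone)
```

Each frame needs only a few hundred multiplications, processed one frame after another, which is the kind of small sequential workload a CPU handles comfortably.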

GPU Acceleration in Video Streaming: Where Parallel Processing Shines

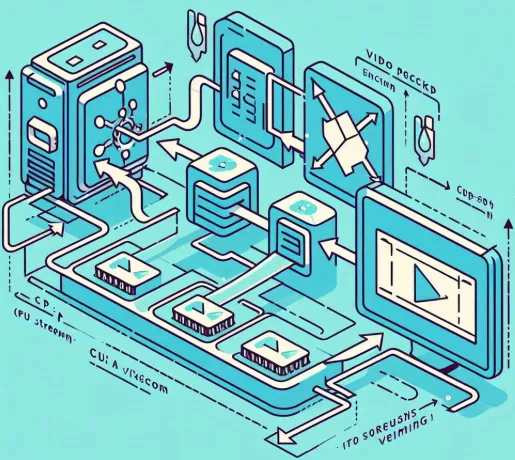

While the basic task of forwarding packets (even video packets) in WebRTC media servers might not need GPU power, there are some exceptions where a GPU helps. In these cases, GPUs are beneficial since video is inherently parallelizable. Video streams consist of countless pixels and frames that can be processed simultaneously, a perfect match for a GPU’s parallel processing capabilities.
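What "inherently parallelizable" means can be shown with a small sketch. The example below converts a frame to grayscale, an operation where every pixel is computed independently of every other pixel. The frame contents, worker count, and function names are assumptions for illustration. Python threads will not actually make this faster; the thread pool only demonstrates that rows can be handed to separate workers and still give the same result, which is the property a GPU exploits at a much larger scale.

```python
from concurrent.futures import ThreadPoolExecutor


def to_gray(pixel):
    """Convert one (R, G, B) pixel to a luma value using BT.601 weights."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def gray_row(row):
    """Convert one row of pixels; no row depends on any other row."""
    return [to_gray(p) for p in row]


def gray_frame_sequential(frame):
    """Process rows one after another."""
    return [gray_row(row) for row in frame]


def gray_frame_parallel(frame, workers=4):
    """Hand each row to a worker; map() returns results in row order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(gray_row, frame))


# A tiny synthetic 4x3 frame (a real 4K frame has over 8 million pixels).
frame = [[(x * 60, y * 80, 128) for x in range(4)] for y in range(3)]
sequential = gray_frame_sequential(frame)
parallel = gray_frame_parallel(frame)
```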

High-Resolution Video Processing

Encoding and decoding high-resolution 4K video streams in real-time without a GPU would significantly increase latency and place a strain on the CPU. GPUs make this process much more efficient by handling large volumes of data in parallel, enabling the real-time transmission of high-quality video.
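A quick back-of-the-envelope calculation shows how much data is involved. The sketch below assumes 30 frames per second and 8-bit YUV 4:2:0 frames (1.5 bytes per pixel), a common format for video encoders; other frame rates and pixel formats would change the numbers.

```python
def raw_bytes_per_second(width, height, fps, bytes_per_pixel=1.5):
    """Uncompressed video data rate; 1.5 bytes/pixel assumes 8-bit YUV 4:2:0."""
    return width * height * bytes_per_pixel * fps


uhd = raw_bytes_per_second(3840, 2160, fps=30)   # 4K UHD
hd = raw_bytes_per_second(1280, 720, fps=30)     # 720p

print(f"4K:   {uhd / 1e6:.0f} MB/s")
print(f"720p: {hd / 1e6:.0f} MB/s")
print(f"4K carries {uhd / hd:.0f}x the raw data of 720p")
```

Under these assumptions an encoder has to take in roughly 373 MB of raw pixels every second for a single 4K stream, nine times what a 720p stream requires.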

Hardware Acceleration

On client devices, both the CPU and the GPU are used, and GPU-powered encoding and decoding is not limited to 4K streams. Some devices support hardware-accelerated decoding at lower resolutions as well. For instance, Apple devices have used hardware-accelerated H.264 decoding for years, showing that even lower-resolution video can benefit from GPU capabilities. Another example is Intel’s Iris Xe GPUs, which include dedicated AV1 hardware decoding; hardware AV1 encoding arrived with Intel’s later Arc GPUs.

You can learn more about WebRTC audio and video codecs here: Understanding WebRTC Codecs – WebRTC.ventures

Real-Time Transcoding

When you’re on a video call and your colleague is using a different device with different capabilities (e.g., a user dialing in through their phone to a video conference), real-time transcoding becomes necessary. GPUs excel at this task, efficiently converting video streams between different formats and resolutions faster than a CPU-based architecture would.
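As a rough illustration of when transcoding is needed, the sketch below compares a sender's stream with what a receiver can handle. The `StreamFormat` and `ReceiverCaps` types, the `transcode_target` function, and the example devices are all hypothetical and not part of any WebRTC API; a real media server would learn these capabilities through SDP negotiation and may prefer simulcast or SVC over transcoding.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StreamFormat:          # hypothetical type, for illustration only
    codec: str               # e.g. "VP8", "H264", "AV1"
    height: int              # vertical resolution in pixels


@dataclass(frozen=True)
class ReceiverCaps:          # hypothetical type, for illustration only
    codecs: frozenset        # codecs the receiver can decode
    max_height: int          # largest resolution it can handle


def transcode_target(stream: StreamFormat, caps: ReceiverCaps) -> Optional[StreamFormat]:
    """Return None if the stream can be forwarded as-is, else the format to transcode to."""
    codec_ok = stream.codec in caps.codecs
    size_ok = stream.height <= caps.max_height
    if codec_ok and size_ok:
        return None
    # Keep the original codec when possible; otherwise pick one the receiver supports.
    target_codec = stream.codec if codec_ok else sorted(caps.codecs)[0]
    target_height = min(stream.height, caps.max_height)
    return StreamFormat(target_codec, target_height)


sender = StreamFormat("VP8", 1080)
laptop = ReceiverCaps(frozenset({"VP8", "H264"}), 1080)
phone = ReceiverCaps(frozenset({"H264"}), 720)

laptop_plan = transcode_target(sender, laptop)   # None: forward unchanged
phone_plan = transcode_target(sender, phone)     # needs H264 at 720 lines
```

Only the second case requires decoding and re-encoding every frame, which is the step where GPU acceleration pays off.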

GPU Acceleration for AI in WebRTC

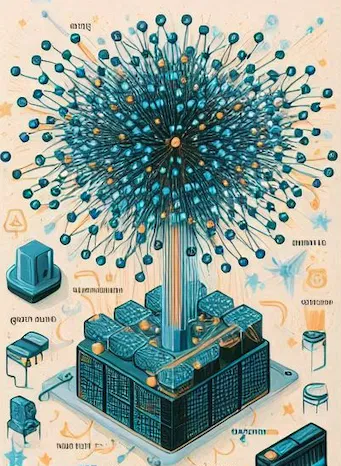

Perhaps the most exciting application of GPUs in WebRTC is running AI models for speech-to-text (STT), text-to-speech (TTS), and Large Language Models. These tasks require heavy matrix operations, also called tensor operations, that benefit from GPU acceleration, reducing latency.

AI models process data as numerical matrices:

- Speech-to-Text (STT): Converts audio waves into spectrograms (2D matrices) before passing them through neural networks that extract phonetic patterns and predict text sequences. A popular open-source model used for this is Whisper.

- Text-to-Speech (TTS): Transforms textual input into character embeddings and later into phoneme embeddings (vectors), which are then processed by deep neural networks to generate spectrograms and waveforms.

- Large Language Models (LLMs): Use embeddings to convert words into vectors (collections of numbers), followed by matrix multiplications to determine relationships between words and generate meaningful responses.
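To make the "audio into a 2D matrix" step from the speech-to-text item concrete, here is a minimal spectrogram sketch. It uses a naive discrete Fourier transform so that it has no dependencies; production pipelines use an FFT, a window function, and usually a mel filter bank. The frame size, hop size, sample rate, and test tone are assumed values for illustration.

```python
import cmath
import math


def spectrogram(samples, frame_size=64, hop=32):
    """Split audio into overlapping frames and take the DFT magnitude of each.

    Returns a list of rows (one per frame); each row holds
    frame_size // 2 + 1 magnitudes, one per frequency bin.
    """
    bins = frame_size // 2 + 1
    rows = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        row = []
        for k in range(bins):
            acc = sum(
                x * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n, x in enumerate(frame)
            )
            row.append(abs(acc))
        rows.append(row)
    return rows


# Synthetic input: a 1 kHz tone sampled at 8 kHz (assumed values).
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE) for n in range(256)]
spec = spectrogram(tone)

# Bin k covers k * SAMPLE_RATE / frame_size Hz, so 1 kHz lands in bin 8.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
```

The result is a matrix with one row per time frame and one column per frequency bin, which is the shape of input a speech model's first layers consume.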

These tasks involve multiplying large matrices. CPUs, with only a handful of cores, can work on a small part of each matrix at a time, which slows inference. GPUs, with thousands of cores and specialized units such as Tensor Cores, run these operations in parallel and cut latency, which is key for real-time applications like live captions and AI assistants.
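The sketch below shows why matrix multiplication suits parallel hardware: every cell of the output is its own dot product and does not depend on any other cell. The toy matrices and the layer size used for the operation count are assumptions chosen for illustration, not figures from a specific model.

```python
def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix (lists of rows).

    Each output cell is an independent dot product, so the cells
    could all be computed at the same time.
    """
    k = len(b)
    n = len(b[0])
    return [
        [sum(row[i] * b[i][j] for i in range(k)) for j in range(n)]
        for row in a
    ]


def multiply_add_count(m, k, n):
    """Number of multiply-add steps in an (m x k) @ (k x n) product."""
    return m * k * n


# Toy "embeddings": 2 tokens with 3 features each, projected to 2 outputs.
tokens = [[1, 0, 2],
          [0, 1, 1]]
weights = [[1, 2],
           [3, 4],
           [5, 6]]
projected = matmul(tokens, weights)

# Assumed layer size for scale: 512 tokens through a 4096 x 4096 weight matrix.
ops = multiply_add_count(512, 4096, 4096)
```

With those assumed dimensions a single layer already needs about 8.6 billion multiply-add steps, and a model repeats this across many layers for every response.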

Optimizing Real-Time Video & Audio with GPU Acceleration

Implementing WebRTC applications requires expertise in both real-time communications and hardware optimization. At WebRTC.ventures, we specialize in developing cutting-edge solutions that leverage the full potential of modern GPU capabilities. Our team can help you implement efficient, scalable solutions for:

- Low-latency video streaming

- AI-powered real-time features like voice bots

- Complex audio and video processing

- Custom WebRTC applications

Contact us to discuss how we can help make your next real-time communication project a success!