Generative AI and large language models (LLMs) have proven to be essential tools for providing personalized and efficient self-service experiences in the contact center industry. Amazon Connect (cloud contact center software), Amazon Lex (conversational AI for building chatbots), Amazon Bedrock (managed access to generative AI foundation models), and AWS Lambda (serverless compute) are powerful components that can be combined to create dynamic conversational bots that enhance customer experience (CX).

In this blog post, we’ll explore how to set up a system that uses these technologies to build a bot that can handle complex customer inquiries.

Prerequisites

- Set up an Amazon Connect instance and configure a phone number and a flow.

- Create an Amazon Lex bot with the customer intents you want to handle.

- Enable access to the desired foundation models in Amazon Bedrock.

Overview

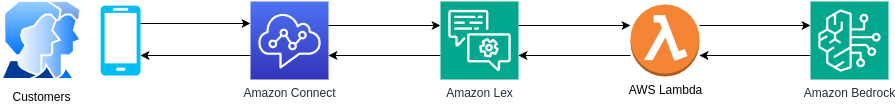

The core of this setup is an AWS Lambda function that runs during the fulfillment step of an Amazon Lex intent. This function processes the customer’s input, sends a request to a large language model (LLM) running in Amazon Bedrock, and then returns the response to Lex, which ultimately delivers it to the customer.

In Amazon Connect, the Amazon Lex bot is associated with a Get Customer Input block.

The diagram above depicts the whole setup.

The code examples in this post are written in Python, but the concepts apply to any other programming language.

Writing an AWS Lambda Function for Interacting with Amazon Bedrock

Let’s start by writing the Lambda function. It will perform the following tasks:

- Read the user’s input and the recognized intent from Amazon Lex.

- Based on the intent, build an appropriate prompt for the LLM.

- Invoke an LLM in Amazon Bedrock, passing the prompt and user’s inquiry.

- Return the response from Amazon Bedrock to Amazon Lex, and instruct the bot to ask the customer for a new intent.
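The four tasks above can be sketched as a single handler before we look at each step in detail. This is a minimal sketch, not the exact code used in this post: the `PROMPTS` table, the `FALLBACK_PROMPT` text, and the `generate` parameter (any callable that takes the system prompt and messages and returns the model’s text) are hypothetical names introduced here so that the Bedrock call can be swapped for a stub when testing locally.

```python
from typing import Callable

# Hypothetical mapping from Lex intent names to system prompts (task 2)
PROMPTS = {
    "AskAboutAccounts": "You're a customer service agent specialized in answering "
                        "frequently asked questions about bank accounts.",
}
# Hypothetical prompt used when an intent has no dedicated entry
FALLBACK_PROMPT = "You're a helpful customer service agent for a bank."

# Shape of the model call: (system, messages) -> generated text
Generate = Callable[[list, list], str]


def handle_lex_event(event: dict, generate: Generate) -> dict:
    # Task 1: read the recognized intent and the customer's utterance
    intent_name = event["sessionState"]["intent"]["name"]
    input_transcript = event["inputTranscript"]

    # Task 2: build a system prompt for that intent
    system = [{"text": PROMPTS.get(intent_name, FALLBACK_PROMPT)}]
    messages = [{"role": "user", "content": [{"text": input_transcript}]}]

    # Task 3: ask the LLM for an answer
    answer = generate(system, messages)

    # Task 4: hand the answer back to Lex and ask for a new intent
    return {
        "sessionState": {"dialogAction": {"type": "ElicitIntent"}},
        "messages": [{"contentType": "PlainText", "content": answer}],
    }


def bedrock_generate(system: list, messages: list) -> str:
    # Real model call through the Bedrock Converse API (needs AWS credentials)
    import boto3
    client = boto3.client("bedrock-runtime", region_name="us-east-1")
    response = client.converse(
        modelId="anthropic.claude-3-haiku-20240307-v1:0",
        system=system,
        messages=messages,
        inferenceConfig={"maxTokens": 300, "temperature": 0.5},
    )
    return response["output"]["message"]["content"][0]["text"]


def lambda_handler(event, context):
    # Entry point configured in AWS Lambda
    return handle_lex_event(event, bedrock_generate)
```

For a quick local check, call `handle_lex_event` with a trimmed event such as `{"sessionState": {"intent": {"name": "AskAboutAccounts"}}, "inputTranscript": "How do I open an account?"}` and a stub like `lambda system, messages: "stub answer"`. A real Lex V2 event carries many more fields, but these are the only ones the sketch reads. Injecting `generate` keeps Bedrock out of unit tests while `lambda_handler` wires in the real call.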

Amazon Lex passes the customer’s input and the recognized intent to the Lambda function in the event payload. You can find the input under the inputTranscript key and the recognized intent within the sessionState key of the event dictionary, as shown in the code snippet below:

```python
# Inside the Lambda handler, `event` is the payload sent by Amazon Lex
intent_name = event['sessionState']['intent']['name']
input_transcript = event['inputTranscript']
```

Now we need to build an appropriate system prompt for the recognized intent. For example, suppose you are building a bot that answers Frequently Asked Questions (FAQs) for a bank. If a customer asks a question about accounts, you’ll want to build a system prompt that instructs the LLM to provide detailed and accurate information related to bank accounts, such as account types, how to open an account, account features, or balance inquiries.

The code below shows an example of a very basic system prompt that includes some of this information. For a more sophisticated implementation, you’ll want to use a Retrieval-Augmented Generation (RAG) approach or a model that has been fine-tuned specifically for your use case.

```python
if intent_name == "AskAboutAccounts":
    # System prompt with the bank's account FAQ information
    system = [{
        "text": """You're a customer service agent specialized in answering
frequently asked questions for a bank. The following is the general
information about accounts:
* Steps to open an account:
  1. Visit a physical agency
  2. Show the customer's ID
  3. Fill out and sign form A-50
* Accounts are suspended after 90 days of inactivity.
* If suspended, accounts can be reopened by visiting a physical agency.
* Agencies are open from 9am to 5pm, Monday to Friday."""
    }]
# Add a branch with its own system prompt for each other intent you handle
```

In addition to the system prompt, we also need to pass the user’s input to the LLM, along with any messages from previous interactions. The code below shows how to build a message list that contains the input received from Amazon Lex.

```python
messages = [
    # ... previous messages
    {
        "role": "user",
        "content": [{
            "text": input_transcript
        }]
    }
]
```

With all the pieces in place, we can invoke a foundation model in Amazon Bedrock. The example below shows a function that sends a request to Anthropic Claude 3 Haiku using the Converse API.

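```python
import boto3

def invoke_model(system, messages):
    # In production, consider creating the client once, outside the handler
    bedrock_runtime = boto3.client("bedrock-runtime", region_name="us-east-1")

    # Inference parameters shared by the models the Converse API supports
    config = {
        "maxTokens": 300,
        "temperature": 0.5,
        "topP": 0.999
    }

    # Parameters not covered by inferenceConfig go in additionalModelRequestFields
    claude_config = {
        "top_k": 250
    }

    model_id = "anthropic.claude-3-haiku-20240307-v1:0"

    response = bedrock_runtime.converse(
        modelId=model_id,
        messages=messages,
        system=system,
        inferenceConfig=config,
        additionalModelRequestFields=claude_config
    )

    # Full Converse API response; the generated text is in
    # response["output"]["message"]["content"][0]["text"]
    return response
```

Once you have a response from Amazon Bedrock, you return a dictionary that includes both the generated text and the next step for Amazon Lex. In our example, we want Lex to ask the customer for a new intent, so we pass the ElicitIntent dialog action. The format of the response is shown in the code snippet below: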

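```python
# Extract the generated text from the Converse API response
response = invoke_model(system, messages)
bedrock_response = response["output"]["message"]["content"][0]["text"]

return {
    "sessionState": {
        "dialogAction": {
            # Ask Lex to listen for the customer's next intent
            "type": "ElicitIntent"
        }
    },
    "messages": [{
        "contentType": "PlainText",
        "content": bedrock_response
    }]
}
```

Adding a Lambda Function to the Fulfillment Step in Amazon Lex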

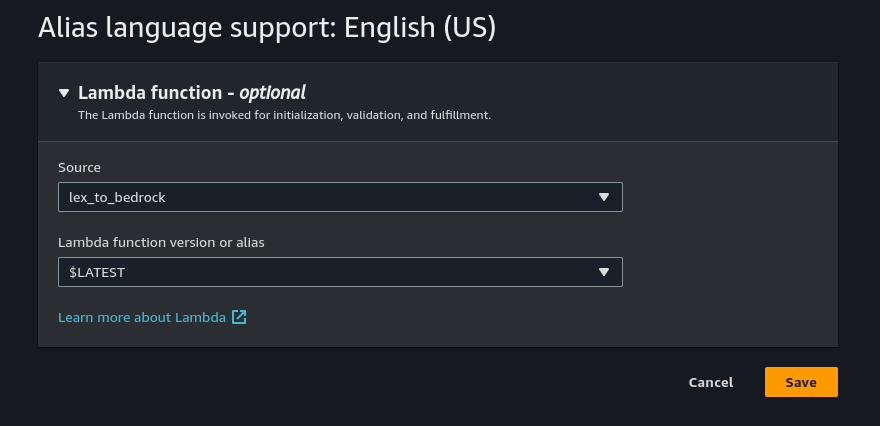

Now that you have a function, you need to make it available to Amazon Lex. To do so, publish a new version of the bot and associate it with an alias. Then, in the alias configuration, select the language in which you want the function to be available and choose your Lambda function.

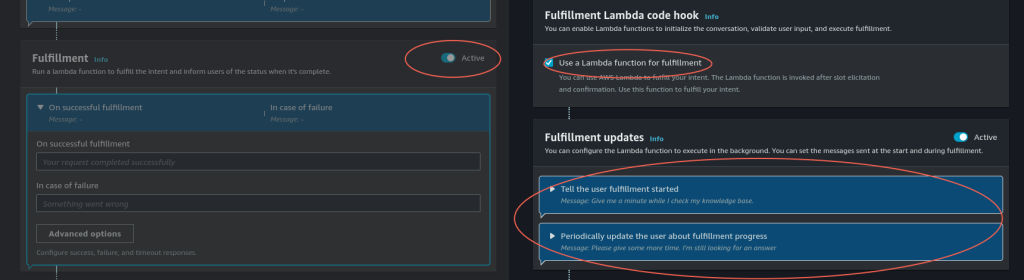
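The console steps above can also be scripted. The following is a minimal sketch using the boto3 `lexv2-models` client, assuming the bot locale has already been built; `BOT_ID`, `LOCALE_ID`, `LAMBDA_ARN`, the alias name `live`, and the helper function names are hypothetical placeholders. It creates a new alias rather than updating an existing one.

```python
import time

# Hypothetical placeholders: replace with your own bot ID, locale, and function ARN
BOT_ID = "YOUR_BOT_ID"
LOCALE_ID = "en_US"
LAMBDA_ARN = "arn:aws:lambda:us-east-1:123456789012:function:bank-faq-fulfillment"


def alias_locale_settings(locale_id: str, lambda_arn: str) -> dict:
    # Attach the fulfillment Lambda to one language (locale) of the alias
    return {
        locale_id: {
            "enabled": True,
            "codeHookSpecification": {
                "lambdaCodeHook": {
                    "lambdaARN": lambda_arn,
                    "codeHookInterfaceVersion": "1.0",
                }
            },
        }
    }


def publish_with_lambda(alias_name: str = "live") -> dict:
    import boto3
    lex = boto3.client("lexv2-models")

    # Snapshot the DRAFT locale into a new numbered bot version
    version = lex.create_bot_version(
        botId=BOT_ID,
        botVersionLocaleSpecification={LOCALE_ID: {"sourceBotVersion": "DRAFT"}},
    )["botVersion"]

    # Versions are created asynchronously; poll until this one is usable
    while True:
        status = lex.describe_bot_version(botId=BOT_ID, botVersion=version)["botStatus"]
        if status == "Available":
            break
        if status == "Failed":
            raise RuntimeError(f"Bot version {version} failed")
        time.sleep(5)

    # Point a new alias at that version, with the Lambda enabled for the locale
    return lex.create_bot_alias(
        botId=BOT_ID,
        botAliasName=alias_name,
        botVersion=version,
        botAliasLocaleSettings=alias_locale_settings(LOCALE_ID, LAMBDA_ARN),
    )
```

When you wire things up outside the console, the Lambda function also needs a resource-based policy that allows the `lexv2.amazonaws.com` service principal to invoke it; the console normally adds this for you.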

Next, for each intent you want the function to fulfill, enable the Fulfillment step and check the Use a Lambda function for fulfillment option. For voice interactions, you can also enable a set of canned responses that the bot tells the customer while the Lambda function is running.

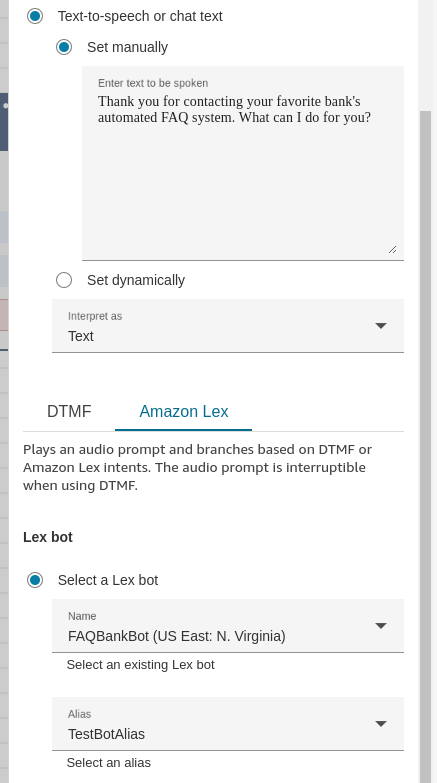

Configure the ‘Get Customer Input’ Block to Use a Lex Bot

The final step is to include the Amazon Lex bot in your Contact Flow. This is done by configuring a Get Customer Input block to use the bot to process your customers’ input.

But first, you need to add the bot to the Amazon Connect instance’s list of enabled bots. To do so, open the instance’s configuration, go to Flows, and under Amazon Lex select the Region, bot, and alias. Then choose Add Amazon Lex Bot. This makes the bot available for use in Contact Flows.
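If you prefer to script this step, the Amazon Connect API offers `AssociateBot`, which accepts a Lex V2 bot alias ARN. The following is a minimal sketch using boto3, assuming the caller has the required IAM permissions; the helper names and all IDs are hypothetical placeholders.

```python
def lex_v2_alias_arn(region: str, account_id: str, bot_id: str, alias_id: str) -> str:
    # Lex V2 alias ARN format: arn:aws:lex:<region>:<account>:bot-alias/<botId>/<aliasId>
    return f"arn:aws:lex:{region}:{account_id}:bot-alias/{bot_id}/{alias_id}"


def add_bot_to_connect(instance_id: str, alias_arn: str, region: str) -> None:
    import boto3
    connect = boto3.client("connect", region_name=region)
    # API counterpart of "Add Amazon Lex Bot" on the instance's Flows page
    connect.associate_bot(InstanceId=instance_id, LexV2Bot={"AliasArn": alias_arn})
```

For example, `add_bot_to_connect("your-instance-id", lex_v2_alias_arn("us-east-1", "123456789012", "YOUR_BOT_ID", "YOUR_ALIAS_ID"), "us-east-1")` would make the alias selectable in your flows, just as the console button does.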

Next, add a Get Customer Input block to your Contact Flow (if you haven’t already), set an initial prompt message, and then select the bot and alias you just added.

Demo Time!

Let’s see our Bank FAQ bot in action in the video below. Note how after the customer asks how to open an account, the bot outlines the steps as defined in the system prompt we saw before.

Enabling More Dynamic Interactions for Your Contact Center

We’ve walked through the process of setting up a dynamic conversational bot in Amazon Connect using Amazon Lex, Amazon Bedrock, and AWS Lambda. By combining the power of these technologies, we can create a system that can handle complex customer inquiries and provide personalized responses.

With this setup, contact centers can provide a more efficient and effective customer experience, which can lead to increased customer satisfaction and loyalty. The key to success lies in defining clear system prompts and intents that accurately capture the nuances of human language.

Ready to take your customer experience to the next level? The team of experts at WebRTC.ventures can help you design and deploy a customized conversational AI system using Amazon Connect or other services.

Also consider Conectara, our cloud contact center solution built on Amazon Connect. Conectara is perfect for those who want a seamless implementation with minimal technical expertise required.

Let’s Make it Live!