In part one of this series, we discussed how automation helps manage the complexity of building a WebRTC solution and allows for repeatable, reliable deployments down the road. Today, we will look at a very simple code example that explores one approach to automating configuration for WebRTC.

As part of this exercise, we will provision two EC2 instances: one running the Janus WebRTC Server and the other running coturn. Configuring a production-ready environment for either Janus or coturn is a complete topic on its own and is out of scope for this exercise. For demonstration purposes, we’ll configure a simple HTTP endpoint for Janus and STUN functionality for coturn.

This information is also available as a WebRTC Tips by WebRTC.ventures video:

Getting the Code Example

The code for this exercise is hosted on GitHub, so you’ll need Git installed on your computer. You can find installation instructions here. If installing Git is not an option, you can always download the code as a zip file.

Once you have Git installed, open a terminal window and follow the instructions below to clone the repository:

git clone https://github.com/agilityfeat/coturn-janus-example.git
cd coturn-janus-example

Then, open the folder using your favorite code editor.

Setting Up Requirements

Before exploring the code, let’s take a moment to set up the requirements. The example code will provision two AWS EC2 instances using Terraform, then install all the required dependencies and apply configurations using Ansible. Therefore, you’ll need an AWS account, plus Terraform and Ansible installed on your computer.

If you don’t have an AWS account yet, you can sign up following these instructions. New accounts are part of the free tier, a program that lets you use some eligible services for free during the first year. This example uses some of those services.

Once you have your account created, move to the next step.

If you already have an AWS account, make sure you have an IAM user with administrator access and create a set of access keys. You’ll use these access keys later when running the example.

Next, you’ll need to install Terraform and Ansible. Click on the links and follow the instructions there, then come back here when you’re ready.
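To confirm everything is in place before running the example, a small preflight check can help. The following is a minimal sketch, not part of the example repository: the function name `missing_requirements` is made up for illustration, and it only checks that the command-line tools used in this post are on your PATH and that the two AWS environment variables (exported in the "Running the Example" section) are set.

```python
import os
import shutil

# Tools and environment variables used by the steps in this post.
REQUIRED_TOOLS = ("git", "terraform", "ansible-playbook")
REQUIRED_ENV = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def missing_requirements(env=None, which=shutil.which):
    """Return a list of human-readable problems; an empty list means all good.

    `env` and `which` are injectable so the check can be tested without
    touching the real environment (hypothetical helper, not from the repo).
    """
    env = os.environ if env is None else env
    problems = [
        f"{tool} not found on PATH"
        for tool in REQUIRED_TOOLS
        if which(tool) is None
    ]
    problems += [
        f"{name} is not set"
        for name in REQUIRED_ENV
        if not env.get(name)
    ]
    return problems


if __name__ == "__main__":
    for problem in missing_requirements():
        print("missing:", problem)
```

If the script prints nothing, the tools are installed and the keys are exported. It does not verify that the keys are valid; only AWS can tell you that.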

Provisioning the Infrastructure

With all dependencies set, let’s look into the code. We’re focusing on the provisioning part first, which you can find under the terraform/ folder. The most important file there is main.tf, which is where all the resources are defined. Let’s explore the code little by little.

At the top of the file is the configuration for AWS and also definitions of existing resources that we will use. To keep things simple, we will use a public AMI and we will rely on the default VPC and subnets.

# terraform/main.tf

# Configuration for AWS
provider "aws" {
  region = "us-east-2"
}

# Definition of the public Ubuntu AMI (owner 099720109477 is Canonical)
data "aws_ami" "ubuntu" {
  most_recent = true

  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-*"]
  }

  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }

  owners = ["099720109477"]
}

# Definition of a VPC. We will pass the id through terraform variables later
data "aws_vpc" "main" {
  id = var.main_vpc
}

# Definition of the subnet. We will pass the id through terraform variables later
data "aws_subnet" "main_public" {
  id = var.main_public_subnet
}

...

Next in the file we can see the two EC2 instances and an Elastic IP address for each one.

# terraform/main.tf

...

# An EC2 instance for Janus
resource "aws_instance" "janus_dev" {
  ami                    = data.aws_ami.ubuntu.id
  instance_type          = "t3.micro"
  subnet_id              = data.aws_subnet.main_public.id
  key_name               = var.main_ssh_keyname
  vpc_security_group_ids = [aws_security_group.janus_dev.id]

  tags = {
    app  = "janus"
    env  = "dev"
    Name = "janus-dev"
  }
}

# An EC2 instance for coturn
resource "aws_instance" "coturn_dev" {
  ami                    = data.aws_ami.ubuntu.id
  instance_type          = "t3.micro"
  subnet_id              = data.aws_subnet.main_public.id
  key_name               = var.main_ssh_keyname
  vpc_security_group_ids = [aws_security_group.coturn_dev.id]

  tags = {
    app  = "coturn"
    env  = "dev"
    Name = "coturn-dev"
  }
}

# Elastic IP address for Janus server
resource "aws_eip" "janus-dev" {
  instance = aws_instance.janus_dev.id
  vpc      = true
}

# Elastic IP address for coturn server
resource "aws_eip" "coturn-dev" {
  instance = aws_instance.coturn_dev.id
  vpc      = true
}

...

Finally, we have the security groups for the EC2 instances. A security group defines the ports on which inbound and outbound traffic is allowed. We are using the most basic traffic requirements, but both Janus and coturn support multiple kinds of services that may require opening other ports, too. Check each project’s documentation to learn more.

# terraform/main.tf

...

# Security group for coturn instance
resource "aws_security_group" "coturn_dev" {
  # rule for ssh access
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # rule for STUN requests through tcp
  ingress {
    from_port   = 3478
    to_port     = 3478
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # rule for STUN requests through udp
  ingress {
    from_port   = 3478
    to_port     = 3478
    protocol    = "udp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # rule for allowing outbound traffic through all ports
  egress {
    protocol    = "-1"
    from_port   = 0
    to_port     = 0
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    env  = "dev"
    app  = "coturn"
    Name = "coturn-dev-security-group"
  }
}

# Security group for Janus instance
resource "aws_security_group" "janus_dev" {
  # rule for websockets
  ingress {
    from_port   = 8188
    to_port     = 8188
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # rule for admin API requests
  ingress {
    from_port   = 7088
    to_port     = 7088
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # rule for http requests
  ingress {
    from_port = 8088
    to_port   = 8088
    protocol  = "tcp"

...

Configuring Janus and Coturn

Now let’s look at the code for installing and configuring Janus and coturn. The code for this can be found in the ansible/ folder.

There are two main entry points here: coturn.yml for coturn and janus.yml for Janus. Both files define the parameters used to log in to the EC2 instances and the tasks that will be performed.

# ansible/coturn.yml
---
- hosts: coturn # the list of servers according to the inventory
  remote_user: ubuntu # the user used to SSH into the instances
  become: yes # whether to perform tasks as superuser
  roles:
    - systemli.coturn # the list of tasks that will be performed for coturn
  vars_files:
    - ../vars/{{ env_name }}.yml # variables to use

# ansible/janus.yml
---
- hosts: janus # the list of servers according to the inventory
  remote_user: ubuntu # the user used to SSH into the instances
  become: yes # whether to perform tasks as superuser
  roles:
    - bitsyai.janus_gateway # the list of tasks that will be performed for janus
  vars_files:
    - ../vars/{{ env_name }}.yml # variables to use

As you can see in the code, the list of tasks is defined by a role. A role is a set of tasks, templates, and other Ansible resources. In this case, we’re using predefined roles from the Ansible Galaxy repository: systemli.coturn installs and configures coturn, and bitsyai.janus_gateway does the same for Janus.

You can download Ansible roles from the Ansible Galaxy repository or create your own from scratch.

Both files also reference a list of hosts based on the inventory file, which can be found in inventory/hosts.ini. There you need to define the servers that you want to configure using Ansible. You will see placeholders for your own servers divided into two groups: janus and coturn.

Each group name is referenced in the entry point files. In a later step you’ll enter your server details here.

# ansible/inventory/hosts.ini
[janus]
<add-ip-here> env_name=dev

[coturn]
<add-ip-here> env_name=dev

Last but not least, we use variables to customize the behavior of the roles. These variables are defined in the vars/dev.yml file.

We start by setting some secrets for coturn and also defining which Janus plugins should be enabled.
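The secrets in the variables file are long random strings. If you want to generate your own values rather than reuse the ones committed to a public repository, the following is a minimal sketch using Python's standard `secrets` module. The helper names and the default length of 40 characters are assumptions made for illustration; the variable names in the usage section are the ones that appear in vars/dev.yml in this post.

```python
import secrets
import string

# Letters and digits only, so the values need no escaping in config files.
ALPHABET = string.ascii_letters + string.digits


def random_secret(length=40):
    """Return a random alphanumeric string from a cryptographically secure source."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def render_secret_vars(names, length=40):
    """Return one YAML line per name, e.g. janus_api_secret: "<random>".

    Values are double-quoted so YAML always reads them as strings.
    """
    return "\n".join(f'{name}: "{random_secret(length)}"' for name in names)


if __name__ == "__main__":
    print(render_secret_vars([
        "coturn_static_auth_secret",
        "janus_api_secret",
        "janus_token_auth_secret",
        "janus_admin_secret",
    ]))
```

You could paste the printed lines into your own copy of the variables file in place of the published values.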

# ansible/vars/dev.yml
---
# coturn current vars
coturn_static_auth_secret: cw0XNmO3sgT0P0tv0ywa9ALJwNptlpvK
coturn_use_tls: false

# Janus build config
janus_build_extras:
  audiobridge: false
  mqtt: false
  nanomsg: false
  rabbitmq: false
  recordplay: false
  sip: false
  systemd: true
  websockets: true

...

Next, we set paths and build versions for Janus and its dependencies:

# ansible/vars/dev.yml

...

# Janus config
janus_user: janus
janus_group: janus
janus_workspace_dir: "{{ ansible_env.HOME }}/workspace"
janus_install_dir: /opt/janus
janus_conf_dir: "{{ janus_install_dir }}/etc/janus"
janus_log_file: /var/log/janus/janus.log
janus_lib_prefix: /usr/local
janus_pid_file: /var/run/janus/janus.pid

# libwebsocket build
janus_libwebsockets_build_dir: "{{ janus_workspace_dir }}/libwebsockets"
janus_libwebsockets_repo: https://github.com/warmcat/libwebsockets.git
janus_libwebsockets_version: v4.1.2

# libnice build
janus_libnice_build_dir: "{{ janus_workspace_dir }}/libnice"
janus_libnice_repo: https://github.com/libnice/libnice
janus_libnice_version: "0.1.18"

# usrsctp build
janus_usrsctp_build_dir: "{{ janus_workspace_dir }}/usrsctp"
janus_usrsctp_repo: https://github.com/sctplab/usrsctp
janus_usrsctp_version: 1d204411493d4a5b9ec66fa9aed958320d7fb2c9

# libsrtp build
janus_libsrtp_version: "2.4.2"
janus_libsrtp_tarball: "https://github.com/cisco/libsrtp/archive/v{{ janus_libsrtp_version }}.tar.gz"
janus_libsrtp_build_dir: "{{ janus_workspace_dir }}/libsrtp-{{ janus_libsrtp_version }}"

# Janus build
janus_build_dir: "{{ janus_workspace_dir }}/janus-gateway"
janus_repo: https://github.com/meetecho/janus-gateway.git
janus_version: v1.0.2
janus_upgrade_available: "{{ janus_installed_versions.janus == None or janus_installed_versions.janus < janus_version }}"

...

Finally, we set some secrets for Janus and set values for the configuration files.

# ansible/vars/dev.yml

...

# Secrets and variables
janus_api_secret: WKOsuSgrQPjcmcvBIZhbESOVYjeTnlkCsdCxOJsqeN
janus_token_auth_secret: DFYjNlyoutNJoQJrIHIRVipEHmdRAsliEXoYTbRojL
janus_admin_secret: ihDwQcDzZlhHESBUeHJltiDhXDwaLpsCTifGrHDFjE

janus_conf_var:
  janus:
    plugins_folder: "{{ janus_install_dir }}/lib/janus/plugins"
    transports_folder: "{{ janus_install_dir }}/lib/janus/transports"
    events_folder: "{{ janus_install_dir }}/lib/janus/events"
    loggers_folder: "{{ janus_install_dir }}/lib/janus/loggers"
    log_to_stdout: true
    debug_level: 4
    daemonize: false
    pid_file: "/var/run/janus.pid"
    api_secret: "{{ janus_api_secret }}"
    token_auth: true
    token_auth_secret: "{{ janus_token_auth_secret }}"
    admin_secret: "{{ janus_admin_secret }}"
    server_name: "{{ inventory_hostname }}"
    session_timeout: 60
    candidates_timeout: 45
    reclaim_session_timeout: 0
    no_webrtc_encryption: false
    ignore_unreachable_ice_server: true
    enable_nat: true
    stun_server_fqdn: stun.l.google.com
    stun_server_port: 19302
    stun_server_full_trickle: true
  janus.transport.http:
    admin_base_path: "/admin"
    admin_http_port: 7088
    admin_https_port: 7889
    admin_http: true
    admin_https: false
    base_path: "/janus"
    https: false
    https_port: 8089
    http: true
    http_port: 8088

janus_conf_template:
  janus.eventhandler.gelfevh.jcfg: janus.eventhandler.gelfevh.jcfg
  janus.eventhandler.sampleevh.jcfg: janus.eventhandler.sampleevh.jcfg
  janus.eventhandler.wsevh.jcfg: janus.eventhandler.wsevh.jcfg
  janus.jcfg: janus.jcfg
  janus.plugin.audiobridge.jcfg: janus.plugin.audiobridge.jcfg
  janus.plugin.echotest.jcfg: janus.plugin.echotest.jcfg
  janus.plugin.nosip.jcfg: janus.plugin.nosip.jcfg
  janus.plugin.recordplay.jcfg: janus.plugin.recordplay.jcfg
  janus.plugin.sip.jcfg: janus.plugin.sip.jcfg
  janus.plugin.streaming.jcfg: janus.plugin.streaming.jcfg
  janus.plugin.textroom.jcfg: janus.plugin.textroom.jcfg
  janus.plugin.videocall.jcfg: janus.plugin.videocall.jcfg
  janus.plugin.voicemail.jcfg: janus.plugin.voicemail.jcfg
  janus.transport.http.jcfg: janus.transport.http.jcfg
  janus.transport.pfunix.jcfg: janus.transport.pfunix.jcfg
  janus.transport.websockets.jcfg: janus.transport.websockets.jcfg

Running the Example

With all the pieces in place, it’s time to run the scripts and see the magic of automation in action. Before that, let’s customize the code a little bit so it suits your environment.

First of all, open a terminal window and navigate to the folder of the project, then export your AWS access keys as follows:

export AWS_ACCESS_KEY_ID=yourkeyhere
export AWS_SECRET_ACCESS_KEY=yoursecrethere

Then, navigate to the terraform folder and initialize Terraform by running:

cd terraform # make sure you’re in the terraform folder
terraform init

Next, open the terraform/main.tf file and set the right region:

# terraform/main.tf
provider "aws" {
  region = "change-this"
}

...

And finally, create the terraform/terraform.tfvars file and add the required variables as follows:

main_vpc = "vpc-abc123"
main_public_subnet = "subnet-123abc"
main_ssh_keyname = "your-key-pair-name"

Now, you can run the apply command from the terraform folder and review the proposed changes. When you’re comfortable with them, accept the changes:

cd terraform # navigate into the terraform folder if you’re not there already
terraform apply

Wait a couple of minutes and take note of the IP addresses assigned to the newly provisioned servers. Next, add those addresses to the Ansible inventory we mentioned earlier by opening ansible/inventory/hosts.ini and replacing the placeholders with the actual values as follows:

# ansible/inventory/hosts.ini
[janus]
<add-ip-here> env_name=dev

[coturn]
<add-ip-here> env_name=dev

With the inventory configured, you can now configure the servers. First, run the command for Janus from inside the ansible folder (make sure you add your SSH private key to the SSH agent first):

ssh-add path/to/key.pem
cd ansible # navigate into the ansible folder if you’re not there already

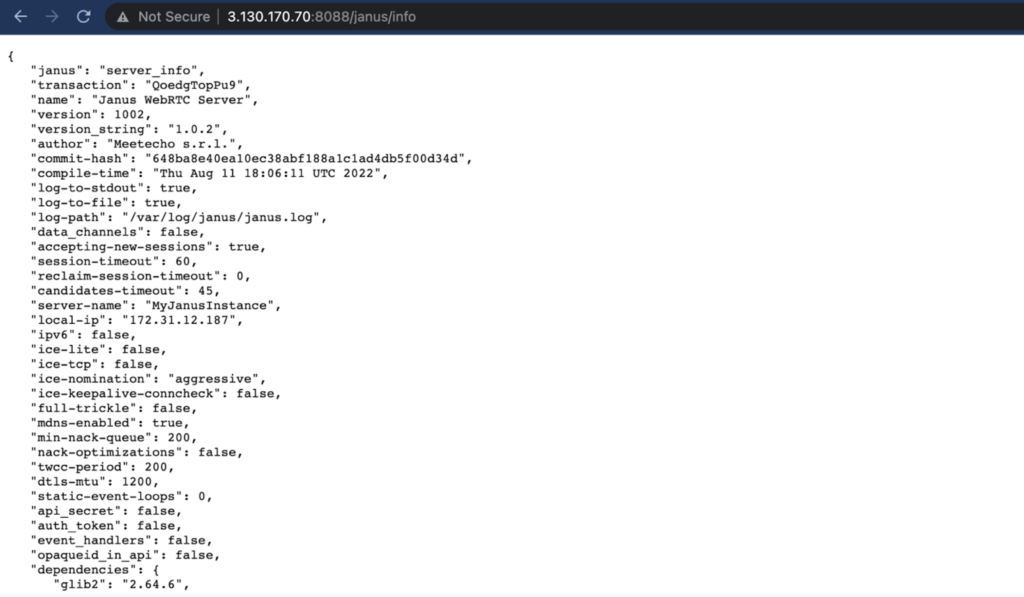

ansible-playbook -i inventory/hosts.ini janus.yml

After a couple of minutes, Ansible will finish installing and configuring Janus on the EC2 instance as defined in the variables file. When it finishes, use a browser to navigate to the IP address on port 8088 to check some information about your newly configured Janus instance.
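The same check can be scripted. The sketch below assumes the server information is available at `/janus/info`, which follows from the `base_path` of `/janus` and `http_port` of 8088 set in the variables file; adjust the path if your configuration differs. The function names are illustrative and not part of the example repository.

```python
import json
import urllib.request


def janus_info_url(host, port=8088, base_path="/janus"):
    """Build the URL of the Janus info endpoint for the given host."""
    return f"http://{host}:{port}{base_path.rstrip('/')}/info"


def summarize_info(info):
    """Return '<name> <version>' from a parsed info reply, or raise if it looks wrong."""
    if info.get("janus") != "server_info":
        raise ValueError(f"unexpected reply type: {info.get('janus')!r}")
    return f"{info.get('name', 'unknown')} {info.get('version_string', '?')}"


def fetch_janus_info(host, port=8088, timeout=5.0):
    """Request the info endpoint over plain HTTP and return the parsed JSON."""
    with urllib.request.urlopen(janus_info_url(host, port), timeout=timeout) as resp:
        return json.load(resp)
```

To use it, call `summarize_info(fetch_janus_info("<your-janus-ip>"))` with the Elastic IP address you noted earlier. A timeout or connection error usually points to the security group or to Janus not running yet.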

The last step is to execute the coturn playbook. As with Janus, make sure you’re in the ansible folder and run the following command:

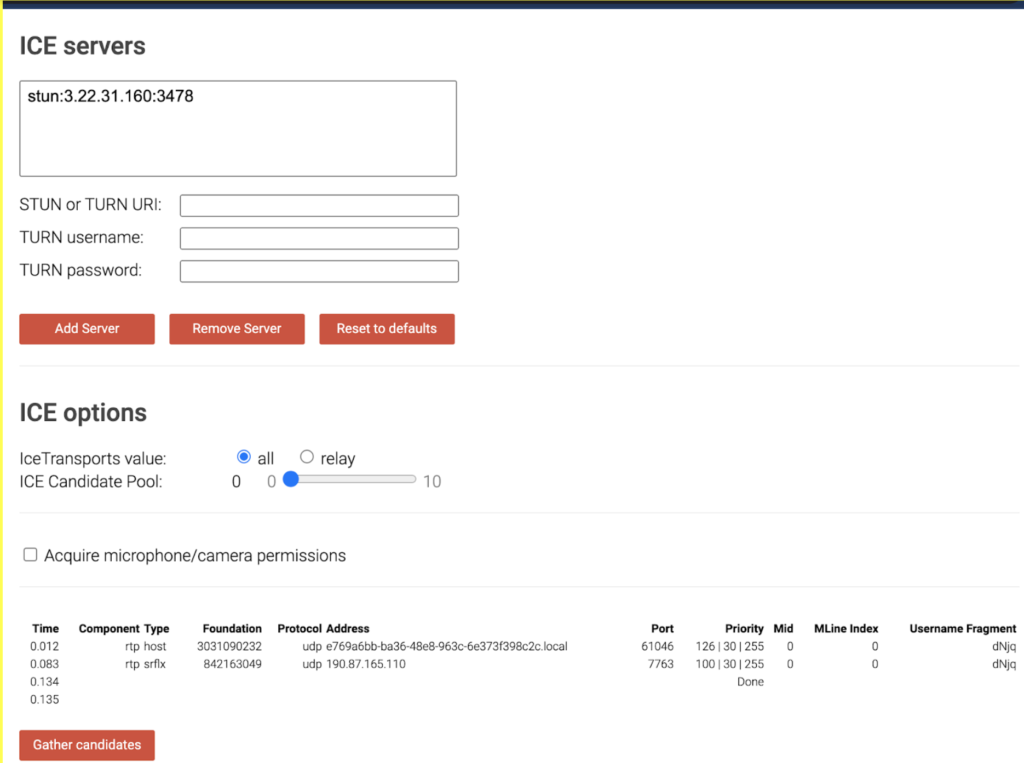

ansible-playbook -i inventory/hosts.ini coturn.yml

Now, we just need to wait until Ansible finishes applying all the required configuration. Once it’s done, you can check the STUN functionality using the Trickle ICE WebRTC sample. After entering your server details, you should be able to see something like this:

If everything went well, you not only have a set of media and STUN servers up and running, but also a set of scripts ready that you can reuse to provision additional instances and that can be added to version control to manage changes and new features.
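For a quick check of the STUN endpoint without a browser, you can send a STUN Binding Request over UDP yourself. The following is a minimal sketch under stated assumptions: it uses only the Python standard library, handles IPv4 only, and reads just the XOR-MAPPED-ADDRESS attribute from the reply. The function names are made up for illustration; the port 3478 is the one opened in the coturn security group above.

```python
import os
import socket
import struct

MAGIC_COOKIE = 0x2112A442      # fixed value defined by the STUN protocol
BINDING_REQUEST = 0x0001
BINDING_SUCCESS = 0x0101
XOR_MAPPED_ADDRESS = 0x0020


def build_binding_request():
    """Return (packet, transaction_id) for a Binding Request with no attributes."""
    txid = os.urandom(12)
    header = struct.pack("!HHI", BINDING_REQUEST, 0, MAGIC_COOKIE)
    return header + txid, txid


def parse_binding_response(data, txid):
    """Return (ip, port) from XOR-MAPPED-ADDRESS, or None if the attribute is absent."""
    if len(data) < 20:
        raise ValueError("response shorter than a STUN header")
    msg_type, msg_len, cookie = struct.unpack("!HHI", data[:8])
    if msg_type != BINDING_SUCCESS or cookie != MAGIC_COOKIE or data[8:20] != txid:
        raise ValueError("not a matching Binding Success response")
    offset, end = 20, min(len(data), 20 + msg_len)
    while offset + 4 <= end:
        attr_type, attr_len = struct.unpack("!HH", data[offset:offset + 4])
        value = data[offset + 4:offset + 4 + attr_len]
        # Family 0x01 is IPv4; port and address are XORed with the magic cookie.
        if attr_type == XOR_MAPPED_ADDRESS and attr_len >= 8 and value[1] == 0x01:
            port = struct.unpack("!H", value[2:4])[0] ^ (MAGIC_COOKIE >> 16)
            addr = struct.unpack("!I", value[4:8])[0] ^ MAGIC_COOKIE
            return socket.inet_ntoa(struct.pack("!I", addr)), port
        offset += 4 + attr_len + (-attr_len % 4)  # attributes are padded to 4 bytes
    return None


def stun_check(host, port=3478, timeout=3.0):
    """Send one Binding Request over UDP and return the address the server saw."""
    request, txid = build_binding_request()
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(request, (host, port))
        data, _ = sock.recvfrom(2048)
    return parse_binding_response(data, txid)
```

Calling `stun_check("<your-coturn-ip>")` should return your own public IP address and source port as seen by the server. A `socket.timeout` suggests the UDP rule in the security group or the coturn service needs another look.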

Before closing this post, let’s take a moment to clean up the resources to prevent unwanted charges in your AWS account. To do so, navigate to the terraform folder in the terminal window and run Terraform’s destroy command:

cd terraform # make sure you’re inside the terraform folder
terraform destroy

Conclusion

In this post, you saw an approach to automate configuration for WebRTC, by provisioning a set of Media and STUN servers using Terraform and Ansible. Hopefully, this example will serve as a base for you to build your own set of scripts that help you to build your WebRTC solutions.
