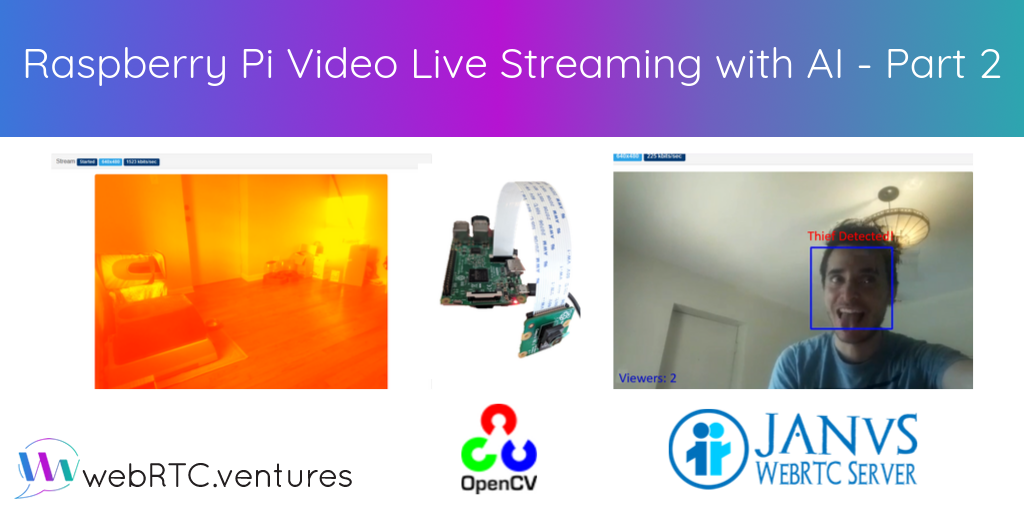

Last month, we streamed video from a Raspberry Pi, applied a filter to it on the server, and streamed it live to dozens of users. In the second part of this project, we’ll go a little deeper and do something more complex with OpenCV: implement face detection on the server.

If you want to jump directly to the code, you can find the demo project here. After installing the modules, run node videoFaceDetection from /video-ai-service and you should see a demo video with face detection applied.

Face detection

For face detection, we can start from the demo code in opencv4nodejs here. We just need to make some modifications to reduce latency and to send and receive UDP packets.
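One common way to cut per-frame cost, and so latency, is to run the classifier only on every Nth frame and reuse the last detections in between. The sketch below illustrates that idea only; it is not necessarily what the demo project does, and the helper name createThrottledDetector and its interval parameter are made up for this example.

```javascript
// Hypothetical helper, not part of opencv4nodejs or the demo project.
// Wraps a detection function so it only runs on every `interval`-th frame;
// frames in between reuse the most recent result.
function createThrottledDetector(detect, interval) {
  if (!Number.isInteger(interval) || interval < 1) {
    throw new RangeError('interval must be a positive integer');
  }
  let frameCount = 0;
  let lastResult = [];
  return function detectThrottled(frame) {
    if (frameCount % interval === 0) {
      lastResult = detect(frame);
    }
    frameCount += 1;
    return lastResult;
  };
}
```

With an interval of 3, detection runs on frames 0, 3, 6, and so on. The trade-off is that the rectangles can lag behind a fast-moving face by up to two frames.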

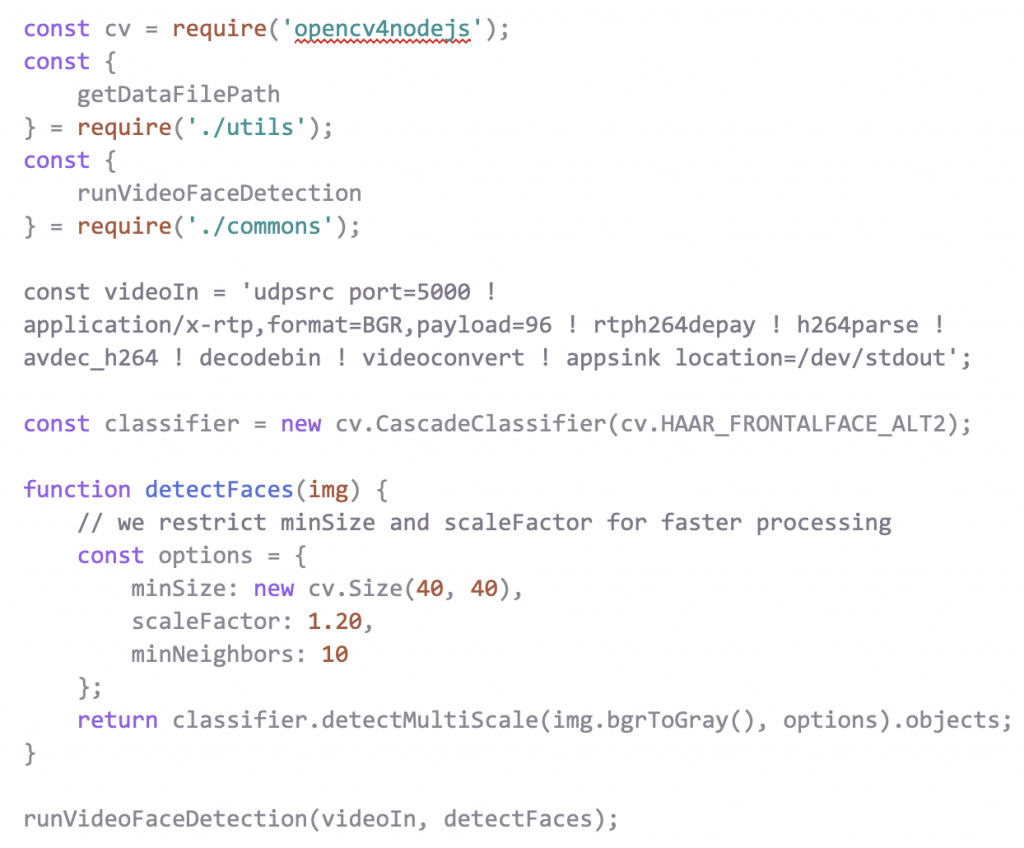

Below is videoFaceDetection.js, where we declare the CascadeClassifier and the other main variables and functions used in runVideoFaceDetection():

In runVideoFaceDetection, each frame of the video stream is run through the CascadeClassifier, and a rectangle is drawn around each detected face. The modified stream is then sent over UDP to Janus and delivered to web clients using WebRTC.
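As a rough illustration of the detect-and-draw step, here is a minimal sketch using opencv4nodejs. The function names markFaces and processVideo are illustrative and not taken from the demo project, and the UDP output to Janus is left out; the onFrame callback stands in for whatever sends the frame onward.

```javascript
// Sketch only: helper names are illustrative, not from the demo project.

// Detect faces in a BGR frame and draw a rectangle around each one.
// `frame` is expected to behave like an opencv4nodejs Mat and `classifier`
// like a cv.CascadeClassifier. Returns the number of faces found.
function markFaces(frame, classifier, color, thickness = 2) {
  // Haar cascades work on single-channel images, so convert to grayscale first.
  const gray = frame.bgrToGray();
  const { objects } = classifier.detectMultiScale(gray);
  for (const rect of objects) {
    frame.drawRectangle(rect, color, thickness);
  }
  return objects.length;
}

// Read frames from `source` (a file path or device index), mark faces,
// and pass each annotated frame to `onFrame`.
// opencv4nodejs is required lazily so this file loads without it installed.
function processVideo(source, onFrame) {
  const cv = require('opencv4nodejs');
  const classifier = new cv.CascadeClassifier(cv.HAAR_FRONTALFACE_ALT2);
  const capture = new cv.VideoCapture(source);
  const blue = new cv.Vec(255, 0, 0); // OpenCV colors are in BGR order
  let frame = capture.read();
  while (!frame.empty) {
    markFaces(frame, classifier, blue);
    onFrame(frame);
    frame = capture.read();
  }
  capture.release();
}
```

Note that this loop reads frames synchronously and blocks the Node.js event loop until the source runs out, which is acceptable for a quick local test but probably not for a live service.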

To test it out with a prerecorded video, you can change videoIn to:

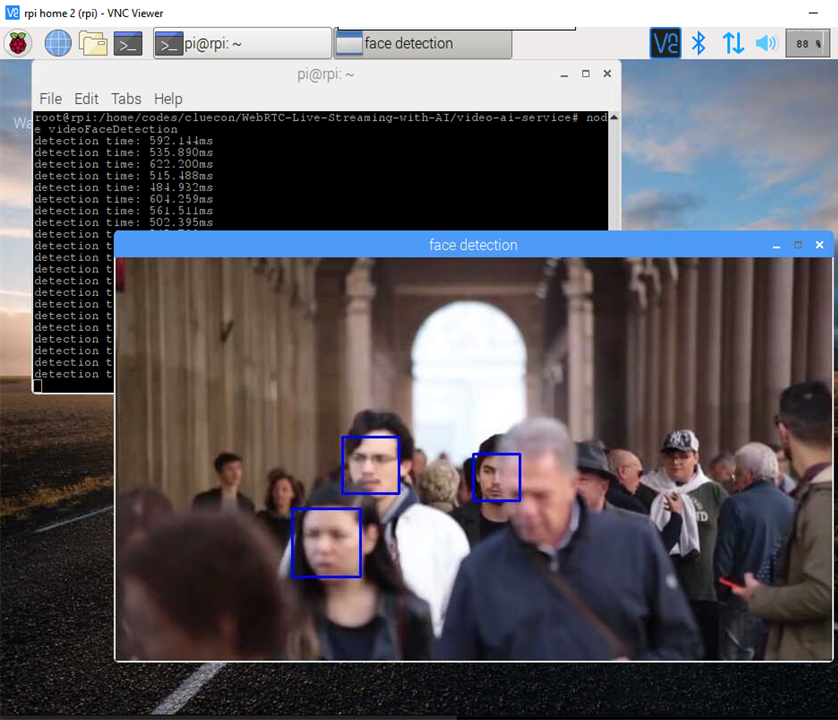

Then you’ll be able to run node videoFaceDetection and see something like this on your local machine:

If it works with the demo video, change it back to use the UDP incoming stream from the Raspberry Pi.

If you’re still having problems and can’t see the stream in the web client, you can try node index.js to generate a test source media stream and verify that the media stream is being received by Janus.

What do we build next?

As you can see, once you have an ML live streaming solution running, adding other detection operations for a specific use case is straightforward. There are many interesting examples that we’ll play around with in the future, such as hand gesture recognition, object detection, and even pose detection.

Contact us to build the next generation of video applications!