During a recent onsite gathering of our team at the WebRTC.ventures Panama QA office, we did a deep dive into Prompt Engineering with John Berryman, an early engineer at GitHub Copilot and author of Prompt Engineering for LLMs: The Art and Science of Building Large Language Model-Based Applications.

While we mainly focused on text-based scenarios (in LLMs, text has been king so far), we also explored specific considerations for voice and audio/video-driven applications leveraging WebRTC. Below are some concise insights combining foundational knowledge with practical guidance for developing voice AI applications.

Tackling Latency

Prioritize Short Responses Over Short Prompts for Faster Conversations

In real-time WebRTC applications, minimizing latency is critical. LLMs process the input prompt in parallel but generate output one token at a time, so the length of the answer, more than the length of the prompt, drives response time. The key to reducing latency is therefore to prompt the model for concise answers rather than focusing solely on shortening input prompts. For voice AI systems, this approach also makes interactions feel more natural and conversational. Engineering teams should design prompts that encourage brief, efficient responses while still providing sufficient context in the input.
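To see why output length dominates, here is a back-of-the-envelope model in Python. It is a minimal sketch that assumes generation time is simply prompt processing (prefill) plus token-by-token generation (decode); the throughput figures are hypothetical placeholders, not measurements of any particular model, and network, speech-to-text, and text-to-speech time are ignored.

```python
def estimate_generation_seconds(prompt_tokens: int, output_tokens: int,
                                prefill_tokens_per_s: float,
                                decode_tokens_per_s: float) -> float:
    """Rough LLM generation time: the prompt is processed in parallel
    (fast prefill), the answer is produced one token at a time (slow decode)."""
    prefill_s = prompt_tokens / prefill_tokens_per_s
    decode_s = output_tokens / decode_tokens_per_s
    return prefill_s + decode_s


# Hypothetical throughput figures, for illustration only.
PREFILL_TPS = 5_000.0
DECODE_TPS = 50.0

baseline = estimate_generation_seconds(1_000, 200, PREFILL_TPS, DECODE_TPS)
half_prompt = estimate_generation_seconds(500, 200, PREFILL_TPS, DECODE_TPS)
half_answer = estimate_generation_seconds(1_000, 100, PREFILL_TPS, DECODE_TPS)

print(f"baseline:    {baseline:.1f} s")     # 4.2 s
print(f"half prompt: {half_prompt:.1f} s")  # 4.1 s
print(f"half answer: {half_answer:.1f} s")  # 2.2 s
```

Under these assumptions, halving the prompt saves about 0.1 s, while halving the answer saves about 2 s.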

Prioritizing Information for Relevance

The order of information in a prompt significantly impacts the quality of the model’s output. By structuring prompts with the most critical details first, engineers can ensure that the model immediately focuses on the essential context. This is particularly important in voice applications where quick comprehension leads to better user experiences.

Note: To cut costs, it also helps to place the static part of the prompt (system instructions, reference material) at the beginning of the call. OpenAI caches repeated prompt prefixes, so that reused portion is processed faster and billed at a lower rate.
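A minimal Python sketch of a cache-friendly message layout: caching can only reuse an exact, repeated prefix, so the unchanging instructions and reference text come first and the per-turn content comes last. The prompt text and the `build_messages` helper are hypothetical; the list of role/content dictionaries mirrors the common chat message format, but no API call is made here.

```python
from typing import Dict, List

Message = Dict[str, str]

# Static content, identical on every call (hypothetical example text).
SYSTEM_PROMPT = (
    "You are a voice assistant for a travel agency. "
    "Answer in one or two short sentences."
)
REFERENCE_TEXT = (
    "Baggage policy: one carry-on bag per passenger. "
    "Changes are free up to 24 hours before departure."
)


def build_messages(history: List[Message], user_turn: str) -> List[Message]:
    """Put the unchanging prefix first and the per-call content last,
    so consecutive calls share the longest possible identical prefix."""
    static_prefix: List[Message] = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "system", "content": REFERENCE_TEXT},
    ]
    return static_prefix + history + [{"role": "user", "content": user_turn}]


first = build_messages([], "Can I bring two bags?")
second = build_messages(
    [{"role": "user", "content": "Can I bring two bags?"},
     {"role": "assistant", "content": "Only one carry-on, sorry."}],
    "Then can I change my flight?",
)
```

Because the history is appended rather than rewritten, each call also repeats the previous call's messages as part of its prefix.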

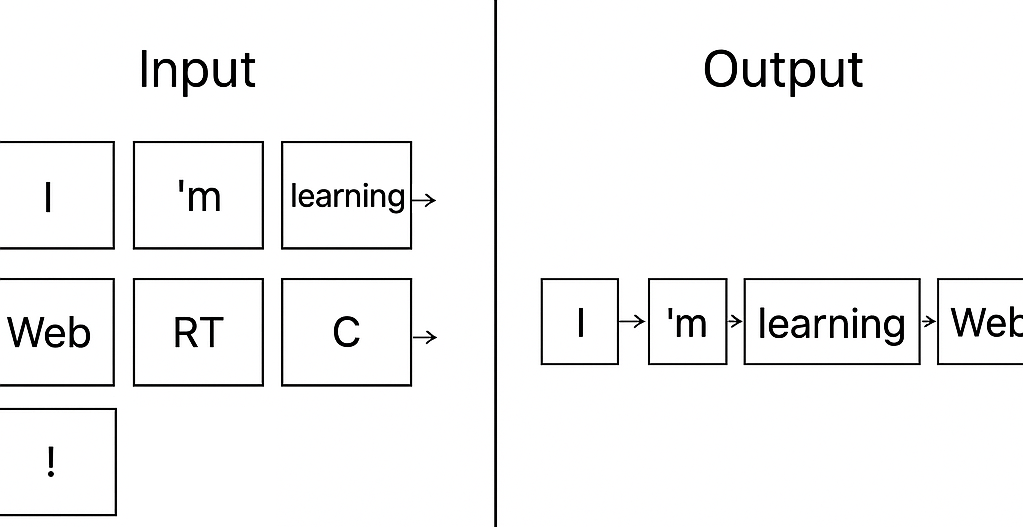

Leveraging Special Tokens for Voice Context

Custom-defined special tokens (plain-text tags whose meaning is explained to the model in its instructions) tailored for voice interactions can noticeably improve LLM performance. These tokens provide explicit instructions, clarify speaker intent, and define task parameters, making interactions more efficient. For example:

<speaker:user> "I'd like to book a flight to Miami tomorrow evening."

<context:intent=travel_booking; urgency=high>

<task:quick_response; required_info=[departure_city, flight_time_options]>

<chain-of-thought:false>

<response_format:concise; max_tokens=50>

<speaker:agent>

ChatGPT already inserts some special tokens automatically (for example, to mark where each speaker’s message begins), and users never see them. Explicit tags like the ones above can still be worth trying in some situations.
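These tags are ordinary text rather than entries in the model’s vocabulary, so they only work if the system prompt explains them. As a minimal sketch, a hypothetical helper such as `build_tagged_turn` can render the tag layout above from structured fields, which keeps the format consistent from turn to turn.

```python
from typing import List


def build_tagged_turn(utterance: str, intent: str, urgency: str,
                      required_info: List[str], max_tokens: int,
                      chain_of_thought: bool = False) -> str:
    """Render one user turn with the custom tag convention shown above.

    The tag names mirror the example; the model only understands them if
    the system prompt defines them, since the tokenizer sees plain text.
    """
    fields = ", ".join(required_info)
    lines = [
        f'<speaker:user> "{utterance}"',
        f"<context:intent={intent}; urgency={urgency}>",
        f"<task:quick_response; required_info=[{fields}]>",
        f"<chain-of-thought:{str(chain_of_thought).lower()}>",
        f"<response_format:concise; max_tokens={max_tokens}>",
        "<speaker:agent>",
    ]
    return "\n".join(lines)


turn = build_tagged_turn(
    "I'd like to book a flight to Miami tomorrow evening.",
    intent="travel_booking",
    urgency="high",
    required_info=["departure_city", "flight_time_options"],
    max_tokens=50,
)
print(turn)
```

The tag only asks for brevity; setting the API’s own output-length limit as well gives a hard cap.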

Balancing Chain-of-Thought and Responsiveness

Voice interactions via WebRTC demand immediate, clear responses. While the “chain-of-thought” approach enhances reasoning depth, it adds output tokens and therefore delay. Strategically balance concise, direct prompts with deeper reasoning: reserve chain-of-thought for requests that genuinely need multi-step reasoning, so quality does not come at the expense of speed.
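One way to use chain-of-thought without speaking it aloud, sketched here under the assumption that the system prompt asks the model to wrap its reasoning in a hypothetical `<reasoning>` tag: strip that block before the text reaches text-to-speech. This keeps the reasoning out of the audio but does not remove its latency, because the reasoning tokens still have to be generated.

```python
import re

# Hypothetical convention: reasoning goes inside <reasoning>...</reasoning>,
# the answer to be spoken goes outside it.
_REASONING_BLOCK = re.compile(r"<reasoning>.*?</reasoning>", re.DOTALL)


def spoken_text(model_output: str) -> str:
    """Drop hidden reasoning and collapse whitespace so only the final
    answer is sent to TTS."""
    without_reasoning = _REASONING_BLOCK.sub("", model_output)
    return " ".join(without_reasoning.split())


reply = ("<reasoning>User wants Miami.\nCheck the evening flights.</reasoning> "
         "The 7 pm flight still has seats.")
print(spoken_text(reply))  # The 7 pm flight still has seats.
```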

Optimizing Retrieval-Augmented Generation (RAG)

Hybrid Search for Optimal Performance

Leveraging a hybrid approach that combines the efficiency and exact matching of lexical (keyword) search with the flexibility of semantic search can deliver strong results. In some situations, this can improve both performance and user-friendliness for WebRTC voice applications that require real-time data retrieval. Of course, if queries rarely hinge on specific words or phrases (such as names or product codes), traditional semantic-only RAG might be the better choice.
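The post does not prescribe a way to merge the two result sets; reciprocal rank fusion (RRF) is one common, simple option, shown here as a minimal Python sketch. It assumes you already have two ranked lists of document IDs, for example from a BM25 keyword index and from vector similarity; the IDs are made up.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1; k=60 is the constant commonly used for RRF.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


lexical = ["MIA-fare-rules", "baggage-policy", "miami-flights"]
semantic = ["miami-flights", "MIA-fare-rules", "travel-tips"]
print(reciprocal_rank_fusion([lexical, semantic]))
# ['MIA-fare-rules', 'miami-flights', 'baggage-policy', 'travel-tips']
```

RRF uses only ranks, so the two retrievers’ raw scores never need to be put on the same scale.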

Bypassing RAG for Smaller Datasets

If your datasets fit comfortably within the LLM’s context window, omitting RAG altogether simplifies interactions and reduces processing overhead, which benefits real-time WebRTC responsiveness. At WebRTC.ventures, this is our preferred approach whenever possible.
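A quick way to decide whether RAG can be skipped is to compare the size of the knowledge base with the context window, minus room for instructions, history, and the answer. This minimal sketch assumes the rough rule of thumb of about four characters per token for English text; use the model’s real tokenizer for anything important. The window sizes in the usage lines are illustrative.

```python
from typing import List


def approx_tokens(text: str) -> int:
    """Very rough token estimate: about 4 characters per token (English)."""
    return (len(text) + 3) // 4


def can_skip_rag(documents: List[str], context_window: int,
                 reserved_tokens: int) -> bool:
    """True if every document fits in the prompt while leaving
    `reserved_tokens` free for instructions, history, and the reply."""
    total = sum(approx_tokens(doc) for doc in documents)
    return total <= context_window - reserved_tokens


knowledge_base = ["x" * 4_000] * 10  # ten synthetic ~1,000-token documents
print(can_skip_rag(knowledge_base, context_window=128_000, reserved_tokens=8_000))  # True
print(can_skip_rag(knowledge_base, context_window=16_000, reserved_tokens=8_000))   # False
```

Even when everything fits, a larger prompt increases per-call cost and prefill time, which is one more reason to keep that static material at the start of the prompt where it can be cached.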

More Natural Conversations: Interruptions, Turn Detection and Improved User Experiences

Beyond Basic VAD

While Voice Activity Detection (VAD) remains foundational, turn-detection systems in 2025 increasingly combine it with:

- Semantic VAD: OpenAI’s latest models analyze speech content and prosody to predict turn endpoints, reducing false positives from filler words like “uhm”.

- LLM-native detection: Frameworks like LiveKit and Pipecat by Daily now integrate turn-taking directly into LLM inference, enabling bidirectional audio streaming.

- Transformer-based hybrids: Models like Voice Activity Projection (VAP) use multi-layer transformers to anticipate turn transitions through real-time acoustic and semantic analysis.
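The trained models above are far more capable than any hand-written rule, but a toy heuristic shows the basic idea of layering a content signal on top of a plain silence timer. The filler-word list and thresholds are hypothetical, and this is not how OpenAI’s semantic VAD or VAP models work internally.

```python
# Hypothetical filler words and thresholds, for illustration only.
FILLERS = {"uh", "uhm", "um", "so", "and", "but"}
BASE_SILENCE_MS = 500       # normal pause before the agent takes the turn
EXTENDED_SILENCE_MS = 1200  # wait longer when the user seems mid-thought


def is_end_of_turn(silence_ms: int, partial_transcript: str) -> bool:
    """Combine a basic VAD silence timer with a simple content check."""
    text = partial_transcript.strip()
    words = text.lower().rstrip(".,!?").split()
    if not words:
        return False  # silence without speech is not a turn to answer
    if words[-1] in FILLERS or text.endswith(","):
        # The user trailed off on a filler word or comma: give them more time.
        return silence_ms >= EXTENDED_SILENCE_MS
    return silence_ms >= BASE_SILENCE_MS


print(is_end_of_turn(600, "Book the 7 pm flight."))      # True
print(is_end_of_turn(600, "I want to go to Miami, um"))  # False
```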

Agentic AI for Complex Tasks

While AGI remains future-oriented, tools like CrewAI already enable sophisticated multi-step, tool-calling interactions involving API calls, searches, and dynamic response generation. This capability significantly enriches complex WebRTC applications. Frameworks like LiveKit Agents and Pipecat can also be used to develop your own custom function calls.
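CrewAI, LiveKit Agents, and Pipecat each have their own way of registering tools, so the following is a framework-agnostic Python sketch of the core step they automate: the model emits a tool call as JSON, the application runs the matching function, and the result is sent back to the model. The tool functions and the `{"name": ..., "arguments": {...}}` shape are hypothetical, similar in spirit to common function-calling formats but not any specific provider’s schema.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tools; in production these would call real booking/search APIs.
def search_flights(destination: str, date: str) -> Dict[str, Any]:
    return {"destination": destination, "date": date, "options": ["18:05", "20:40"]}


def get_weather(city: str) -> Dict[str, Any]:
    return {"city": city, "forecast": "sunny"}


TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "search_flights": search_flights,
    "get_weather": get_weather,
}


def run_tool_call(tool_call_json: str) -> str:
    """Execute one model-issued tool call and return a JSON string that can
    be passed back to the model on the next turn."""
    call = json.loads(tool_call_json)
    tool = TOOLS.get(call.get("name"))
    if tool is None:
        return json.dumps({"error": f"unknown tool {call.get('name')!r}"})
    try:
        result = tool(**call.get("arguments", {}))
    except TypeError as exc:  # wrong or missing arguments (or a bug in the tool)
        return json.dumps({"error": str(exc)})
    return json.dumps(result)


print(run_tool_call(
    '{"name": "search_flights", "arguments": {"destination": "MIA", "date": "2025-05-01"}}'
))
```

Returning errors as JSON instead of raising lets the model recover, for example by asking the caller for a missing detail.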

Enhancing Feedback Through Artifacts

Feedback in voice AI interactions enhances user trust and usability. Structured artifacts (such as JSON or HTML) help visualize LLM actions alongside voice conversations, making it easier for users to understand the AI-driven processes. A practical format discussed was:

<artifact identifier="d3adb33f" type="application/json" title="Example Artifact">

... content ...

</artifact>

Recently, OpenAI has shared some examples of this approach.

Real-time browser visualization, previously challenging, is now increasingly feasible within modern WebRTC-supported environments. We will explore this in future blog posts.
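A client that receives replies in the artifact format above has to separate the structured part, to render on screen, from the text to speak. This is a minimal Python sketch that assumes the attributes always appear in the order shown in the example and that JSON artifacts contain valid JSON; a production parser should be more forgiving. The helper names are hypothetical. The extracted artifacts could then be pushed to the browser, for instance over a WebRTC data channel, while the remaining text goes to TTS.

```python
import json
import re
from typing import Any, Dict, List

_ARTIFACT = re.compile(
    r'<artifact identifier="(?P<id>[^"]+)" type="(?P<type>[^"]+)" '
    r'title="(?P<title>[^"]*)">(?P<body>.*?)</artifact>',
    re.DOTALL,
)


def extract_artifacts(model_output: str) -> List[Dict[str, Any]]:
    """Collect every <artifact> block; JSON bodies are parsed, others kept as text."""
    artifacts = []
    for match in _ARTIFACT.finditer(model_output):
        body = match.group("body").strip()
        is_json = match.group("type") == "application/json"
        artifacts.append({
            "id": match.group("id"),
            "title": match.group("title"),
            "content": json.loads(body) if is_json else body,
        })
    return artifacts


def strip_artifacts(model_output: str) -> str:
    """Text left to speak once artifact blocks are removed."""
    return " ".join(_ARTIFACT.sub("", model_output).split())


reply = ('Here are two options. <artifact identifier="d3adb33f" type="application/json" '
         'title="Flight options">{"flights": ["18:05", "20:40"]}</artifact> Which one works?')
print(extract_artifacts(reply))
print(strip_artifacts(reply))  # Here are two options. Which one works?
```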

Observability is a must. Evaluation using LLM Judges is optional.

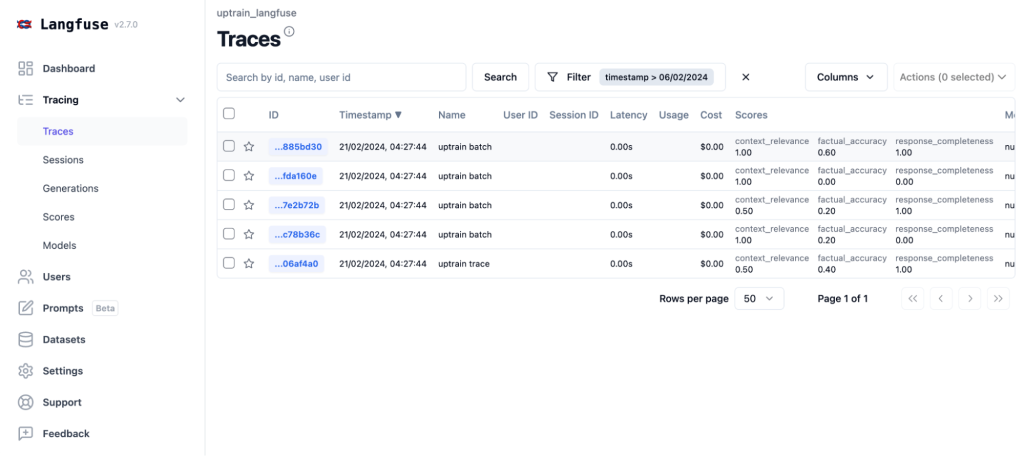

Incorporating observability tools like Langfuse into your deployment pipeline helps identify issues early and enables effective A/B testing. Metrics-driven development supports continuous improvement.
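Whatever tool you choose, per-stage latency is one of the most useful signals in a voice pipeline. The sketch below does not use Langfuse’s API; it is a minimal, framework-agnostic Python example that times hypothetical stages (such as STT, LLM, and TTS) and reports a 95th-percentile latency, the kind of data an observability platform collects and charts for you.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import Dict, Iterator, List

# Collected durations per pipeline stage, in milliseconds.
stage_timings: Dict[str, List[float]] = defaultdict(list)


@contextmanager
def traced(stage: str) -> Iterator[None]:
    """Record how long one pipeline stage takes, even if it raises."""
    start = time.perf_counter()
    try:
        yield
    finally:
        stage_timings[stage].append((time.perf_counter() - start) * 1000.0)


def p95(values: List[float]) -> float:
    """Nearest-rank 95th percentile: the smallest sample such that at least
    95% of all samples are less than or equal to it."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer math
    return ordered[max(rank, 1) - 1]


# Usage: wrap each stage of a (hypothetical) voice turn.
with traced("llm"):
    time.sleep(0.02)  # stand-in for a model call
print(f"llm p95: {p95(stage_timings['llm']):.0f} ms")
```

Tracking a high percentile rather than the average matters for voice, since occasional slow turns are what users notice as awkward pauses.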

Automated evaluations employing LLM judges offer scalable, consistent performance assessments. For example, evaluating a travel AI agent would involve:

- Generating synthetic user scenarios

- Automated transcript analysis with structured outcomes

- Complementary human oversight to refine automated judgments
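The "structured outcomes" step could look like the minimal Python sketch below: a judge prompt that asks for a fixed JSON shape, plus a validator that rejects malformed verdicts so they can be routed to human review. The rubric fields and scale are hypothetical, and the call to the judge model itself is left out.

```python
import json
from dataclasses import dataclass
from typing import List

# Hypothetical rubric for judging a travel-agent transcript.
JUDGE_PROMPT = """You are evaluating a conversation between a travel voice agent and a user.
Return only a JSON object with these keys:
  "task_completed": true or false,
  "missing_info": list of strings,
  "score": integer from 1 to 5,
  "rationale": one short sentence.

Scenario: {scenario}
Transcript:
{transcript}"""


@dataclass
class Verdict:
    task_completed: bool
    missing_info: List[str]
    score: int
    rationale: str


def parse_verdict(raw: str) -> Verdict:
    """Validate the judge's reply; raise ValueError so malformed verdicts can be
    sent to human review instead of silently skewing the metrics."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"judge did not return JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("verdict must be a JSON object")
    if not isinstance(data.get("task_completed"), bool):
        raise ValueError("task_completed must be a boolean")
    score = data.get("score")
    if not isinstance(score, int) or isinstance(score, bool) or not 1 <= score <= 5:
        raise ValueError(f"score must be an integer from 1 to 5, got {score!r}")
    return Verdict(
        task_completed=data["task_completed"],
        missing_info=[str(item) for item in data.get("missing_info", [])],
        score=score,
        rationale=str(data.get("rationale", "")),
    )


prompt = JUDGE_PROMPT.format(scenario="Book an evening flight to Miami",
                             transcript="user: I'd like to book a flight...")
```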

Cost-Effective Model Adaptation with LoRA

Full fine-tuning of large LLMs is computationally intensive and often cost-prohibitive for many organizations. Instead, techniques like Low-Rank Adaptation (LoRA) offer a more efficient alternative: the original weights stay frozen, and small, trainable low-rank matrices are injected into the model. This allows you to fine-tune specific behaviors while dramatically reducing both training time and infrastructure costs. LoRA training can be run on platforms such as Amazon SageMaker, and managed services such as OpenAI’s Fine-Tuning API offer a hosted route to similar customization.
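In the original LoRA formulation, a layer computes h = W x + (alpha / r) * B A x, where W stays frozen and only the low-rank factors A and B are trained. B is typically initialized to zero, so training starts from the unchanged base model. The pure-Python sketch below is for illustration only (real training would use a framework such as Hugging Face’s PEFT library), and the 4096 x 4096 layer with rank 8 is an assumed example size.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float) -> Vector:
    """h = W x + (alpha / r) * B (A x).

    W (d x k) is the frozen pretrained weight; only A (r x k) and
    B (d x r) are trained, where r is much smaller than d and k.
    """
    r = len(A)
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [h + (alpha / r) * u for h, u in zip(base, update)]


def trainable_params(d: int, k: int, r: int) -> Tuple[int, int]:
    """(full fine-tuning, LoRA) trainable parameter counts for one d x k matrix."""
    return d * k, r * (d + k)


full, lora = trainable_params(4096, 4096, 8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

For this one matrix, LoRA trains about 0.4% as many parameters as full fine-tuning, which is where the savings in memory and training time come from.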

Another effective strategy is distillation, where a smaller student model is trained to mimic the behavior of a larger teacher model. Distilled models, often called small language models, retain much of the original model’s capabilities but are faster and better suited to latency-sensitive environments.
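The core of classic knowledge distillation is a loss that pulls the student’s output distribution toward the teacher’s. Below is a minimal pure-Python sketch of the soft-target term from Hinton et al.’s formulation: both sets of logits are softened with a temperature T, compared with KL divergence, and scaled by T squared. The logits are made-up numbers, and in practice this term is usually combined with an ordinary cross-entropy loss on the true labels.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float) -> List[float]:
    """Temperature-scaled softmax; higher T gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float], student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [3.0, 1.0, 0.2]
close_student = [2.5, 1.0, 0.3]
far_student = [0.2, 1.0, 3.0]
print(distillation_loss(teacher, close_student) < distillation_loss(teacher, far_student))  # True
```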

Multimodal Speech Language Models (SLMs)

Models like Ultravox v0.5 and GPT-4o-mini now process audio directly, bypassing the separate speech-to-text (STT) stage of a traditional pipeline and, in speech-to-speech models such as GPT-4o-mini’s realtime variant, the text-to-speech (TTS) stage as well. This architectural shift unlocks new capabilities, especially in conversational fluidity, natural turn-taking, and nuanced understanding, where Multimodal Speech Language Models (SLMs) increasingly outperform legacy pipelines.

Although SLMs offer a more integrated and expressive conversational experience, accuracy benchmarks still favor traditional pipelines, which allow developers to pair best-in-class STT and TTS components for maximum precision.

Despite their promise, SLMs haven’t yet closed the latency gap. According to a recent WebRTC Hacks analysis (April 2025), OpenAI’s WebRTC-based Realtime API still exhibits end-to-end latencies above one second. In contrast, well-optimized pipeline-based voice assistants often respond in 100–300ms for short queries.

In summary: SLMs are a powerful leap forward in conversational AI design, offering more natural and emotionally responsive interactions. However, they remain less mature than traditional pipelines, with fewer tooling options and less widespread production support, though that gap is closing quickly.

Final Thoughts

Voice AI development is rapidly evolving, blending lessons from text-based prompt engineering with unique considerations for real-time audio/video systems. By applying these strategies, ranging from optimizing prompts to leveraging hybrid retrieval methods, engineering teams can deliver high-performance solutions tailored to modern WebRTC environments.

At WebRTC.ventures, we specialize in building custom voice and video AI solutions that meet rigorous performance, security, and scalability standards. If you’re ready to bring your vision to life, let’s collaborate!